Visual Workflow Mocking and Testing with Specmatic and Arazzo API Specifications

By Hari Krishnan

Table of Contents

- Introduction

- Why visual Arazzo authoring and Specmatic for API workflow testing

- Authoring an Arazzo workflow visually

- From Arazzo spec to coordinated workflow mocks

- Transitioning from mocks to API workflow testing against real services

- Interpreting test results and coverage

- Practical example: running an entire workflow test locally

- Best practices for reliable API workflow testing

- Troubleshooting common issues

- How we measure success with API workflow testing

- Conclusion

- FAQ

API workflow testing with Arazzo and Specmatic: Visual authoring, workflow mocking, and backend verification

Here we’ll walk through a practical approach to API workflow testing using the Arazzo API specification and Specmatic. In this demonstration we explain how we author Arazzo specs visually, generate accurate YAML, spin up coordinated workflow mocks for front-end development, and transition the same specification into comprehensive API workflow testing against real backend services. This guide focuses on the steps, decisions, and best practices we use to keep front-end and backend teams productive while ensuring reliable behavior across multi-service journeys.

Introduction

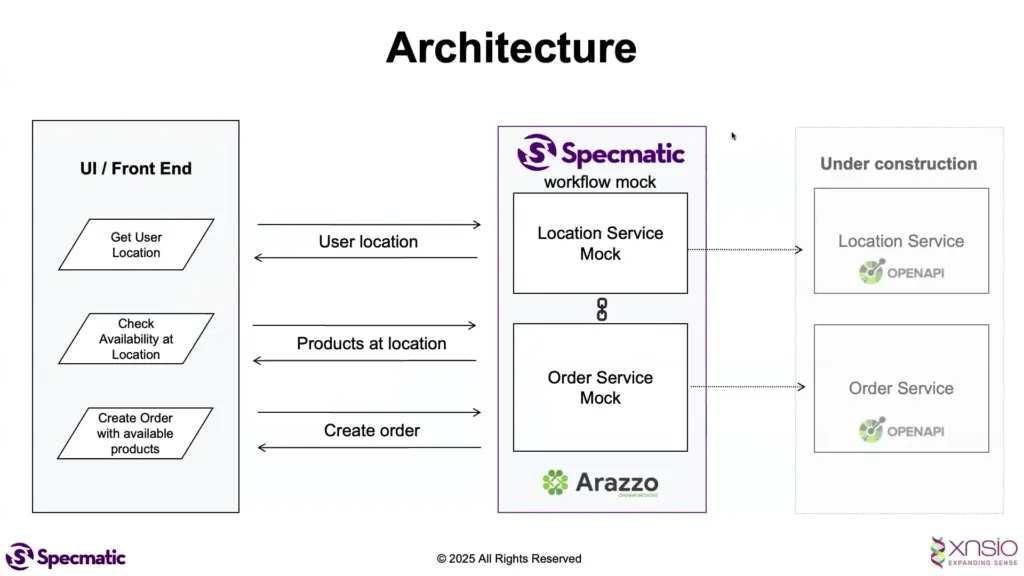

We often build user journeys that span multiple backend services: locate the user, fetch catalog items for their location, and finally create an order. That simple sequence becomes complex when responses conditionally determine the next step. To maintain developer velocity and quality, we apply Arazzo specifications and Specmatic to support both workflow mocking and API workflow testing. Using a single source of truth — the Arazzo API spec — we can mock coordinated service responses for front-end development and run accurate multi-service tests against staging or local backends. Throughout this guide we emphasize how the same Arazzo spec enables both mocks and tests, and how Specmatic orchestrates the process to deliver deterministic, reproducible results for API workflow testing.

Why visual Arazzo authoring and Specmatic for API workflow testing

We chose Arazzo as our API specification standard because it provides a clear, machine-readable contract for service behavior, servers, and data shapes. Specmatic complements Arazzo by using that contract to:

- Generate data-driven mocks that represent entire workflows spanning multiple services.

- Validate end-to-end behavior with API workflow testing that follows the same paths the front end will exercise.

- Remove manual YAML authoring, reducing human error and speeding iteration.

When we say “workflow”, we mean a chained sequence of operations across services where each step’s outcome controls subsequent steps. API workflow testing captures these multi-call journeys and validates their behavior end-to-end, rather than relying only on unit tests or single-operation contract checks. Because we author the Arazzo spec visually, the specification captures success criteria, error paths, and branching logic in a way that supports both mocking and testing.

Authoring an Arazzo workflow visually

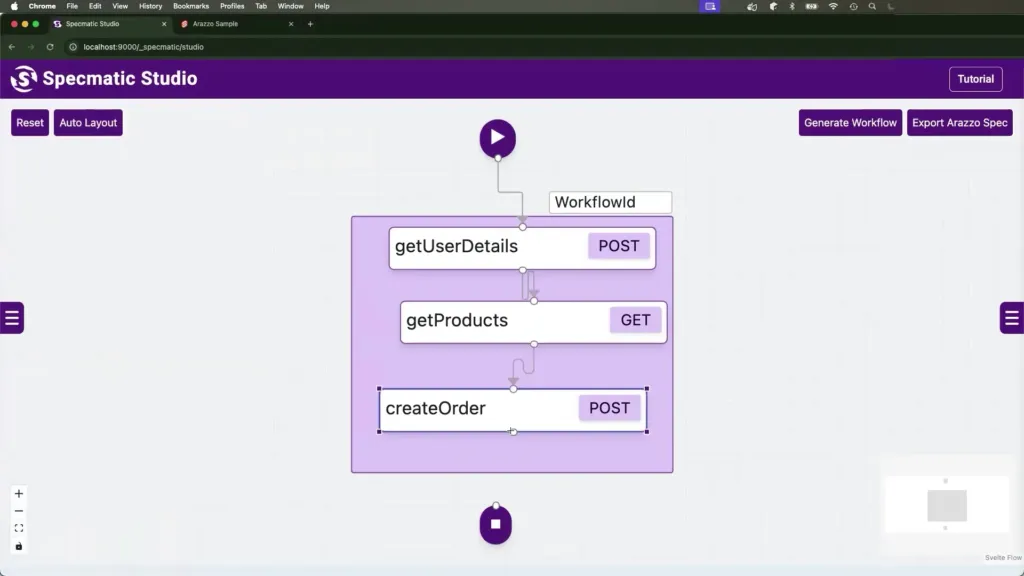

Authoring Arazzo specifications by hand can be tedious and error-prone. Instead, we prefer a visual model where we can drag operations, connect them to show order, and let tooling generate the precise YAML. Our workflow for a typical order placement journey looks like this:

- Get user location (location service)

- Get available products at that location (order/catalog service)

- Place an order (order service)

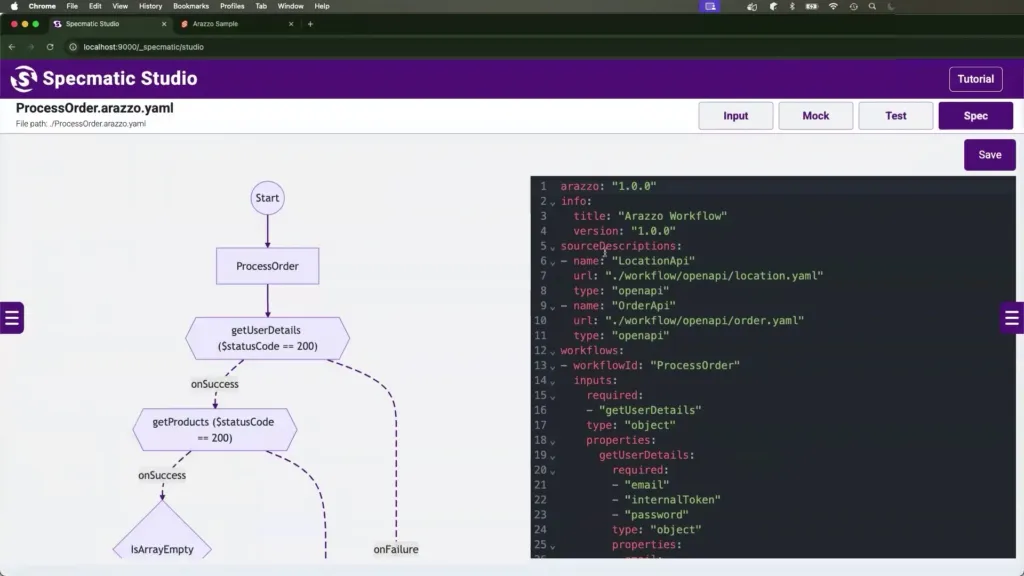

We drag operations from each service specification to a canvas and link them to define the sequence. The visual canvas does more than handle layout: it communicates the expected paths the application will follow. Specmatic uses that linkage to infer the branching logic: which response codes count as success and continue the flow, and which ones terminate the journey.

For example, when the “Get user location” call returns a 2xx status, we continue to the “Get products” step. If it returns a non-2xx status, the workflow terminates or takes an alternate path. Specmatic identifies these success criteria for each operation automatically from the Arazzo spec and the responses defined in it.
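The 2xx rule described above can be sketched in a few lines of Python. This is only an illustration of the decision, not Specmatic's implementation; the step names and the `ORDER_FLOW` mapping are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical step names mirroring the order placement journey.
ORDER_FLOW: Dict[str, Optional[str]] = {
    "getUserLocation": "getProducts",
    "getProducts": "placeOrder",
    "placeOrder": None,  # last step: nothing follows
}


def next_step(current_step: str, status_code: int,
              flow: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the following step on a 2xx response, or None to end the journey."""
    if 200 <= status_code < 300:
        return flow.get(current_step)
    return None


print(next_step("getUserLocation", 200, ORDER_FLOW))
print(next_step("getUserLocation", 404, ORDER_FLOW))
```

A real workflow could route non-2xx responses to an alternate step instead of ending; here every failure simply terminates the journey.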

Once the visual model is complete, we click “Generate workflow” and obtain a fully accurate Arazzo YAML specification. This YAML is the canonical contract we will use for both mocks and tests. Because the spec came from a visual source, it is significantly easier to review and maintain, and we avoid many pitfalls of hand-editing YAML such as indentation errors, missing schema fields, or mismatched response examples.
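To give a feel for what a generated workflow might contain, here is a rough outline of an Arazzo document, written as a Python dictionary rather than YAML so it can be inspected in code. The top-level field names follow the Arazzo specification; the file names, operation IDs, and step IDs are assumptions made for illustration and are not taken from the actual generated output.

```python
# Hypothetical file names and operation IDs; only the field names
# (arazzo, info, sourceDescriptions, workflows, steps, successCriteria)
# come from the Arazzo specification.
ARAZZO_DOC = {
    "arazzo": "1.0.0",
    "info": {"title": "Order placement workflow", "version": "1.0.0"},
    "sourceDescriptions": [
        {"name": "location", "url": "./location-service.yaml", "type": "openapi"},
        {"name": "order", "url": "./order-service.yaml", "type": "openapi"},
    ],
    "workflows": [
        {
            "workflowId": "placeOrder",
            "steps": [
                {
                    "stepId": "getUserLocation",
                    "operationId": "$sourceDescriptions.location.getUserLocation",
                    "successCriteria": [{"condition": "$statusCode == 200"}],
                },
                {
                    "stepId": "getProducts",
                    "operationId": "$sourceDescriptions.order.getProducts",
                    "successCriteria": [{"condition": "$statusCode == 200"}],
                },
                {
                    "stepId": "createOrder",
                    "operationId": "$sourceDescriptions.order.createOrder",
                    "successCriteria": [{"condition": "$statusCode == 201"}],
                },
            ],
        }
    ],
}


def step_ids(doc: dict, workflow_id: str) -> list:
    """List the step IDs of one workflow, in execution order."""
    for workflow in doc["workflows"]:
        if workflow["workflowId"] == workflow_id:
            return [step["stepId"] for step in workflow["steps"]]
    raise KeyError(workflow_id)


print(step_ids(ARAZZO_DOC, "placeOrder"))
```

Reviewing a structure like this step by step is usually easier than scanning raw YAML, which is part of the appeal of generating it from a visual model.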

From Arazzo spec to coordinated workflow mocks

One of the immediate benefits of the Arazzo + Specmatic workflow is that we can spin up a workflow mock that coordinates responses across services. This lets front-end engineers proceed without waiting for the backend implementation to be complete and without risking flaky tests due to external dependencies.

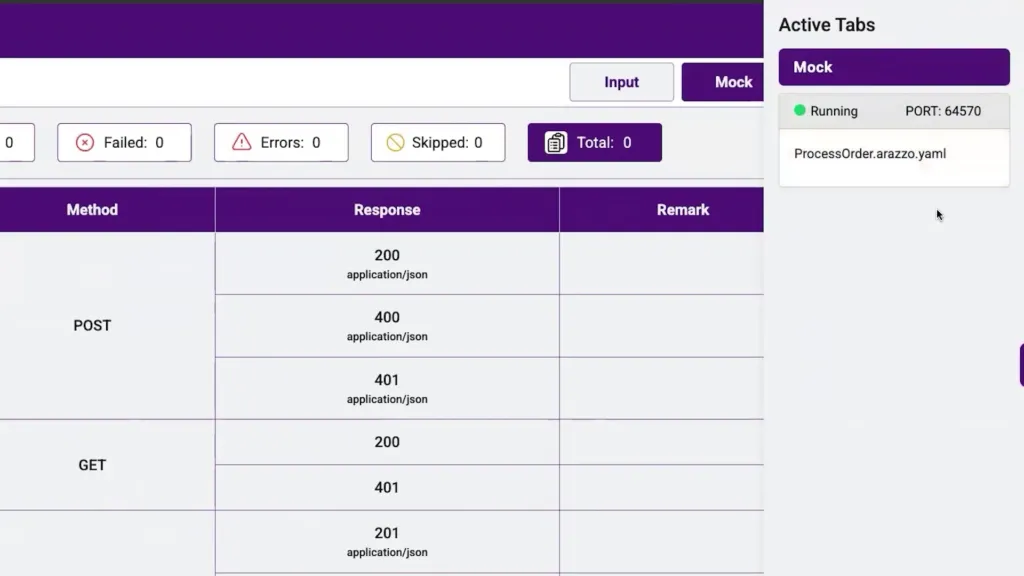

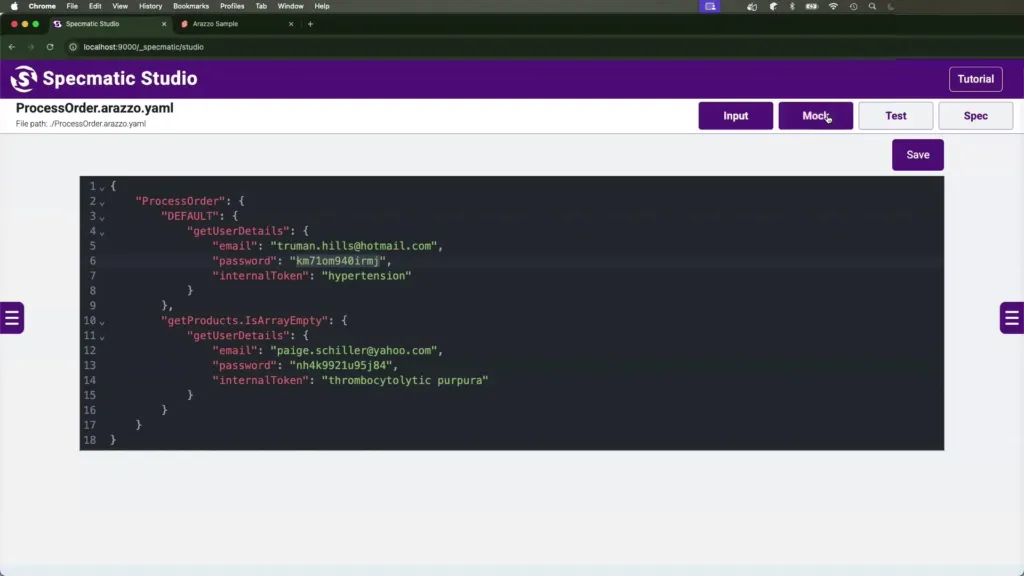

When we start the workflow mock, Specmatic uses the Arazzo spec to generate stub data and journey inputs. The mock is aware of multiple possible journeys (happy and unhappy paths) and provides datasets for each. With a running mock, we can launch the front end and interact with it as if the real services were present.

In practice, we test the happy path first. Specmatic provides sample credentials and payloads that match the generated stub database. We use those credentials in the front-end UI, log in, and the workflow mock returns a 200 response from the location service, then returns available products for that location. The front end then proceeds to the order creation step. The mock logs each call and its response, so we can validate that the expected API contract was followed.

Data-driven journeys for realistic mocking

We design our Arazzo spec and examples such that the mock contains multiple journey contexts. For the order placement workflow we typically include at least two contexts:

- Default/happy path: location found, non-empty product list, order created successfully.

- Unhappy path: location found but no products available, order flow stops, and the front end displays an appropriate message.

Switching contexts in the mock is trivial — we pick a different input dataset and re-run the front end interaction to validate alternate user experiences. Because the Arazzo spec defines the response schemas and examples, the mock responses remain consistent and realistic.
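As a minimal sketch of the idea, journey contexts can be thought of as named datasets that a mock consults when answering each operation. The context names, operations, and payloads below are invented for illustration; the real mock derives its data from the spec.

```python
# Hypothetical journey contexts: each maps an operation to a canned response.
JOURNEY_CONTEXTS = {
    "happy": {
        "location": {"status": 200, "body": {"city": "Pune"}},
        "products": {"status": 200, "body": [{"id": 1, "name": "Widget"}]},
        "order": {"status": 201, "body": {"orderId": 101}},
    },
    "no_products": {
        "location": {"status": 200, "body": {"city": "Pune"}},
        "products": {"status": 200, "body": []},
    },
}


def mock_response(context: str, operation: str) -> dict:
    """Look up the canned response for an operation in the chosen context."""
    return JOURNEY_CONTEXTS[context][operation]


def can_place_order(context: str) -> bool:
    """The front end should only offer ordering when products are available."""
    products = mock_response(context, "products")
    return products["status"] == 200 and len(products["body"]) > 0


print(can_place_order("happy"))
print(can_place_order("no_products"))
```

Switching from the happy path to the unhappy path is then just a matter of passing a different context name.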

Transitioning from mocks to API workflow testing against real services

Mocking is indispensable for front-end development. However, when we start integrating real back-end services, we need to run API workflow testing — that is, execute the same multi-call journeys against actual services and validate the end-to-end behavior. Using the same Arazzo spec makes this transition smooth.

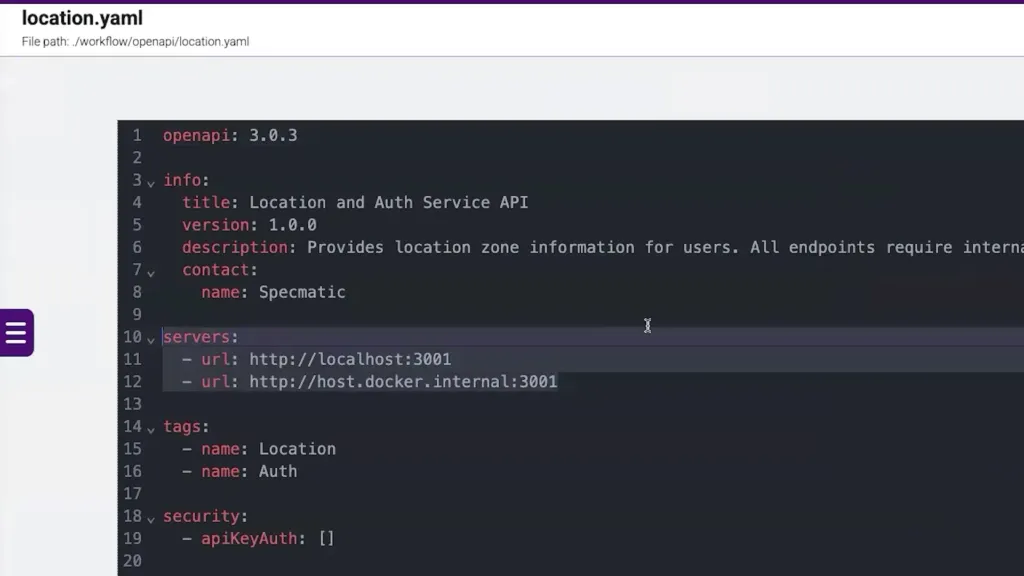

To move into API workflow testing we switch the Specmatic context from “mock” to “test”. In test mode, Specmatic will make real HTTP calls to the servers defined in the Arazzo spec. Because the Arazzo spec includes server URLs for each service, Specmatic knows exactly where to send requests without additional configuration.

Before running the test, we ensure that the backend services (for example, the location service and the order service) are running locally or in a test environment. We also prepare input data that matches what the services expect in their databases. For example, if the test uses an email credential to fetch a user’s location, our database must have a user record for that email. Specmatic validates our input against the Arazzo schema before executing the test, which helps catch mismatches early.
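The kind of early input check described here can be approximated with a small hand-rolled validator. This is a simplified stand-in, not Specmatic's validation logic, and the field names in `INPUT_SCHEMA` are hypothetical.

```python
# Hypothetical input schema: field name -> expected Python type.
INPUT_SCHEMA = {"email": str, "productId": int, "quantity": int}


def validate_input(data: dict, schema: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field, expected_type in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], expected_type):
            errors.append(
                f"wrong type for {field}: expected {expected_type.__name__}"
            )
    return errors


print(validate_input(
    {"email": "jane@example.com", "productId": 1, "quantity": 2}, INPUT_SCHEMA
))
print(validate_input({"email": "jane@example.com", "productId": "1"}, INPUT_SCHEMA))
```

Running a check like this before any HTTP call is made means a typo in the test data shows up as a clear message rather than as a confusing downstream failure.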

How Specmatic discovers service endpoints from the Arazzo spec

There is no need to configure endpoint URLs separately in the test runner. We rely on the “servers” section of the Arazzo YAML for each service. Specmatic reads the server definitions and routes requests accordingly. That means a change to where a service runs only needs to be made in the spec, keeping orchestration declarative and centralized.
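Conceptually, routing from declared servers amounts to joining a service's base URL with an operation path. The sketch below assumes the server entries have already been read into a dictionary; the `SERVERS` mapping and the paths are hypothetical, and the ports are the ones used in the local example later in this guide.

```python
# Hypothetical mapping of service name -> base URL, as it might be read
# from the server definitions in the spec.
SERVERS = {
    "location": "http://localhost:3000",
    "order": "http://localhost:3001/",
}


def resolve_url(service: str, path: str) -> str:
    """Join a service's base URL and an operation path with exactly one slash."""
    base = SERVERS[service].rstrip("/")
    return f"{base}/{path.lstrip('/')}"


print(resolve_url("location", "/location"))
print(resolve_url("order", "products"))
```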

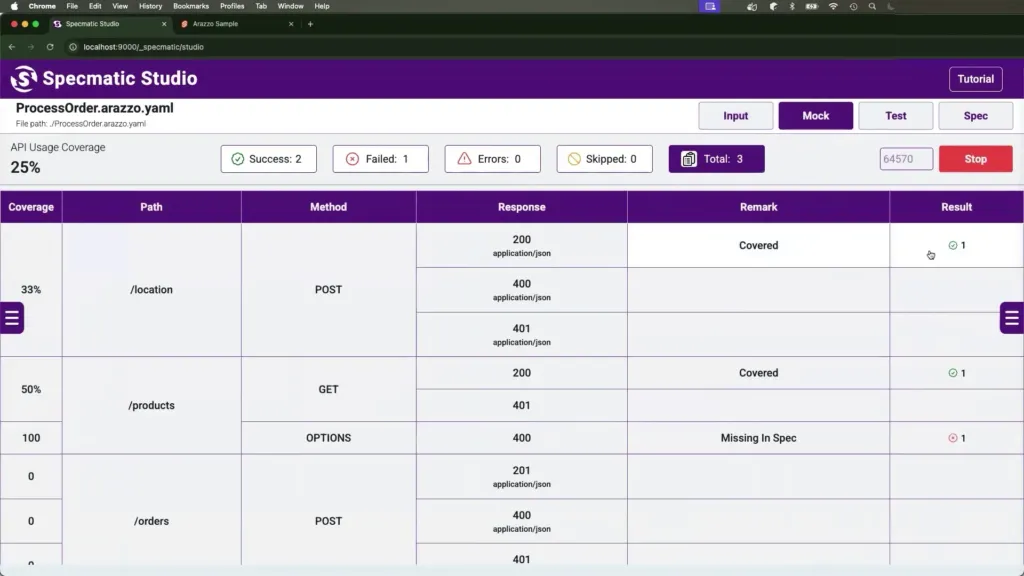

Interpreting test results and coverage

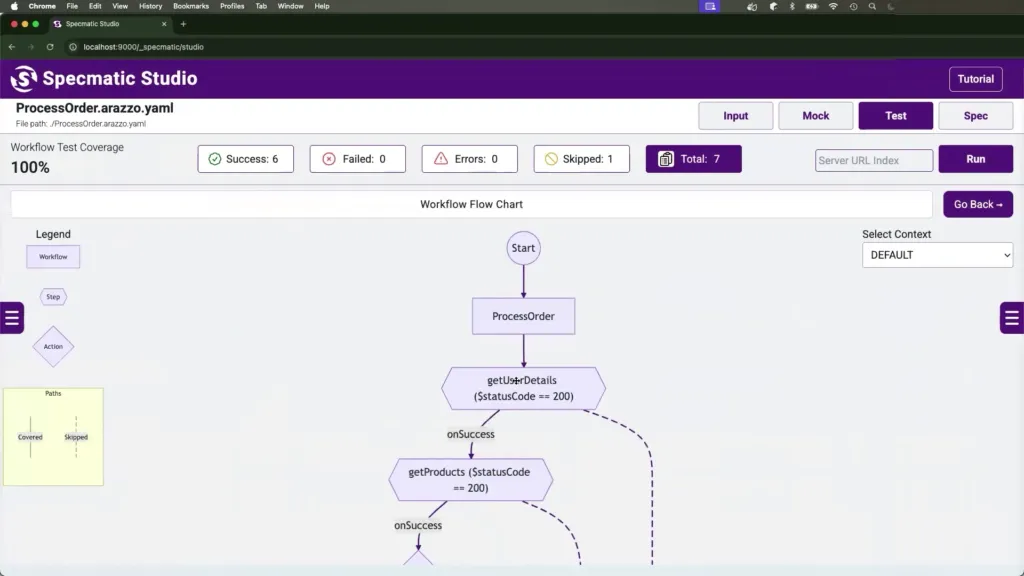

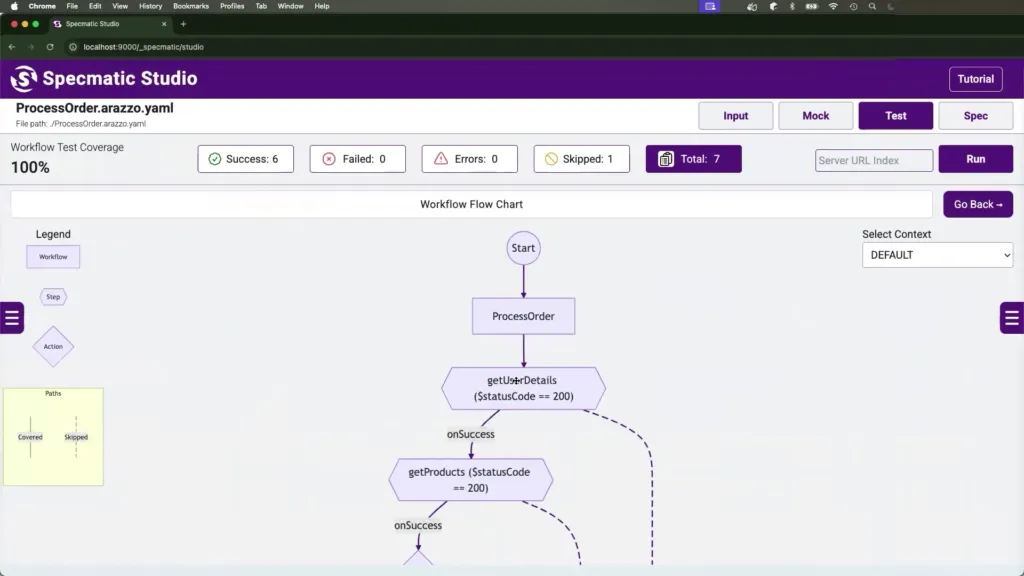

When we run API workflow testing, Specmatic produces both a visual flowchart of executed paths and detailed request/response logs for every operation. The flowchart highlights which journey context was executed and whether the path matched the expected success criteria defined in the Arazzo spec.

Coverage reporting is extremely valuable: Specmatic evaluates whether each defined workflow path was exercised and reports the percentage of workflow coverage achieved by the test run. For our simple order workflow we typically see 100% API workflow coverage when both the happy and unhappy paths are executed.
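The coverage figure can be understood as a simple ratio of exercised paths to defined paths. The function below is our own illustration of that arithmetic, with hypothetical path names; it is not how Specmatic necessarily computes its report.

```python
def workflow_coverage(defined_paths, executed_paths) -> float:
    """Percentage of defined workflow paths that were exercised at least once."""
    defined = set(defined_paths)
    if not defined:
        return 0.0
    covered = defined & set(executed_paths)
    return 100.0 * len(covered) / len(defined)


# Hypothetical path names for the order workflow.
DEFINED = ["happy", "no_products"]

print(workflow_coverage(DEFINED, ["happy", "no_products"]))
print(workflow_coverage(DEFINED, ["happy"]))
```

With two defined paths, running only the happy path yields 50%, which is why we make sure the unhappy path is part of every run.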

We can drill down from the flowchart into each operation to inspect request payloads, response bodies, headers, and validation errors. This detail helps us answer questions like:

- Did the service return a response that conforms to the Arazzo schema?

- Were the success criteria for this operation (e.g., a 2xx status) satisfied?

- If an operation failed, what was the failure mode and how did it affect downstream steps?

Practical example: running an entire workflow test locally

We outline a minimal, repeatable sequence to run a local API workflow test against two services (location and order):

- Start both backend services on known ports (e.g., location service on port 3000, order service on port 3001).

- Ensure the Arazzo YAML contains the correct “servers” entries for both services.

- Prepare test input that corresponds to records in the local database (e.g., user emails used in the mock’s examples).

- Switch Specmatic from mock to test mode and run the workflow test.

- Review the flowchart for executed paths and drill into operations for details.

This process gives us two important assurances: first, the contract in the Arazzo spec remains the single source of truth; second, the workflow behavior that we developed against mocks is validated against real code and real data.
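To make the sequence concrete, here is a small sketch of what a workflow run does in principle: call each step in order and stop at the first non-2xx response. The transport is injected so the sketch runs without real services; the step names, URLs, and the `fake_send` function are all hypothetical, and none of this reflects Specmatic's internals.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, str, str]  # (step name, HTTP method, URL)


def run_workflow(steps: List[Step],
                 send: Callable[[str, str], int]) -> List[Tuple[str, int]]:
    """Execute steps in order, stopping after the first non-2xx status."""
    results = []
    for name, method, url in steps:
        status = send(method, url)
        results.append((name, status))
        if not 200 <= status < 300:
            break
    return results


# Hypothetical steps against the local ports mentioned above.
STEPS: List[Step] = [
    ("getUserLocation", "GET", "http://localhost:3000/location"),
    ("getProducts", "GET", "http://localhost:3001/products"),
    ("createOrder", "POST", "http://localhost:3001/orders"),
]


def fake_send(method: str, url: str) -> int:
    """Stand-in transport: pretend the order endpoint is failing."""
    return 500 if url.endswith("/orders") else 200


print(run_workflow(STEPS, fake_send))
```

Swapping `fake_send` for a function that issues real HTTP requests would turn the same loop into a crude test against running services.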

Best practices for reliable API workflow testing

Over time we converged on practices that make API workflow testing with Arazzo and Specmatic both dependable and efficient:

- Design for contexts: Define distinct journey contexts (happy path, boundary conditions, error paths) in your Arazzo spec so both mocks and tests can exercise them.

- Keep servers declarative: Maintain server URLs in the Arazzo YAML to avoid duplicating configuration across tools.

- Use realistic examples: Populate spec examples and mock datasets with realistic, but non-sensitive, test data so front-end flows and tests reveal true integration issues.

- Validate inputs early: Leverage Specmatic’s input validation against the Arazzo schema to catch schema mismatches before calls execute.

- Automate workflow tests: Integrate API workflow testing into CI pipelines so that multi-service regressions are caught quickly.

- Version your Arazzo spec: Treat the spec as code and version it with the service repositories so changes remain auditable and consistent.

Practicing these habits reduces surprises during integration and makes our API workflow testing more predictable and actionable.

Troubleshooting common issues

We encounter a few recurring problems when moving from mocks to tests. Here’s how we address them:

1. Test data mismatch

Symptoms: The mock returns expected results but the test run against local services fails or returns empty data. Reason: The local database lacks records matching the test inputs.

Fix: Seed the database with test fixtures that match the examples in the Arazzo spec. Where possible, script fixture loading into CI jobs, or use containerized databases initialized with known state for tests.
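A fixture-loading script can be very small. The sketch below uses an in-memory SQLite database purely for illustration; the table layout and the example users are assumptions, and a real service would use its own schema and database.

```python
import sqlite3

# Hypothetical fixtures that mirror the examples used in the spec.
SPEC_EXAMPLE_USERS = [
    ("jane@example.com", "Pune"),
    ("sam@example.com", "Mumbai"),
]


def seed_users(conn: sqlite3.Connection, users) -> None:
    """Create the users table if needed and upsert the fixture rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, city TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO users (email, city) VALUES (?, ?)", users
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
seed_users(conn, SPEC_EXAMPLE_USERS)
seed_users(conn, SPEC_EXAMPLE_USERS)  # safe to run twice
print(conn.execute("SELECT COUNT(*) FROM users").fetchone()[0])
```

Making the seeding idempotent means the same script can run at the start of every CI job without first checking the database state.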

2. Servers defined incorrectly

Symptoms: Specmatic attempts to hit the wrong host or port. Reason: The Arazzo YAML contains stale or incorrect server entries.

Fix: Update the servers section in the Arazzo YAML to the correct hosts/ports. Keep environment-specific overrides in a controlled place (for example, environment variables or separate spec files per environment) and ensure Specmatic reads the right spec for the target environment.
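One way to keep environment-specific overrides in a controlled place is to let an environment variable take precedence over the default server entry. The variable naming scheme (`<SERVICE>_SERVICE_URL`) and the defaults below are our own assumptions, not a Specmatic convention.

```python
import os
from typing import Mapping, Optional

# Hypothetical defaults, matching the local ports used in this guide.
DEFAULT_SERVERS = {
    "location": "http://localhost:3000",
    "order": "http://localhost:3001",
}


def server_for(service: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return the override from the environment, else the default entry."""
    env = os.environ if env is None else env
    return env.get(f"{service.upper()}_SERVICE_URL", DEFAULT_SERVERS[service])


print(server_for("location", env={}))
print(server_for("order", env={"ORDER_SERVICE_URL": "https://staging.example.com"}))
```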

3. Schema drift

Symptoms: Responses do not validate against the Arazzo schema. Reason: Back-end implementation changed the response structure without updating the spec.

Fix: When tests reveal schema mismatches, we update the Arazzo spec to reflect intended behavior or fix the backend implementation to conform. Treat schema mismatches as first-class bugs and resolve them before continuing further integration.
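When triaging drift, it helps to see exactly which fields differ. The following sketch compares the top-level fields of a response against the fields the spec expects; the field names are hypothetical, and a real check would also cover types and nested structures.

```python
def schema_drift(expected_fields: set, response: dict) -> dict:
    """Report fields the spec expects but the response lacks, and vice versa."""
    actual = set(response)
    return {
        "missing": sorted(expected_fields - actual),
        "unexpected": sorted(actual - expected_fields),
    }


# Hypothetical expectation for an order response.
EXPECTED_ORDER_FIELDS = {"orderId", "status", "total"}

print(schema_drift(
    EXPECTED_ORDER_FIELDS,
    {"orderId": 101, "state": "CREATED", "total": 25.0},
))
```

In this example the backend renamed `status` to `state`, which shows up as one missing and one unexpected field; that pairing is a common signature of a rename.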

How we measure success with API workflow testing

We judge the success of our API workflow testing setup by a few practical metrics:

- Workflow coverage: percentage of defined journey paths exercised by automated tests.

- Time to detect regression: how quickly a failing multi-service interaction is reported in CI.

- Developer velocity: how often front-end work proceeds without waiting on the backend, because the mocks are robust enough to develop against.

- Reproducibility: how consistently tests pass when services and data are in the expected state.

High workflow coverage and low regression detection time typically indicate our Arazzo specifications are accurately capturing expected behavior and that Specmatic tests are effectively validating the behavior across services.

Conclusion

We use Arazzo and Specmatic to bridge the gap between front-end development and backend integration. By authoring Arazzo specs visually, generating accurate YAML, and leveraging Specmatic to produce coordinated workflow mocks and API workflow testing, we streamline development cycles and reduce integration surprises. The same Arazzo spec powers mocks that allow front-end developers to work in isolation and powers tests that validate the real back-end behavior end-to-end. If we prioritize clear contexts, realistic examples, and declarative server configuration, API workflow testing becomes a reliable, repeatable part of our delivery pipeline that improves quality and developer confidence.

We encourage teams to adopt a single, well-maintained Arazzo spec, integrate Specmatic workflow tests into CI, and treat the spec as part of their living documentation. Doing so transforms API workflow testing from a bottleneck into a productivity enabler that keeps multi-service systems robust and predictable.

FAQ

Q: What exactly is API workflow testing?

A: API workflow testing is the practice of executing and validating multi-step interactions across multiple services, using predefined workflows that represent user journeys. API workflow testing verifies that each step behaves as expected and that the overall flow satisfies success criteria. Using Arazzo specs plus Specmatic, we can define these workflows declaratively and run them against mocks or real services to ensure correct behavior.

Q: How does the Arazzo spec enable both mocks and tests?

A: The Arazzo spec describes endpoints, request and response schemas, example payloads, and server locations. Specmatic reads that spec to generate mock responses that align with schema and examples. When switching to test mode, Specmatic uses the server locations in the same Arazzo spec to direct requests to real services. This single source of truth removes duplication and ensures mocks match what we test against.

Q: Do we need separate specs for mock and test?

A: No. We maintain a single Arazzo spec and switch Specmatic modes. The same spec contains examples and server definitions. In mock mode, Specmatic serves generated responses; in test mode, it issues real HTTP calls to servers defined in the spec. If you have multiple environments, you can maintain environment-specific server entries or override servers at runtime, but the API schema and workflow should remain shared.

Q: How do we achieve reliable API workflow testing in CI?

A: For deterministic CI runs, we recommend containerizing services and databases or using a shared test environment that is reset to a known state before workflow tests run. Seed the database with fixtures matching the Arazzo examples and make sure services start consistently. Incorporate Specmatic workflow tests into CI pipelines to detect regressions early.

Q: Can Specmatic handle conditional branching and multiple response codes?

A: Yes. Specmatic uses the Arazzo spec and the visual workflow definition to determine branching logic. It understands that only 2xx responses should continue a happy-path flow, while other codes may branch to error-handling paths. We author workflows with these conditions in mind so tests accurately reflect real-world behavior.

Q: How often should we update the Arazzo spec?

A: Update the Arazzo spec whenever the contract between services changes, whether by design or through accidental drift. Because the spec is the source of truth for both mocks and tests, keeping it current prevents misaligned expectations and integration issues. We review spec updates through the same process as code changes.