Testing MCP servers: How Specmatic MCP Auto-Test Catches Schema Drift and Automates Regression

By Yogesh Nikam

We recently shared a hands-on walkthrough of Specmatic in which we explored practical approaches to testing MCP servers, and in this post we want to expand on that experience. Our team has been building MCP servers for multiple use cases, and as these servers evolved, two problems surfaced repeatedly: the tedium of manual verification, and the danger of schema drift between a tool’s declared schema and its actual implementation. While investigating, we used MCP Inspector to call tools interactively on a remote MCP server. We then built a solution, Specmatic MCP Auto-Test, that automates testing of MCP servers and detects schema mismatches early in the development lifecycle.

Table of Contents

- Why Testing MCP servers matters

- Our starting point: exploring tools with MCP Inspector

- Design goals for a better approach to Testing MCP servers

- Introducing Specmatic MCP Auto-Test

- Resiliency testing: automated exploration of input space

- Detecting schema mismatches—the shift-left advantage

- Key capabilities of Specmatic MCP Auto-Test

- How to get started

- Best practices when Testing MCP servers

- FAQ

- Conclusion and invitation

Why Testing MCP servers matters

When you operate one or more MCP servers, each server exposes a set of tools, and each tool has an input-output schema. These schemas are intended to be a contract between consumers and the implementation. If you change code behind a tool, or deploy a new version of a server, you need confidence that you haven’t introduced regressions or broken that contract. Testing MCP servers is not a one-off task; it’s continuous validation you must build into your workflow.

Two recurring issues drove us to build an automated approach:

- Manual testing is unsustainable: Using an interactive inspector (like MCP Inspector) to click through inputs for every tool quickly becomes boring, slow, and error-prone as the number of tools grows.

- Schema drift and hidden required fields: The declared input schema for a tool can and does get out of sync with the implementation. A field that appears optional in the schema may, in reality, be required at runtime. These edge cases are easy to miss during manual testing.

Our starting point: exploring tools with MCP Inspector

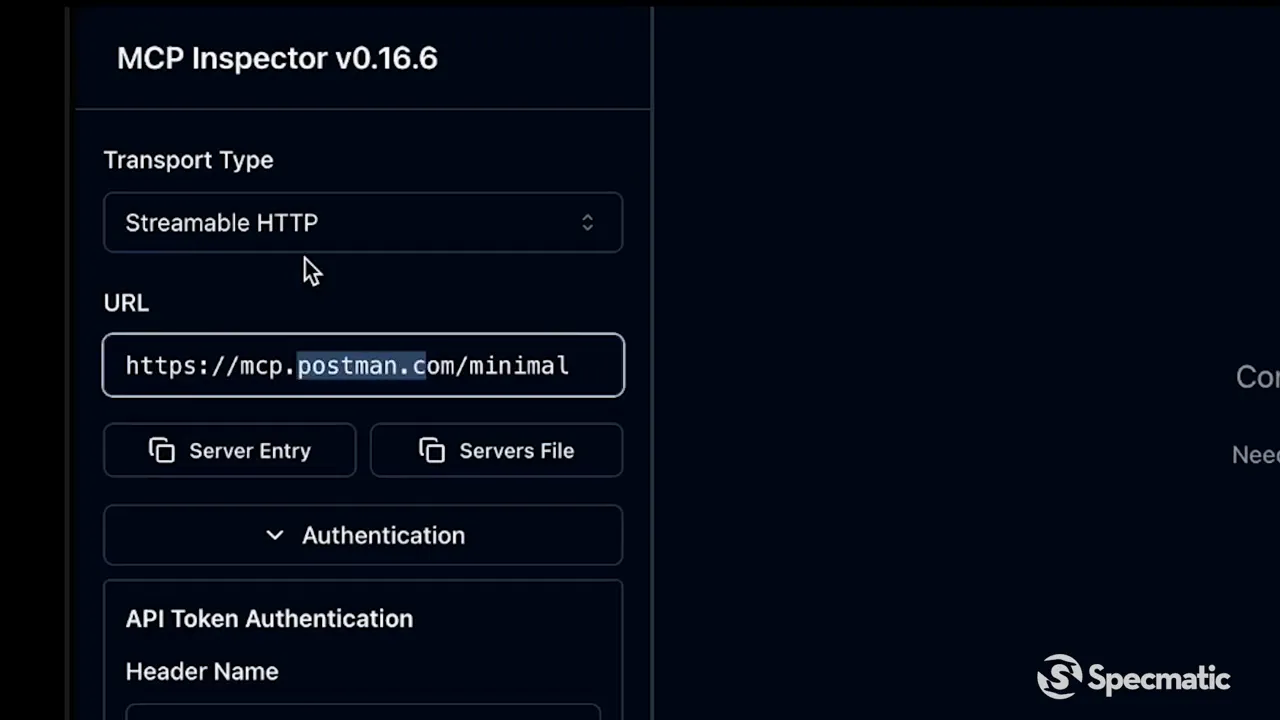

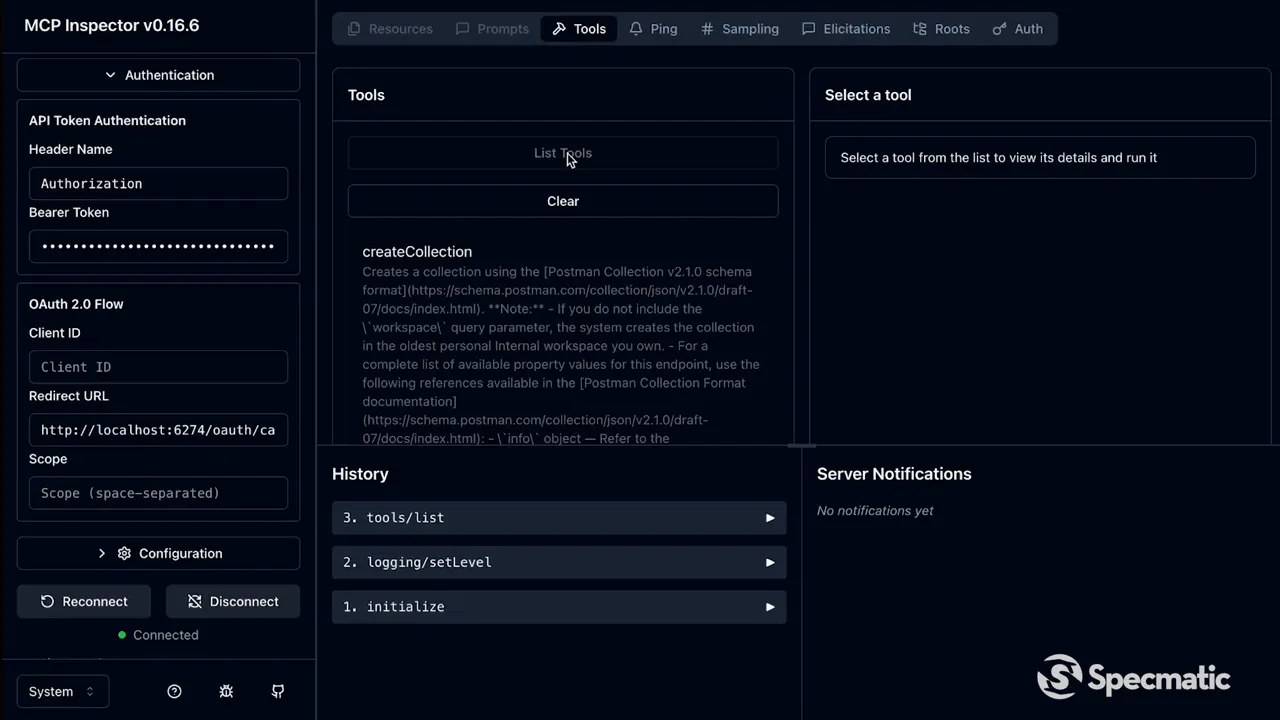

To illustrate the typical manual workflow, we connected MCP Inspector to Postman’s remote MCP server. The inspector makes it easy to list tools, select a tool like create workspace, fill the input form, and invoke the tool manually.

After connecting, we listed the tools exposed by the server and started calling them. Here’s the typical flow we used:

- List tools and pick one to test.

- Inspect the input schema that the inspector surfaces.

- Manually populate the form and invoke the tool.

For a tool like create workspace, manual testing is straightforward: we provided a name and a description, then chose a value from the workspace type enum (personal, public, or team). Each call returned success, and we checked the different enum values by hand.
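Behind the form, each inspector action is a JSON-RPC 2.0 message defined by the MCP specification: tools/list discovers the tools and their input schemas, and tools/call invokes one tool with arguments. The Python sketch below only builds those request payloads; transport and authentication are omitted, and the tool name createWorkspace and its argument names are hypothetical stand-ins, not Postman’s confirmed tool definition.

```python
import json
from itertools import count

# Monotonically increasing request ids; JSON-RPC 2.0 matches responses to requests by id.
_request_ids = count(1)


def rpc_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}


def list_tools_request() -> dict:
    # Ask the server which tools it exposes, including each tool's inputSchema.
    return rpc_request("tools/list", {})


def call_tool_request(name: str, arguments: dict) -> dict:
    # Invoke one tool; `arguments` should satisfy that tool's inputSchema.
    return rpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(json.dumps(list_tools_request()))
    # Hypothetical tool name and arguments mirroring the manual create workspace calls.
    for workspace_type in ("personal", "public", "team"):
        print(json.dumps(call_tool_request(
            "createWorkspace",
            {"name": "QA sandbox", "description": "Scratch space", "type": workspace_type},
        )))
```

Checking the three enum values by hand means sending three nearly identical tools/call messages, which is exactly the kind of repetition worth automating.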

Why this approach falls short

Manual testing is workable for a few tools and a few inputs, but quickly becomes impractical when:

- You have dozens of tools and many possible input combinations.

- Your schema includes optional fields, enums, or nested objects that can interact in unexpected ways.

- You must repeatedly validate behavior after every change or CI deployment.

And then there’s the nastier problem we encountered: schema drift.

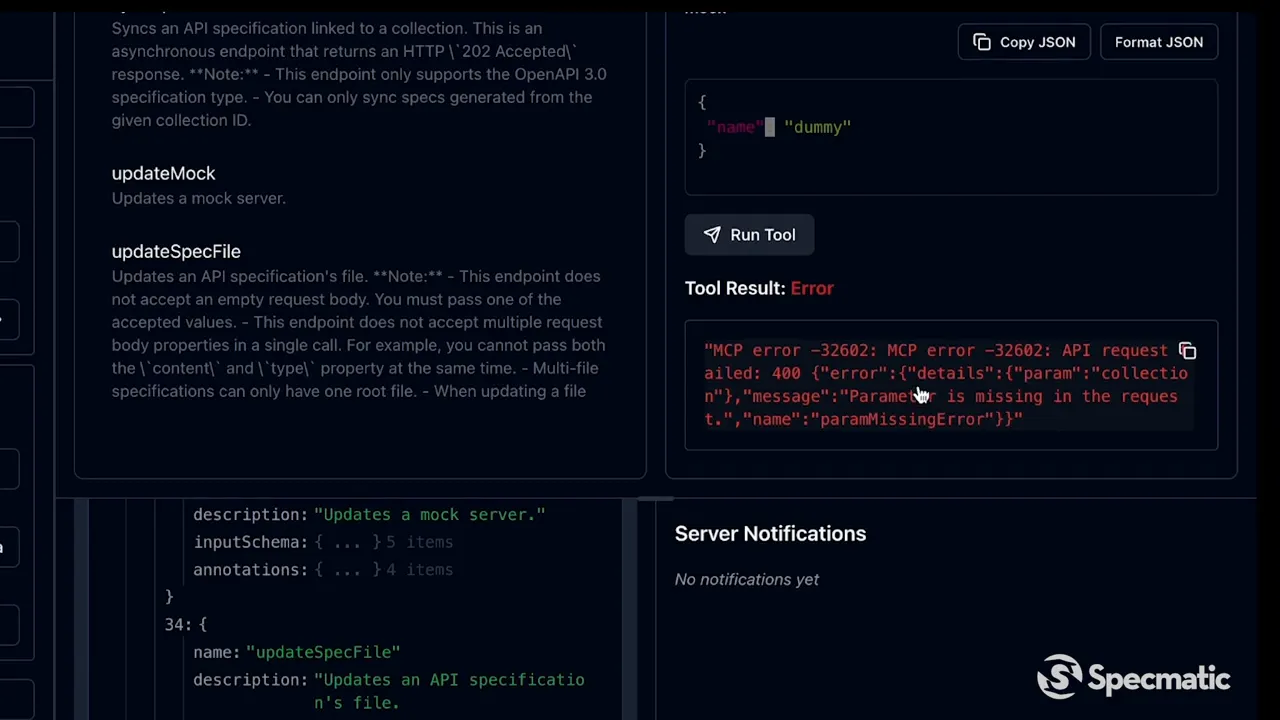

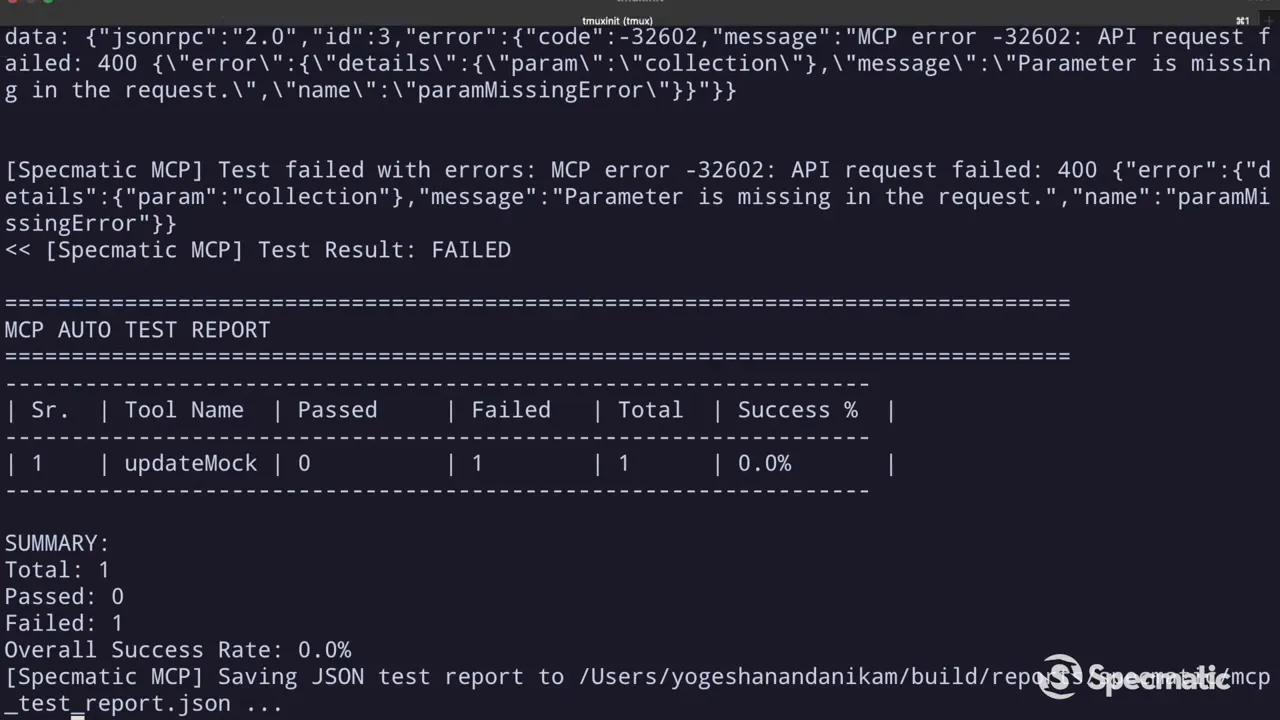

We tried invoking update mock from the inspector and, following the input schema it displayed, supplied only a name field. The server returned an error complaining that a collection parameter was missing, yet the schema shown in the inspector gave no indication of this requirement. This exact mismatch, where the implementation expects something the schema doesn’t declare, shows how manual testing can miss hidden constraints and how dangerous schema drift can be for production stability.
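The update mock failure boils down to a simple check: build an input that fully satisfies the declared schema, call the tool, and treat a rejection as evidence of drift. The Python sketch below illustrates that idea with a hypothetical schema and a stub function standing in for the real server (a real check would send a tools/call request instead); it is our illustration, not how Specmatic is implemented.

```python
from typing import Callable, Optional

# Hypothetical declared schema: "name" is the only property and nothing is required.
UPDATE_MOCK_SCHEMA = {
    "type": "object",
    "properties": {"name": {"type": "string"}},
    "required": [],
}


def stub_update_mock(arguments: dict) -> dict:
    """Stand-in for the server: the implementation silently needs 'collection'."""
    if "collection" not in arguments:
        # MCP reports tool-level failures as a result with isError set to true.
        return {"isError": True,
                "content": [{"type": "text", "text": "Missing parameter: collection"}]}
    return {"isError": False, "content": [{"type": "text", "text": "Mock updated"}]}


def declared_input(schema: dict) -> dict:
    """Fill every declared property with a placeholder of its basic JSON type."""
    placeholders = {"string": "sample", "integer": 1, "number": 1.0, "boolean": True}
    return {key: placeholders.get(prop.get("type"), "sample")
            for key, prop in schema.get("properties", {}).items()}


def detect_drift(schema: dict, call: Callable[[dict], dict]) -> Optional[str]:
    """Return the error text if an input that satisfies the schema is rejected."""
    result = call(declared_input(schema))
    if result.get("isError"):
        return " ".join(part.get("text", "") for part in result.get("content", []))
    return None


print(detect_drift(UPDATE_MOCK_SCHEMA, stub_update_mock))  # prints: Missing parameter: collection
```

Once collection is declared in the schema and marked as required, the generated input includes it and the same check returns None.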

Design goals for a better approach to Testing MCP servers

After the manual walkthrough, we established clear goals for a testing solution:

- Automatically generate test calls for every tool using the tool’s declared input-output schema.

- Explore multiple input combinations (including enums, optional fields, and nested structures) to catch edge cases.

- Use realistic test data so server validations behave like production inputs.

- Produce repeatable, CI-friendly test suites to prevent regressions across deployments.

Introducing Specmatic MCP Auto-Test

To address these requirements, we built Specmatic MCP Auto-Test: a subcommand of the Specmatic CLI that automates testing of MCP servers. It fetches the tools and their schemas from an MCP server, generates test inputs, invokes each tool, validates responses against the declared output schemas, and reports pass/fail status.

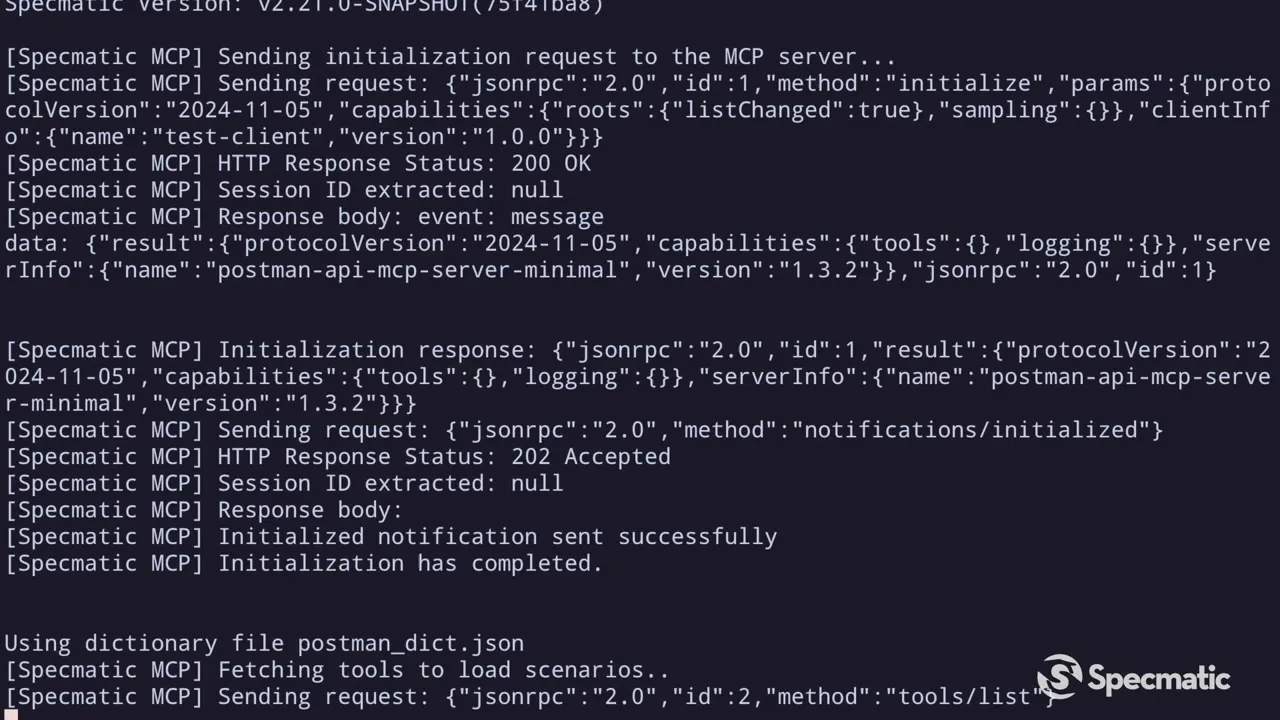

Here’s what happens under the hood when you run a test:

- Specmatic initializes a connection to the remote MCP server (using your transport and authentication configuration).

- It calls the server’s tools/list method to fetch all available tools and their input and output schemas.

- Using those schemas, it automatically creates multiple test cases per tool: default tests and, optionally, resiliency tests that explore many input combinations.

- Each generated test is executed against the server; responses are checked for success/failure and validated against the output schema.

- Specmatic prints a console report and saves a test report alongside it, detailing which tests passed, which failed, and why.

This pipeline provides a repeatable, scriptable way to validate MCP servers at scale.
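To make these steps concrete, here is a minimal Python sketch of the same loop. It is our illustration, not Specmatic’s code: the McpClient interface with list_tools() and call_tool() is hypothetical, input generation is deliberately naive, and output validation only checks that the required top-level fields of a tool’s outputSchema appear in the result’s structuredContent (the field MCP uses for structured tool output) rather than performing full JSON Schema validation.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


class McpClient(Protocol):
    """Hypothetical client; a real one would send tools/list and tools/call over MCP."""

    def list_tools(self) -> list: ...

    def call_tool(self, name: str, arguments: dict) -> dict: ...


@dataclass
class ToolTestResult:
    tool: str
    arguments: dict
    passed: bool
    reason: str = ""


def generate_input(schema: dict) -> dict:
    """Naive generator: first enum value if present, else a placeholder per type."""
    defaults = {"string": "sample", "integer": 1, "number": 1.0, "boolean": True}
    return {key: prop["enum"][0] if "enum" in prop else defaults.get(prop.get("type"), "sample")
            for key, prop in schema.get("properties", {}).items()}


def check_output(result: dict, output_schema: Optional[dict]) -> str:
    """Return an empty string if the result looks valid, otherwise a failure reason."""
    if result.get("isError"):
        return "tool reported an error"
    if output_schema:
        structured = result.get("structuredContent") or {}
        missing = [k for k in output_schema.get("required", []) if k not in structured]
        if missing:
            return f"missing output fields: {missing}"
    return ""


def run_suite(client: McpClient) -> list:
    results = []
    for tool in client.list_tools():                              # discover tools and schemas
        args = generate_input(tool.get("inputSchema", {}))         # generate a test input
        outcome = client.call_tool(tool["name"], args)             # invoke the tool
        reason = check_output(outcome, tool.get("outputSchema"))   # validate the response
        results.append(ToolTestResult(tool["name"], args, not reason, reason))
    return results                                                 # raw material for the report
```

Keeping discovery, generation, invocation, and validation as separate functions means each can be swapped out independently, for example replacing generate_input with a dictionary-backed generator.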

What the test run looks like

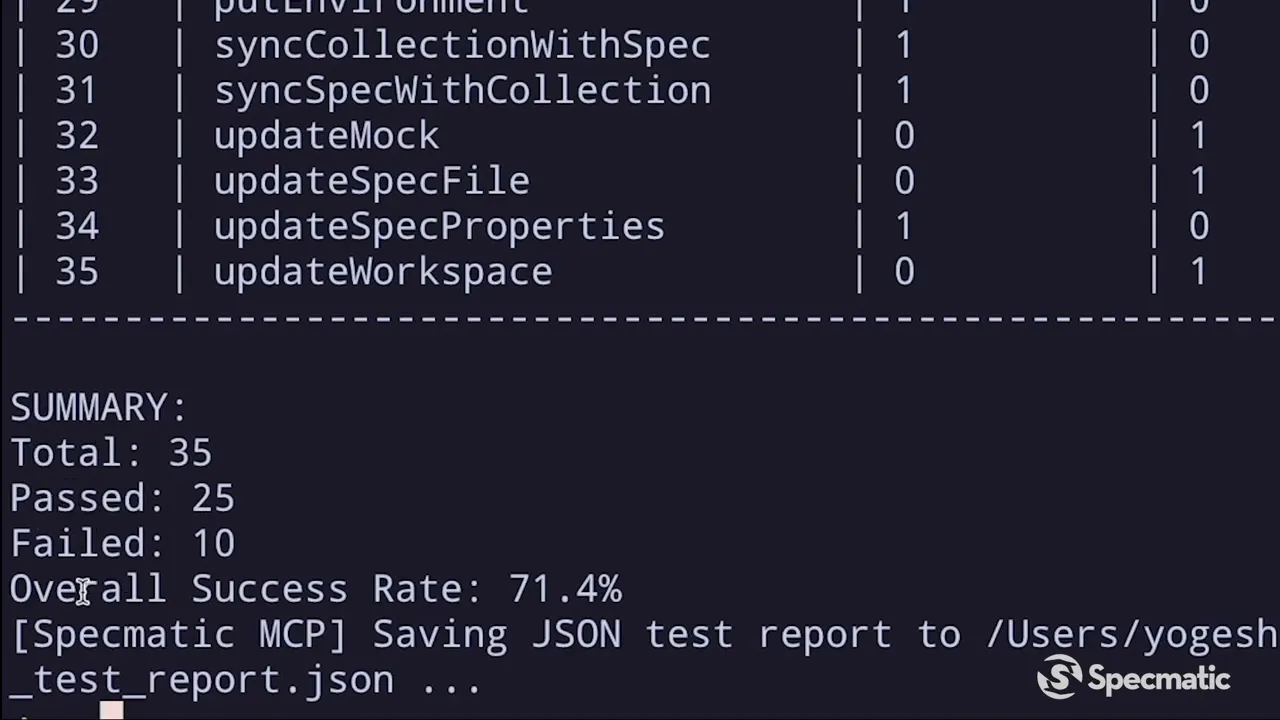

When we ran Specmatic MCP Auto-Test against Postman’s MCP server, using a realistic dictionary to populate names, descriptions, and other string fields, Specmatic generated and executed tests for more than 35 tools in a single run. The console report summarized pass/fail status tool by tool, along with an overall success percentage. Drilling into an individual test showed the exact request arguments Specmatic had generated and the output the server returned.
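The summary itself is simple aggregation over individual test outcomes. A minimal Python sketch, assuming each outcome is a (tool name, passed) pair; the report layout is our own and does not reproduce Specmatic’s console output.

```python
from collections import defaultdict


def summarize(outcomes: list[tuple[str, bool]]) -> tuple[dict[str, tuple[int, int]], float]:
    """Return per-tool (passed, total) counts and the overall success percentage."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for tool, passed in outcomes:
        counts[tool][0] += int(passed)
        counts[tool][1] += 1
    # Guard against an empty run so we never divide by zero.
    overall = 100.0 * sum(passed for _, passed in outcomes) / len(outcomes) if outcomes else 0.0
    return {tool: (p, t) for tool, (p, t) in counts.items()}, overall


def print_report(outcomes: list[tuple[str, bool]]) -> None:
    per_tool, overall = summarize(outcomes)
    for tool, (passed, total) in sorted(per_tool.items()):
        status = "PASS" if passed == total else "FAIL"
        print(f"{status}  {tool}: {passed}/{total} tests passed")
    print(f"Overall success: {overall:.1f}%")


# Hypothetical outcomes for two tools.
print_report([("createWorkspace", True), ("createWorkspace", True), ("updateMock", False)])
```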

Resiliency testing: automated exploration of input space

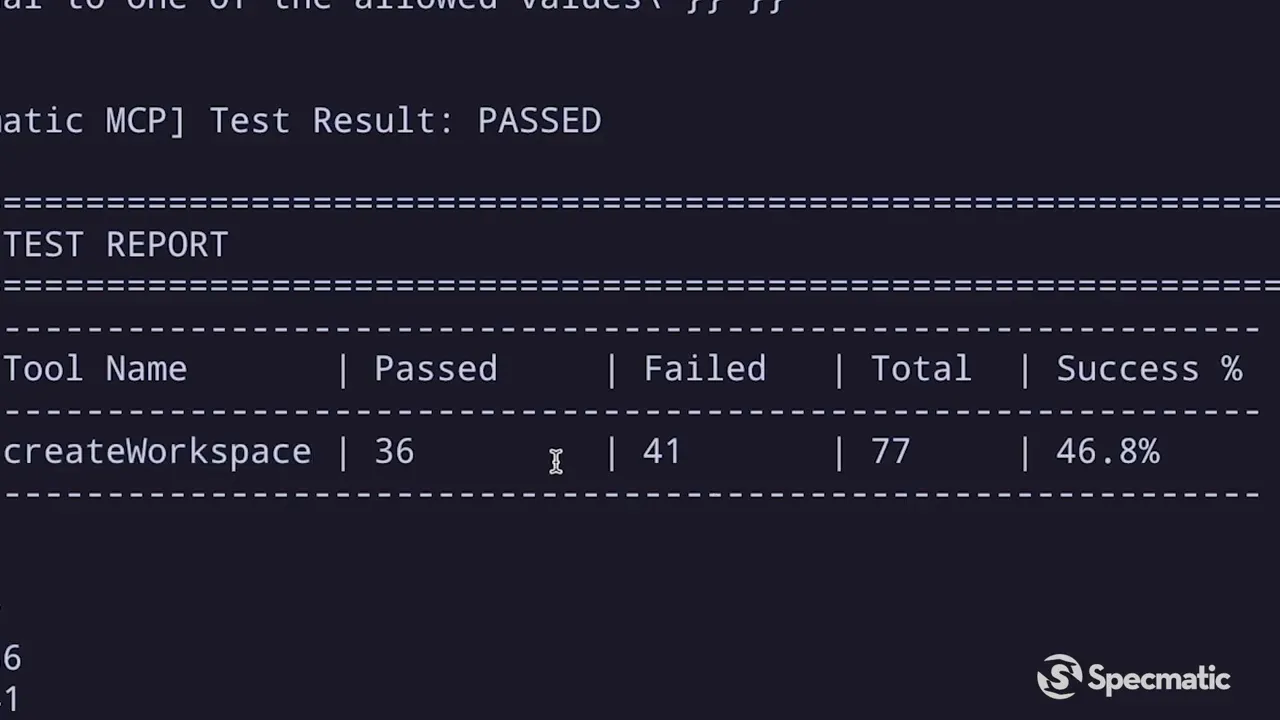

One particularly powerful feature is resiliency testing. When you enable the resiliency testing flag, Specmatic generates comprehensive input combinations for each tool: sampling enum values, toggling optional fields, and mutating nested structures. This replicates what you might painstakingly do by hand in the inspector, but at scale and without the tedium.

For example, instead of our manually testing the three workspace types (personal, public, team) for create workspace, Specmatic built and ran dozens of permutations automatically, and each test verified that the server responded correctly to that specific combination. This is central to testing MCP servers reliably: you want to ensure that not only the happy path but also the permutations and edge cases behave as expected.
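One systematic way to produce such permutations is the Cartesian product of every enum value with an include-or-omit choice for each optional field. The Python sketch below does that for a flat object schema; it illustrates the idea and is not Specmatic’s generation algorithm (which also mutates nested structures). The schema is a hypothetical approximation of create workspace.

```python
from itertools import product

_OMIT = object()  # sentinel meaning "leave this optional field out"

# Hypothetical schema approximating create workspace.
WORKSPACE_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "description": {"type": "string"},
        "type": {"type": "string", "enum": ["personal", "public", "team"]},
    },
    "required": ["name"],
}


def input_combinations(schema: dict, sample: dict) -> list[dict]:
    """Every enum value crossed with include/omit for each optional field.

    Non-enum fields take their value from `sample` (e.g. a test-data dictionary).
    """
    required = set(schema.get("required", []))
    per_field = []
    for key, prop in schema.get("properties", {}).items():
        values = list(prop["enum"]) if "enum" in prop else [sample.get(key, "sample")]
        if key not in required:
            values.append(_OMIT)
        per_field.append([(key, value) for value in values])
    return [{key: value for key, value in combo if value is not _OMIT}
            for combo in product(*per_field)]


cases = input_combinations(WORKSPACE_SCHEMA, {"name": "QA sandbox", "description": "Scratch space"})
print(len(cases))  # 1 (name) x 2 (description or omitted) x 4 (three types or omitted) = 8
```

Using a sentinel rather than None for "omitted" keeps the sketch correct even when null is a legitimate value for some field.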

Detecting schema mismatches—the shift-left advantage

Remember the update mock example, where the schema did not declare the collection parameter that the implementation actually required? Specmatic ran an automated test for that tool and reproduced the exact error: a missing parameter in the request. Because the test suite runs locally and in CI, this issue would be caught before the code reaches production. That’s the “shift-left” advantage: developers find schema drift early, fix the implementation or update the schema, and spare consumers surprising runtime failures.

Key capabilities of Specmatic MCP Auto-Test

- Automatic test generation from tool schemas: baseline coverage without writing tests by hand.

- Realistic test data via customizable dictionaries to exercise meaningful validation logic.

- Resiliency testing to sweep the input space for enums, optional fields, and nested structures.

- Schema drift detection where mismatches between implementation and declared schemas surface as failing tests.

- Repeatable test suites that integrate with CI to prevent regression and ensure continuous quality.

How to get started

At a high level, the workflow to adopt automated Testing MCP servers with Specmatic MCP Auto-Test looks like this:

- Install the Specmatic CLI and ensure you have a configured transport to your MCP server (including auth).

- Run a discovery test to fetch tools and generate a baseline test suite.

- Supply a dictionary file with realistic test data so the generated inputs exercise meaningful validation logic.

- Enable resiliency testing selectively on tools that need deeper exploration.

- Integrate the command into your CI pipeline so tests run on pull requests and before merges.

Because the tool saves a report file in addition to its console output, you can store it as a build artifact and keep test results searchable for auditing.

Best practices when Testing MCP servers

- Maintain accurate schemas: if a tool needs a required parameter, declare it as required in the schema so generated tests are honest reflections of runtime behavior.

- Keep dictionary data representative of real inputs: realistic test values reduce false negatives and increase meaningful coverage.

- Use resiliency testing judiciously: run broad explorations on complex tools and targeted tests on others to balance runtime cost and coverage.

- Run auto-tests early in CI: catching drift before deployment removes most production surprises.
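One way to apply resiliency testing judiciously is to estimate each tool’s sweep size before running it. For an exhaustive sweep of a flat object schema, each field multiplies the total by its number of enum values (or 1 for a free-form field), plus one more if the field is optional and can be omitted. The Python sketch below computes that estimate and applies a budget; the budget of 50 and the two-mode plan are hypothetical policy choices, not Specmatic settings.

```python
from math import prod


def sweep_size(schema: dict) -> int:
    """Number of inputs in an exhaustive sweep of a flat object schema.

    Each field contributes (enum size, or 1 if free-form) plus 1 if it is optional.
    """
    required = set(schema.get("required", []))
    return prod(
        len(prop.get("enum", [None])) + (0 if key in required else 1)
        for key, prop in schema.get("properties", {}).items()
    )


def plan_sweeps(tools: list[dict], budget: int = 50) -> dict[str, str]:
    """Hypothetical policy: run a full resiliency sweep only when it fits the budget."""
    return {
        tool["name"]: "resiliency" if sweep_size(tool.get("inputSchema", {})) <= budget else "default"
        for tool in tools
    }
```

A tool with six optional enum fields of four values each already needs 5^6 = 15,625 calls, so under this budget it would fall back to default tests.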

FAQ

Q: Does Specmatic MCP Auto-Test change any production data?

A: Not on its own: tests execute against the target server’s tools, so the outcome is limited to whatever the target environment allows. If the tools you test mutate data, run the suite against staging or test instances rather than production, and use mocks or safe endpoints when you want read-only verification.

Q: How does Specmatic know which inputs to send for each tool?

A: Specmatic consumes the input schema returned by the tools list call. From that schema it synthesizes sensible inputs, draws values from a customizable dictionary for realism, and explores permutations when resiliency testing is enabled.
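A minimal sketch of schema-plus-dictionary synthesis in Python, assuming the dictionary is a flat mapping from field name to candidate values. Specmatic’s actual dictionary file format and lookup rules are not described here, so treat this structure as hypothetical.

```python
import random


def synthesize(schema: dict, dictionary: dict, rng: random.Random) -> dict:
    """Build one input for an object schema, preferring realistic dictionary values."""
    fallback = {"string": "sample", "integer": 1, "number": 1.0, "boolean": False}
    arguments = {}
    for key, prop in schema.get("properties", {}).items():
        if "enum" in prop:
            arguments[key] = rng.choice(prop["enum"])           # stay inside the enum
        elif key in dictionary:
            arguments[key] = rng.choice(dictionary[key])        # realistic value by field name
        elif prop.get("type") == "object":
            arguments[key] = synthesize(prop, dictionary, rng)  # recurse into nested objects
        else:
            arguments[key] = fallback.get(prop.get("type"), "sample")
    return arguments


# Hypothetical dictionary: realistic values keyed by field name.
DICTIONARY = {"name": ["Payments API", "Checkout Service"], "description": ["Team sandbox for QA"]}
schema = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "description": {"type": "string"},
        "type": {"type": "string", "enum": ["personal", "public", "team"]},
        "settings": {"type": "object", "properties": {"retries": {"type": "integer"}}},
    },
}
print(synthesize(schema, DICTIONARY, random.Random(7)))
```

Passing a seeded random.Random instance keeps generated inputs reproducible across CI runs, which makes a failing test easier to replay.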

Q: Can it be part of CI pipelines?

A: Absolutely. Specmatic MCP Auto-Test is a CLI tool designed to be scriptable and idempotent. You can add it to a CI job that runs on pull requests and fail the build if new changes break the test suite.

Q: What about false positives from flaky servers?

A: If you see intermittent failures, first verify network stability and server health. You can add retries or isolate flaky tools from the resiliency sweeps. The saved reports help debug and correlate transient issues.
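If you drive tool calls from your own scripts, a common pattern is to retry only transport-level errors, so transient network problems don’t fail the build while genuine test failures still do. A minimal Python sketch; treating ConnectionError and TimeoutError as “transient”, and the backoff values, are assumptions to adapt to your client, not Specmatic options.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")

# Assumption: these exception types signal transient transport problems.
TRANSIENT: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError)


def with_retries(call: Callable[[], T], attempts: int = 3, base_delay_s: float = 0.5) -> T:
    """Run `call`, retrying transient errors with exponential backoff.

    Any other exception (for example a failed assertion) propagates immediately.
    """
    for attempt in range(1, attempts + 1):
        try:
            return call()
        except TRANSIENT:
            if attempt == attempts:
                raise  # out of attempts: surface the last transient error
            time.sleep(base_delay_s * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")
```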

Conclusion and invitation

Testing MCP servers at scale requires a different mindset than occasional manual checks. Automating test generation, exercising realistic input combinations, and running suites in CI gives teams confidence to change implementations without fear of regression. Specmatic MCP Auto-Test is designed to provide exactly that: automated discovery, resiliency exploration, schema validation, and repeatable reports that prevent surprises in production.

We built this tool as open source and invite the community to try it, report edge cases, and contribute improvements. If you’re responsible for Testing MCP servers or managing an MCP-based integration surface, give automated testing a shot—you’ll save time, reduce regressions, and catch schema drift far earlier in the cycle.

Ready to make Testing MCP servers easier? Try Specmatic MCP Auto-Test, add it to your CI, and let the tests do the tedious work for you.