Exposed: MCP Servers Are Lying About Their Schemas

By Yogesh Nikam

Table of Contents

- Practical Lessons from MCP Server Testing

- Key Takeaways

- Hugging Face MCP: When “optional” isn’t optional

- Non-standard constraints: numbers and the danger of relying on descriptions

- Postman MCP: When mutually exclusive fields are not declared

- Postman MCP: numeric limit without bounds

- Practical recommendations

- How MCP server testing helps teams

- Conclusion

Practical Lessons from MCP Server Testing

Over the last few weeks the Specmatic team ran a focused series of MCP server testing experiments against several remote MCP servers — notably Postman’s, Hugging Face’s, and GitHub’s MCP servers. The goal was straightforward: use Specmatic’s MCP Auto-Test to find places where the declared schemas differ from how the servers actually behave, and surface real, actionable problems that break integrations or cause consumer-facing failures.

In this write-up I’ll walk you through what we discovered, why these mismatches matter, and how to fix them. If you care about building reliable APIs, especially in the era of LLMs and automated agents, the insights below are directly applicable to your work.

Why we ran these tests (and why you should care)

MCP server testing helped us confirm something many teams suspect but rarely catch early: schema-implementation drift is real, common, and costly. When schemas omit strict constraints or accidentally mark fields optional, automated consumers — including testing tools, CI pipelines, and LLM-based agents — will make assumptions. Those assumptions often lead to bad requests, confusing errors, and unpredictable behavior.

Specmatic’s MCP Auto-Test automatically generates inputs from an MCP server’s declared schema and sends them to the server. That allowed us to detect mismatches without hand-crafting hundreds of test cases. The results were eye-opening.

Key Takeaways

- Schema-Implementation Drift is Real: Servers sometimes treat fields as required while schemas mark them optional (or vice versa).

- Use Standard JSON Schema Constraints: Descriptions are not a substitute for explicit minimum/maximum or oneOf/anyOf operators.

- Vague Specs Lead to Hallucinations: Leaving rules to natural language descriptions invites automated tools or LLMs to produce invalid values.

- Automated MCP Server Testing Catches Issues Early: Run MCP Auto-Test during development to surface these problems before clients rely on the API.

Hugging Face MCP: When “optional” isn’t optional

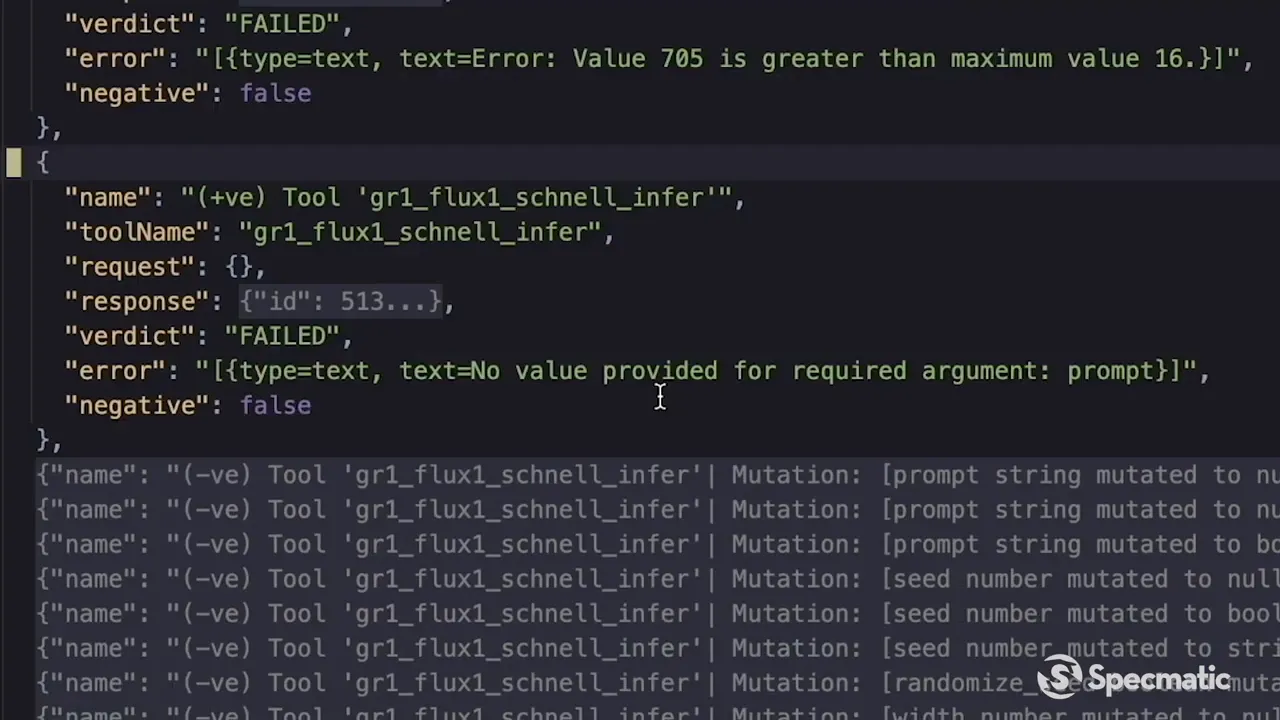

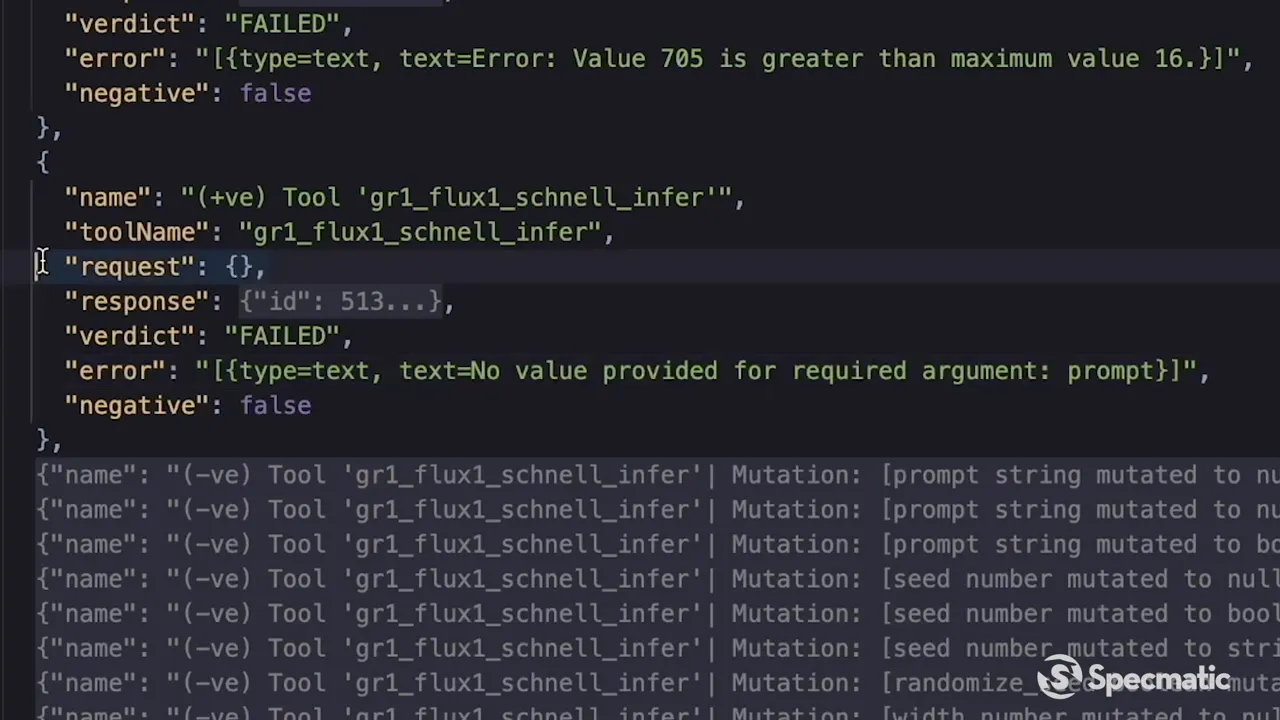

One of the clearer failures we found was with a tool where the server returned an error: “no value provided for the required argument prompt.” Looking at the request sent by Specmatic, we saw an empty request body — none of the arguments were provided.

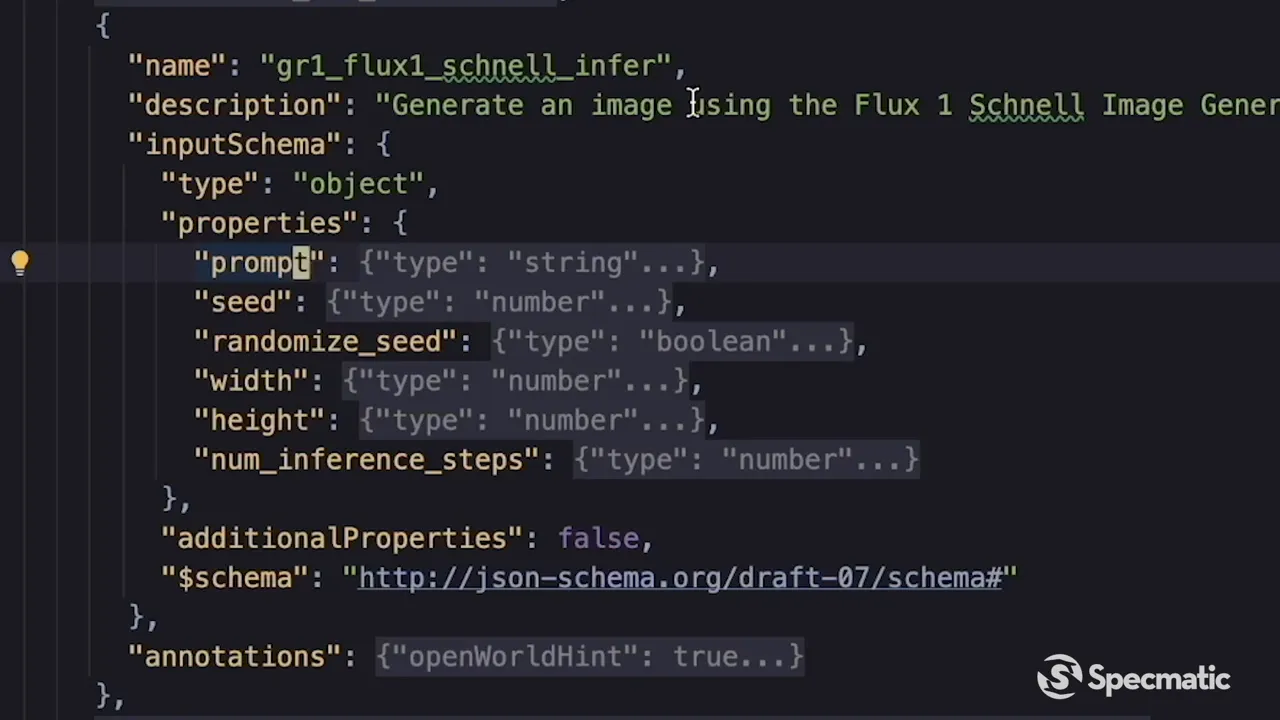

At first glance, that suggests Specmatic failed to pass the prompt. But the root cause was different. The tool schema declared all properties (including prompt) as optional — there was no “required” array in the JSON schema. That implies any property could be left out, so Specmatic generated a request without any fields. The implementation, however, treated prompt as mandatory. The result: schema says optional, server expects required.

This kind of schema-implementation drift is precisely what MCP server testing is designed to reveal. If a server treats a field as required, the schema must reflect that by including it in the “required” array. Otherwise consumers will either produce invalid requests or need custom, brittle workarounds.
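As a minimal sketch of the fix, the two schemas below differ only in the "required" array. The schema contents and the helper `missing_required` are illustrative assumptions, not copied from the Hugging Face server; only the field names `prompt` and `num_inference_steps` come from the findings described here.

```python
# Hypothetical input schema shaped like the one described above:
# every property is declared, but there is no "required" array.
drifted_schema = {
    "type": "object",
    "properties": {
        "prompt": {"type": "string"},
        "num_inference_steps": {"type": "number"},
    },
}

# Same schema with the implementation's real expectation made explicit.
fixed_schema = {**drifted_schema, "required": ["prompt"]}


def missing_required(schema: dict, arguments: dict) -> list[str]:
    """Return the names listed in "required" that are absent from arguments."""
    return [name for name in schema.get("required", []) if name not in arguments]


# An empty argument object passes under the drifted schema...
print(missing_required(drifted_schema, {}))  # []
# ...and is flagged once "required" is declared.
print(missing_required(fixed_schema, {}))    # ['prompt']
```

With the "required" array in place, a schema-driven client would not generate the empty request in the first place.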

Why this matters

Clients — human or automated — rely on the schema to know which fields to send. If the schema is wrong, integrations fail. For LLM-based agents that build requests from schema clues, incorrect optionality can produce empty or malformed requests that crash or behave unpredictably. Always keep the schema authoritative.

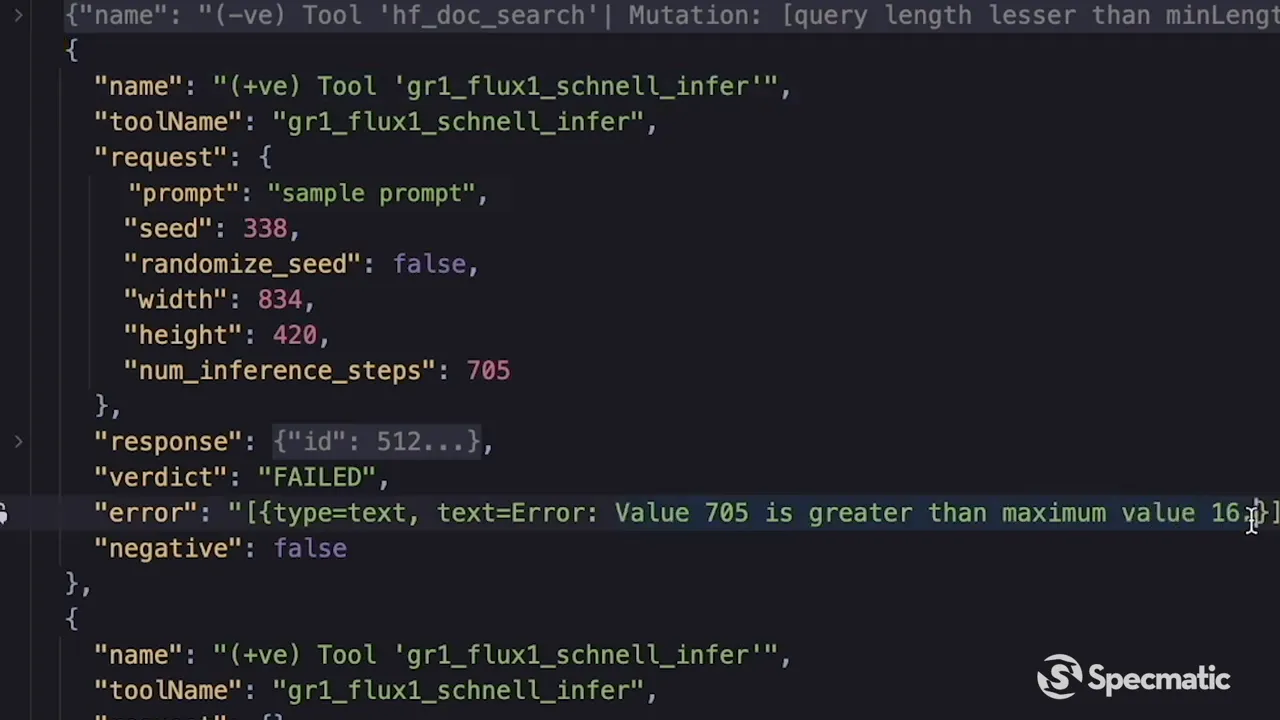

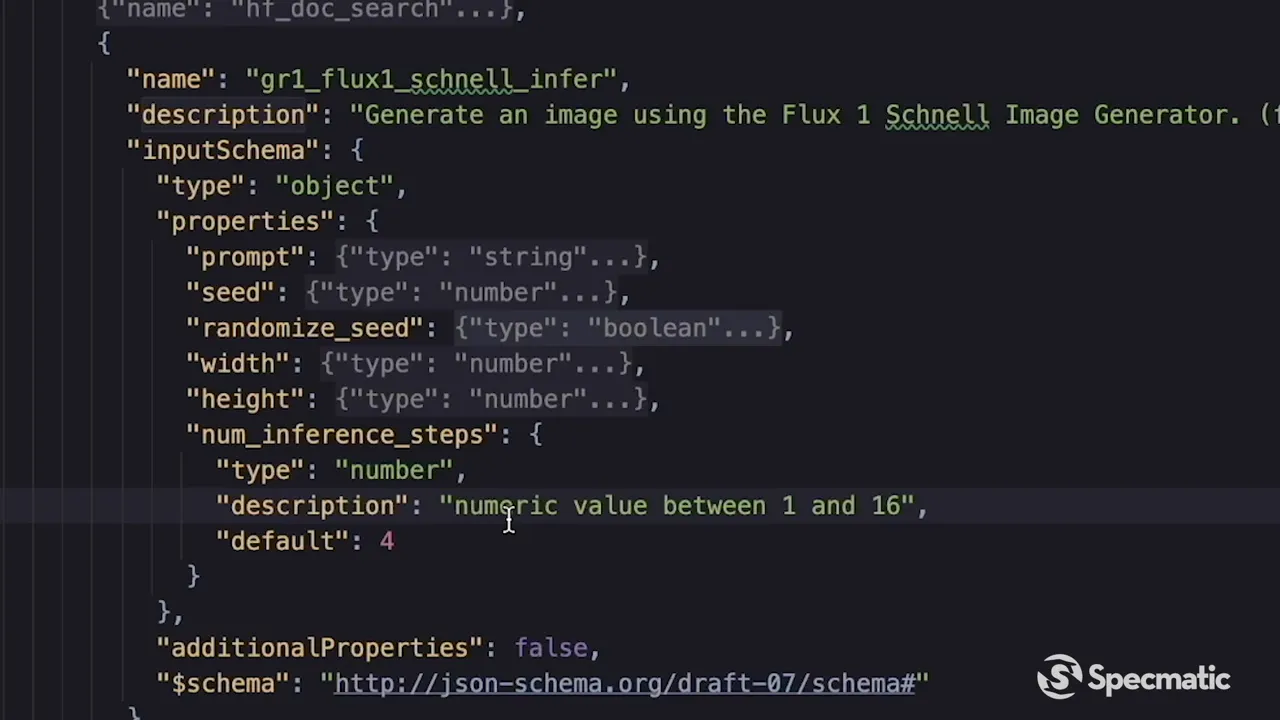

Non-standard constraints: numbers and the danger of relying on descriptions

Another issue we ran into with the same tool was numeric bounds. The server rejected a request with num_inference_steps of 705, saying “value 705 is greater than the maximum value 16.” But when we inspected the declared schema, the field was typed as a number with a description: “numeric value between 1 and 16.”

Here’s the problem: the schema used a human-readable description to state constraints, instead of using the standard JSON Schema keywords minimum and maximum. Specmatic’s auto-test — and any other strict JSON Schema consumer — has no way to derive numeric bounds from a free-text description, so it generated values outside the intended range.

Fix: use standard schema keywords

Define constraints using JSON Schema keywords such as minimum, maximum, exclusiveMinimum, and exclusiveMaximum for numbers, pattern for strings, enum for finite sets, and oneOf for mutually exclusive options. Relying on descriptions is convenient for humans, but descriptions are invisible to automated consumers and testing tools.
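To make the difference concrete, here is a small sketch that checks a value against the minimum and maximum keywords only, which is all a strict consumer can do. Both property definitions and the helper `bound_violations` are hypothetical; the replacement description text is also an assumption.

```python
# Hypothetical property definitions for num_inference_steps.
described_only = {
    "type": "number",
    "description": "numeric value between 1 and 16",
}
with_keywords = {
    "type": "number",
    "minimum": 1,
    "maximum": 16,
    "description": "Number of inference steps.",
}


def bound_violations(prop: dict, value: float) -> list[str]:
    """Check value against the minimum/maximum keywords; descriptions are ignored."""
    problems = []
    if "minimum" in prop and value < prop["minimum"]:
        problems.append(f"{value} is less than the minimum {prop['minimum']}")
    if "maximum" in prop and value > prop["maximum"]:
        problems.append(f"{value} is greater than the maximum {prop['maximum']}")
    return problems


# The description-only schema gives a machine nothing to object to.
print(bound_violations(described_only, 705))  # []
# With keywords, the same value is caught before it reaches the server.
print(bound_violations(with_keywords, 705))   # ['705 is greater than the maximum 16']
```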

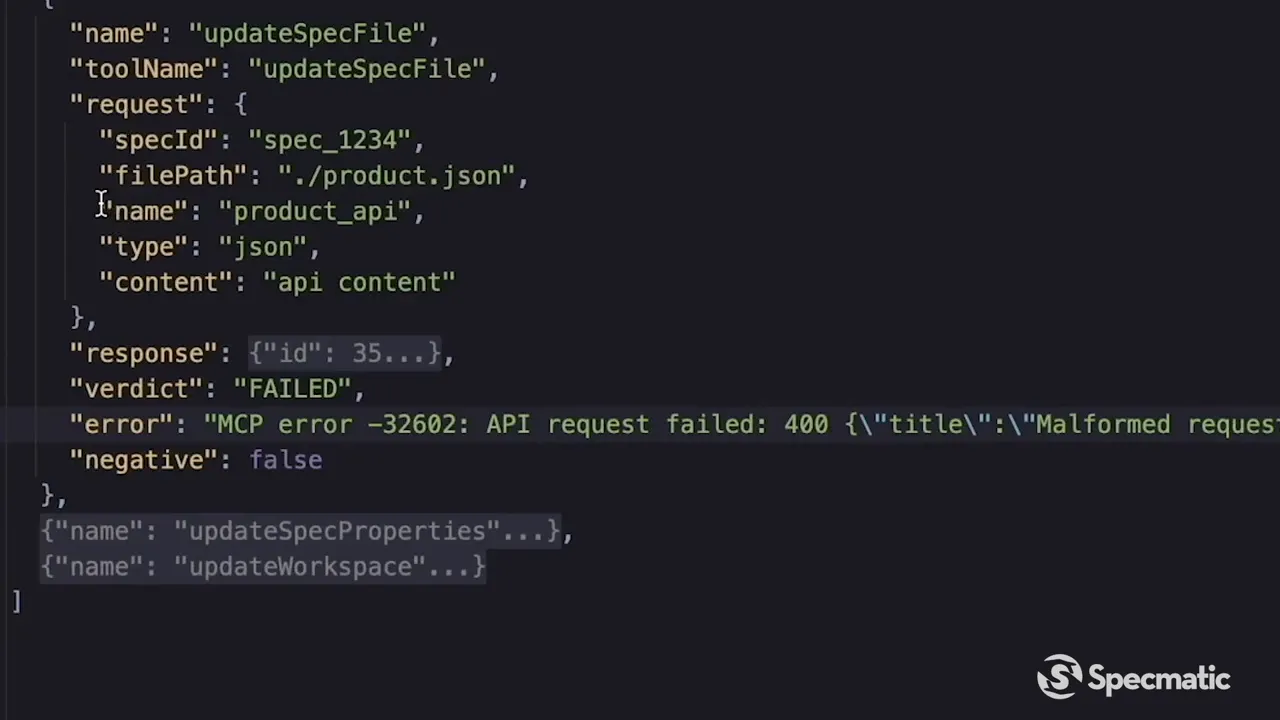

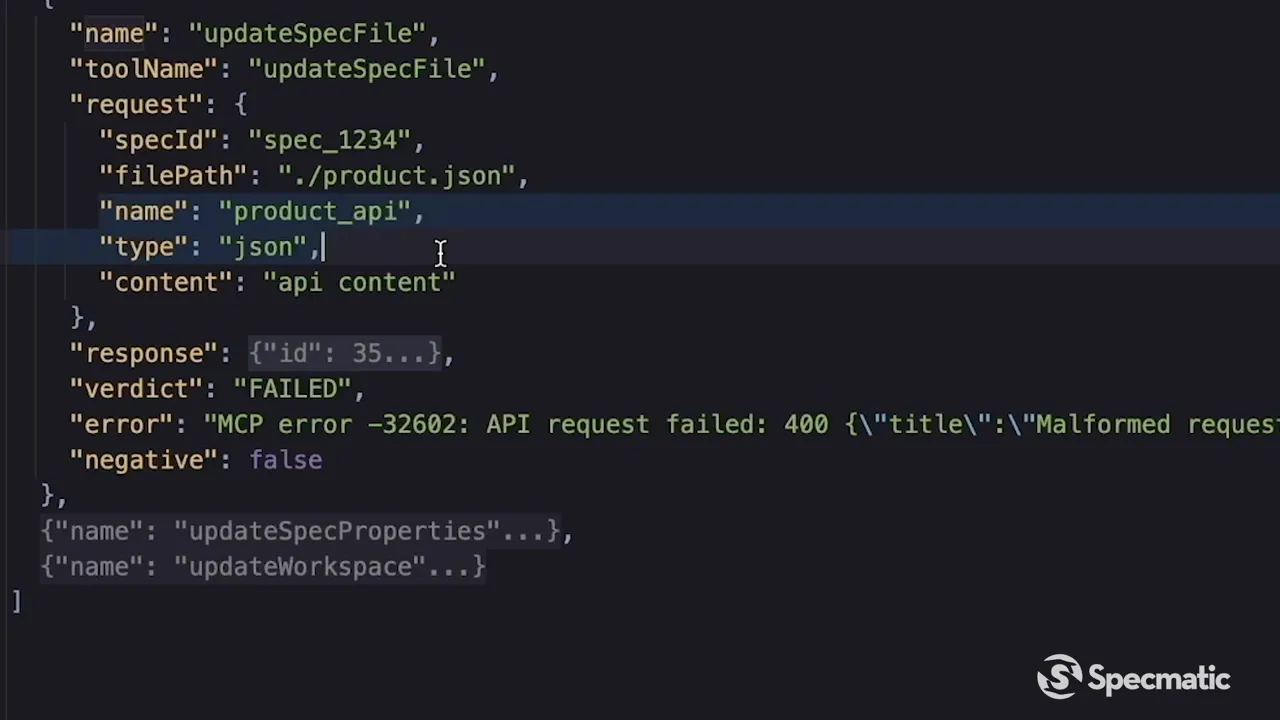

Postman MCP: When mutually exclusive fields are not declared

With Postman’s MCP server we hit subtly different issues that point to the same root cause: missing or underspecified schema operators. One tool, updateSpecFile, rejected requests with the error “only one of content_type or name should be provided.” The requests sent by Specmatic included three keys (content, type, and name), but the server code enforced that only one of those keys be present at a time.

The schema defined these properties and even marked some as required, but it did not express the mutual exclusivity between name, content, and type. In JSON Schema terms, the schema should use oneOf to declare that exactly one of the group may appear; anyOf is not enough, because it also accepts payloads that carry two or all three keys. Without that declaration, Specmatic assumed any combination could be present and generated requests that the server then rejected.

How to model mutually exclusive fields

When designing APIs, call out exclusive options explicitly. Use oneOf to list acceptable schemas where exactly one of them must match, or use dependentRequired/dependentSchemas (dependencies in older drafts) and if/then/else subschemas to model more complex rules. This clarity prevents both human developers and automated tools from making invalid requests.
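One way to express "exactly one of content, type, or name" is a oneOf whose branches each require a single key. The fragment below is a hypothetical sketch, not Postman's actual schema (which has other fields as well), and `matches_one_of` is a simplified evaluator that only understands branches made of "required" lists.

```python
# Hypothetical schema fragment: exactly one of the three keys may be present.
exclusive_schema = {
    "type": "object",
    "properties": {
        "content": {"type": "string"},
        "type": {"type": "string"},
        "name": {"type": "string"},
    },
    "oneOf": [
        {"required": ["content"]},
        {"required": ["type"]},
        {"required": ["name"]},
    ],
}


def matches_one_of(schema: dict, arguments: dict) -> bool:
    """Evaluate a oneOf whose branches contain only "required" lists."""
    matched = sum(
        all(key in arguments for key in branch["required"])
        for branch in schema["oneOf"]
    )
    # oneOf is satisfied only when exactly one branch matches.
    return matched == 1


print(matches_one_of(exclusive_schema, {"name": "spec.yaml"}))                # True
print(matches_one_of(exclusive_schema, {"name": "spec.yaml", "content": ""})) # False
print(matches_one_of(exclusive_schema, {}))                                   # False
```

A payload with two of the keys satisfies two branches and is therefore rejected, which mirrors the behavior the server enforced in code.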

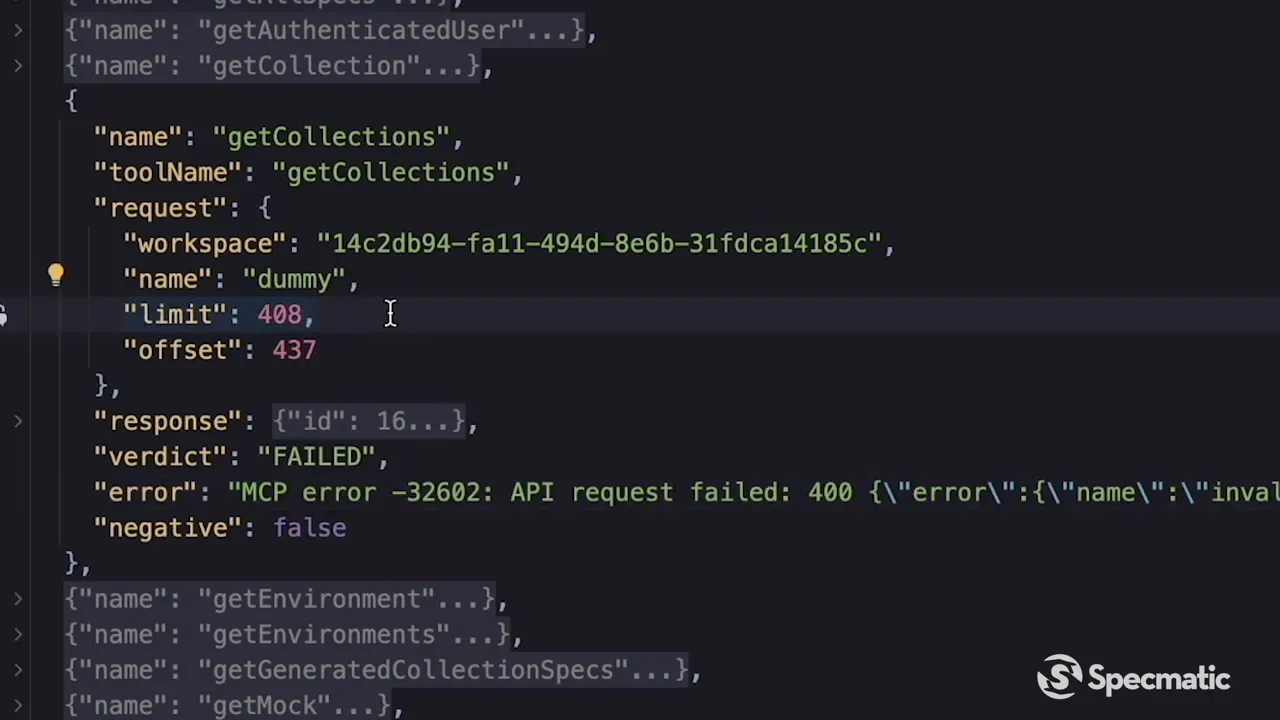

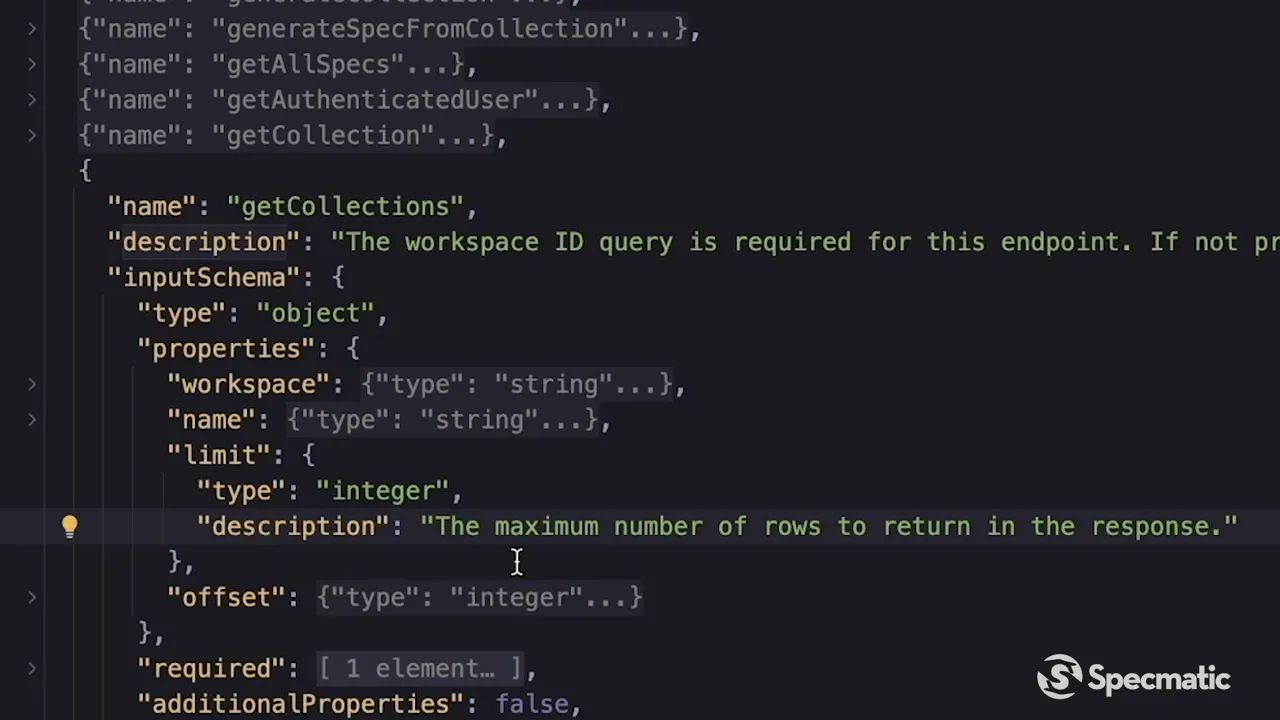

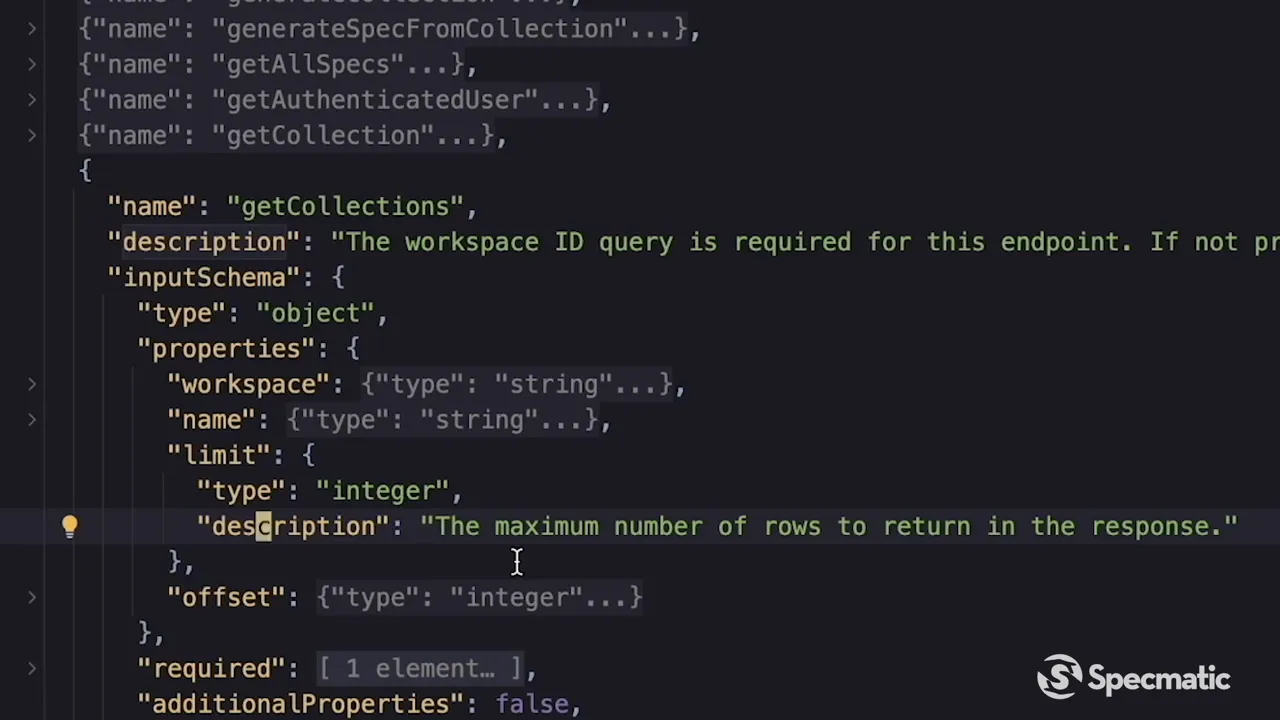

Postman MCP: numeric limit without bounds

We also saw a getCollections endpoint where the server rejected limit values outside its allowed range. Specmatic had generated a request with limit=408 and received the response: “Value must be a positive integer smaller than 100.” The schema only declared that limit is an integer and included a human-oriented description, but it omitted the upper bound (maximum: 100, or exclusiveMaximum: 100 if the error message is taken literally).

Again, the lack of explicit constraints in the schema caused an automated consumer to generate invalid inputs. The fix is consistent: codify the expectations using JSON Schema constraints, not descriptions.
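The sketch below illustrates why a schema-driven generator ends up with values like 408. It is not Specmatic's algorithm, only a simplified stand-in: `generate_integer`, the fallback range, and both property definitions (including the description text) are assumptions made for illustration.

```python
import random

# Hypothetical property definitions for the limit parameter.
limit_unbounded = {"type": "integer", "description": "Maximum number of results to return."}
limit_bounded = {"type": "integer", "minimum": 1, "maximum": 100}

# Arbitrary fallback range used when the schema declares no bounds (an assumption).
DEFAULT_LOW, DEFAULT_HIGH = -1000, 1000


def generate_integer(prop: dict, rng: random.Random) -> int:
    """Pick an integer using only the bounds the schema declares."""
    low = prop.get("minimum", DEFAULT_LOW)
    high = prop.get("maximum", DEFAULT_HIGH)
    return rng.randint(low, high)


rng = random.Random(0)  # seeded so repeated runs draw the same values
unbounded_samples = [generate_integer(limit_unbounded, rng) for _ in range(200)]
bounded_samples = [generate_integer(limit_bounded, rng) for _ in range(200)]

# Without keywords the generator is free to leave the server's real range.
print(min(unbounded_samples), max(unbounded_samples))
# With keywords every generated value stays within 1..100.
print(min(bounded_samples), max(bounded_samples))
```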

Why this pattern keeps happening

Teams often document constraints in descriptions because it’s quick and readable. But time and again, that choice makes the schema unreliable for machines. Modern API ecosystems include automated testing, contract verification, SDK generation, and even LLM-driven clients — all of which depend on machine-readable constraints.

Practical recommendations

- Treat the schema as the single source of truth: if behavior changes, update the schema first, then the implementation. Doing it the other way round leads to drift.

- Use JSON Schema keywords: minimum, maximum, pattern, enum, oneOf/anyOf/required. Avoid relying on free-text descriptions to communicate machine-level constraints.

- Run MCP server testing early and often: integrate Specmatic’s MCP Auto-Test into CI to detect drift before consumers depend on the API.

- Model mutually exclusive fields explicitly: use oneOf or dependent schemas to express exclusivity rather than assuming clients will “figure it out.”

- Assume automated consumers: LLMs and other automated agents will make requests strictly from the schema. Make that schema robust.
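Some of these recommendations can be partly automated. The following is a rough heuristic sketch of a schema lint that flags numeric properties whose description hints at a range while no bound keyword is declared. The function name, the phrase list, and the sample schema are all assumptions, and the phrase list is far from exhaustive.

```python
import re

# Phrases suggesting a numeric range is hidden in prose (heuristic, incomplete).
RANGE_HINT = re.compile(
    r"\b(between|at most|at least|up to|maximum|minimum|smaller than|greater than)\b",
    re.IGNORECASE,
)
BOUND_KEYWORDS = ("minimum", "maximum", "exclusiveMinimum", "exclusiveMaximum")


def find_prose_only_bounds(input_schema: dict) -> list[str]:
    """List numeric properties that describe a range in prose but declare no bound keyword."""
    flagged = []
    for name, prop in input_schema.get("properties", {}).items():
        if prop.get("type") not in ("number", "integer"):
            continue  # only numeric properties are checked
        has_keyword = any(keyword in prop for keyword in BOUND_KEYWORDS)
        if not has_keyword and RANGE_HINT.search(prop.get("description", "")):
            flagged.append(name)
    return flagged


# Hypothetical tool input schema mixing good and bad definitions.
sample_schema = {
    "type": "object",
    "properties": {
        "prompt": {"type": "string", "description": "Text prompt, up to 500 characters."},
        "num_inference_steps": {"type": "number", "description": "numeric value between 1 and 16"},
        "limit": {"type": "integer", "minimum": 1, "maximum": 100, "description": "At most 100."},
    },
}

print(find_prose_only_bounds(sample_schema))  # ['num_inference_steps']
```

A check like this does not replace running tests against the server, but it can catch the description-only pattern before the schema is published.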

How MCP server testing helps teams

Specmatic’s MCP Auto-Test automates the generation and execution of requests based on a server’s declared MCP schema. It exposes mismatches between what the schema promises and what the implementation enforces. That means you can:

- Catch required-field mismatches (schema says optional, implementation requires it).

- Detect missing numeric or string constraints that cause invalid values.

- Find logic-based rules like mutually exclusive fields that are not represented in the schema.

- Prevent downstream hallucinations from LLM-based clients that build requests mechanically.

Automated MCP server testing reduces guesswork and surfaces discrepancies that would otherwise be discovered by frustrated integrators or production bug reports.
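Conceptually, the core loop is simple: build a payload the schema allows, send it, and report any rejection as drift. The sketch below shows that idea against a local stand-in function. It is not how Specmatic is implemented, and `fake_tool`, `minimal_arguments`, and `detect_drift` are hypothetical names.

```python
from typing import Callable, Optional

# Hypothetical declared schema: nothing is marked as required.
declared_schema = {
    "type": "object",
    "properties": {"prompt": {"type": "string"}},
}


def fake_tool(arguments: dict) -> str:
    """Stand-in for a remote tool whose implementation insists on prompt."""
    if "prompt" not in arguments:
        raise ValueError("no value provided for the required argument prompt")
    return "ok"


def minimal_arguments(schema: dict) -> dict:
    """Smallest payload the schema allows: required fields only (strings assumed)."""
    return {name: "example" for name in schema.get("required", [])}


def detect_drift(schema: dict, handler: Callable[[dict], str]) -> Optional[str]:
    """Return a drift report if the handler rejects a schema-valid payload."""
    payload = minimal_arguments(schema)
    try:
        handler(payload)
    except ValueError as err:
        return f"schema allows {payload} but the tool rejected it: {err}"
    return None


# The declared schema permits an empty payload, so the rejection is reported as drift.
print(detect_drift(declared_schema, fake_tool))
```

Once "required": ["prompt"] is added to the schema, the same loop sends a payload containing prompt and reports nothing.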

Conclusion

MCP server testing revealed a simple truth: your schema is the contract you make with the world. If that contract is vague or inconsistent with the implementation, every automated consumer — tests, SDK generators, and LLMs — will eventually break in surprising ways. Use standard JSON Schema constructs to make constraints machine-readable, declare required fields, and model mutually exclusive properties explicitly.

Run Specmatic’s MCP Auto-Test (or similar contract-based tests) as part of your development lifecycle to catch schema-implementation drift early. It’s a small investment with outsized returns in reliability, developer experience, and trust.

FAQ — MCP server testing

What is MCP server testing?

MCP server testing is the practice of running automated tests against a Model Context Protocol (MCP) server using its declared schema to generate request payloads and validate responses. Tools like Specmatic’s MCP Auto-Test generate inputs from the schema and check whether the server’s behavior matches the declared contract.

Why did Specmatic send invalid values during tests?

Specmatic generates inputs strictly from the schema. If the schema lacks explicit constraints (for example, maximum/minimum for numbers, or oneOf for mutually exclusive fields), Specmatic has no machine-readable guidance and may generate values outside the intended range. That’s why using standard JSON Schema keywords is essential.

How do I prevent schema-implementation drift?

Make sure the schema is the authoritative source of truth. Update the schema whenever API behavior changes, add machine-readable constraints (minimum, maximum, enum, oneOf), and run MCP server testing in CI to catch drift before it affects clients.

Can LLMs be trusted to follow schema descriptions?

No. Relying on natural language descriptions to convey constraints invites hallucinations, because nothing enforces them. Machine-readable schema fields, by contrast, can be checked by a validator before a request is ever sent. Always encode constraints using JSON Schema keywords to ensure deterministic behavior.

How often should I run MCP server testing?

Run it automatically on every schema or implementation change — for example, in pull request checks and nightly CI runs. The cost of running the tests is small compared to the cost of tracking down integration issues caused by schema drift.

What are the most common schema mistakes we found?

- Missing required arrays for fields that implementations treat as mandatory.

- Using descriptions to state numeric limits instead of minimum/maximum.

- Not modeling mutually exclusive fields with oneOf.

- Lack of enums for finite sets that should be strictly enforced.

If you’re responsible for an MCP server or consume one, make schema quality a first-class concern. MCP server testing is a fast, scalable way to ensure your contract matches reality and to keep integrations working smoothly.