When Downstream Services Lag, Does Your API Gracefully Accept with 202 Responses?

By Naresh Jain

When Downstream Services Lag: Designing Reliable APIs with 202 responses

As systems get distributed, synchronous calls to downstream services become fragile. When a downstream service cannot produce a resource immediately, a well-designed API should not break or block forever. Instead, many architectures return 202 responses to accept the request and point clients to a way to retrieve the result later. Handling 202 responses correctly is essential for user experience, observability, and predictable retries.

Why 202 responses matter

A 202 response signals acceptance, not completion: the server has received the request, but the work will finish asynchronously. This shortens the client's wait and makes your API resilient to slow or intermittent downstream systems. Rather than returning an immediate 201 with an ID that may not exist yet, the API returns a monitor link where the client can poll for completion.

Core pattern: Accept now, provide a monitor endpoint

The typical flow when issuing 202 responses is:

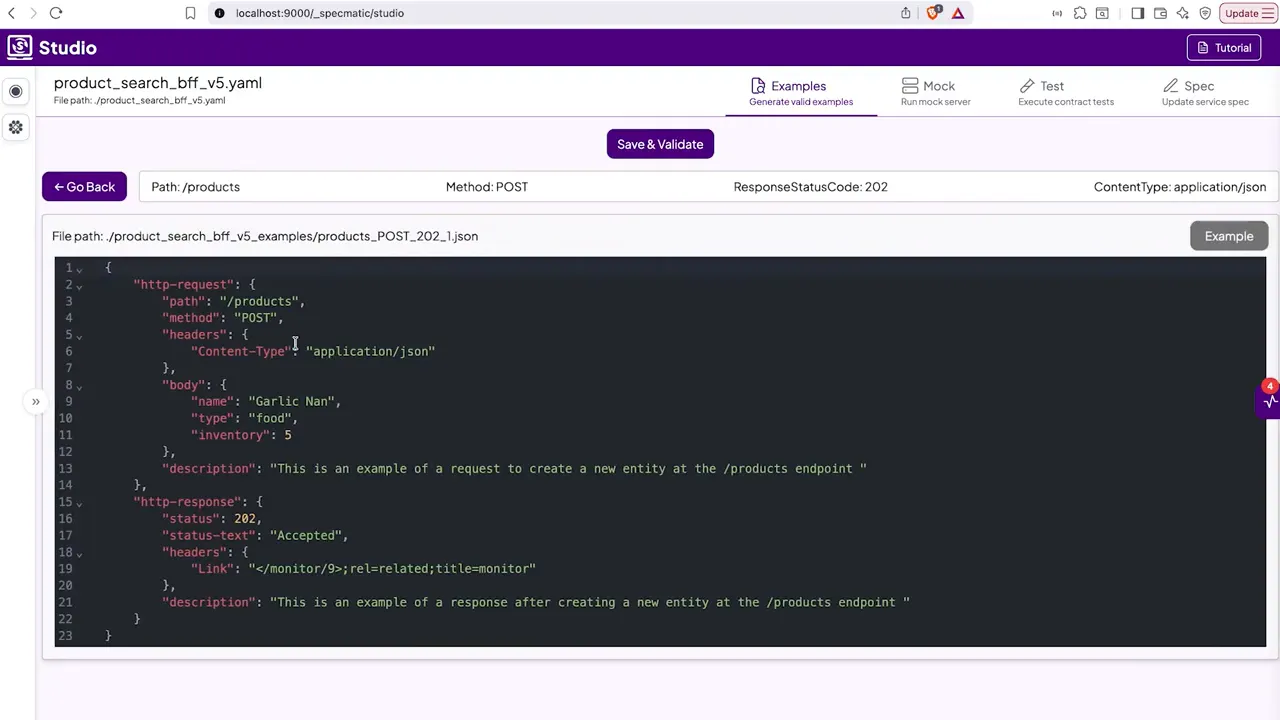

- Client posts a create request to /products.

- The backend attempts to create the resource in a downstream service.

- If the downstream service is slow, the API returns a 202 response with a location or monitor link.

- The client uses the monitor link to poll for the final result once the downstream service completes.

The monitor pattern keeps the initial response light and avoids blocking. It also formalizes how clients should retry or wait for completion.
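The flow above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the in-memory `_pending` store, the `/monitor/{id}` path, the `Location` header, the 50 ms timeout, and the status codes the monitor returns while pending (202) or after a downstream failure (502) are all assumptions made for the example.

```python
import concurrent.futures
import time
import uuid

_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)
_pending = {}  # monitor id -> Future for the in-flight downstream call


def create_product(payload, downstream, timeout_s=0.05):
    """Handle POST /products; returns (status, headers, body)."""
    future = _executor.submit(downstream, payload)
    try:
        # Wait briefly; a fast downstream lets us answer synchronously.
        product = future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # Too slow: keep the work running and hand back a monitor link.
        monitor_id = uuid.uuid4().hex
        _pending[monitor_id] = future
        return 202, {"Location": f"/monitor/{monitor_id}"}, None
    return 201, {}, product


def get_monitor(monitor_id):
    """Handle GET /monitor/{id}; returns (status, body)."""
    future = _pending.get(monitor_id)
    if future is None:
        return 404, None
    if not future.done():
        return 202, None  # assumption: "still pending" is reported as 202
    if future.exception() is not None:
        return 502, None  # assumption: downstream failure surfaces as 502
    return 200, future.result()


def slow_downstream(payload):
    """Hypothetical downstream stub that takes longer than the timeout."""
    time.sleep(0.2)
    return {"id": 1, **payload}
```

Calling `create_product(payload, slow_downstream)` takes the 202 branch because the stub outlasts the timeout, while a downstream that answers within the timeout takes the 201 branch. A real service would also need to expire old monitor entries, which this sketch leaves out.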

Simulating delayed downstream behavior in tests

To trust your implementation, you must test for 202 responses. Mocks that instantly return a 201 will hide real-world failures. The trick is to introduce controlled delays so the BFF or API gateway returns a 202 instead of a 201.

Key test elements:

- Mock delay: Configure the mock downstream service to delay its response long enough that the API falls back to asynchronous acceptance.

- Transient behavior: Make the delayed response valid only for a specific request instance so tests can validate retry logic cleanly.

- Monitor verification: Ensure the monitor endpoint eventually returns the final successful resource once the downstream service completes.
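As a rough illustration of the first two elements, here is a hand-rolled downstream stub that delays only the first request matching a predicate. The class name, the predicate, and the product fields are hypothetical; a dedicated mocking tool would normally express the same idea through configuration rather than code.

```python
import time


class TransientDelayMock:
    """Hypothetical downstream stub: delays the first matching request only."""

    def __init__(self, matches, delay_s):
        self.matches = matches    # predicate deciding which requests are delayed
        self.delay_s = delay_s    # must exceed the API's own downstream timeout
        self.used = False         # flips to True once the delay has been served
        self._next_id = 1

    def __call__(self, payload):
        if not self.used and self.matches(payload):
            self.used = True      # transient: the delayed behavior is consumed
            time.sleep(self.delay_s)
        product = {"id": self._next_id, **payload}
        self._next_id += 1
        return product
```

The first matching call is slow enough to push the API onto its asynchronous path; every later call, including an identical one, responds immediately, so follow-up requests in the same test are not affected.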

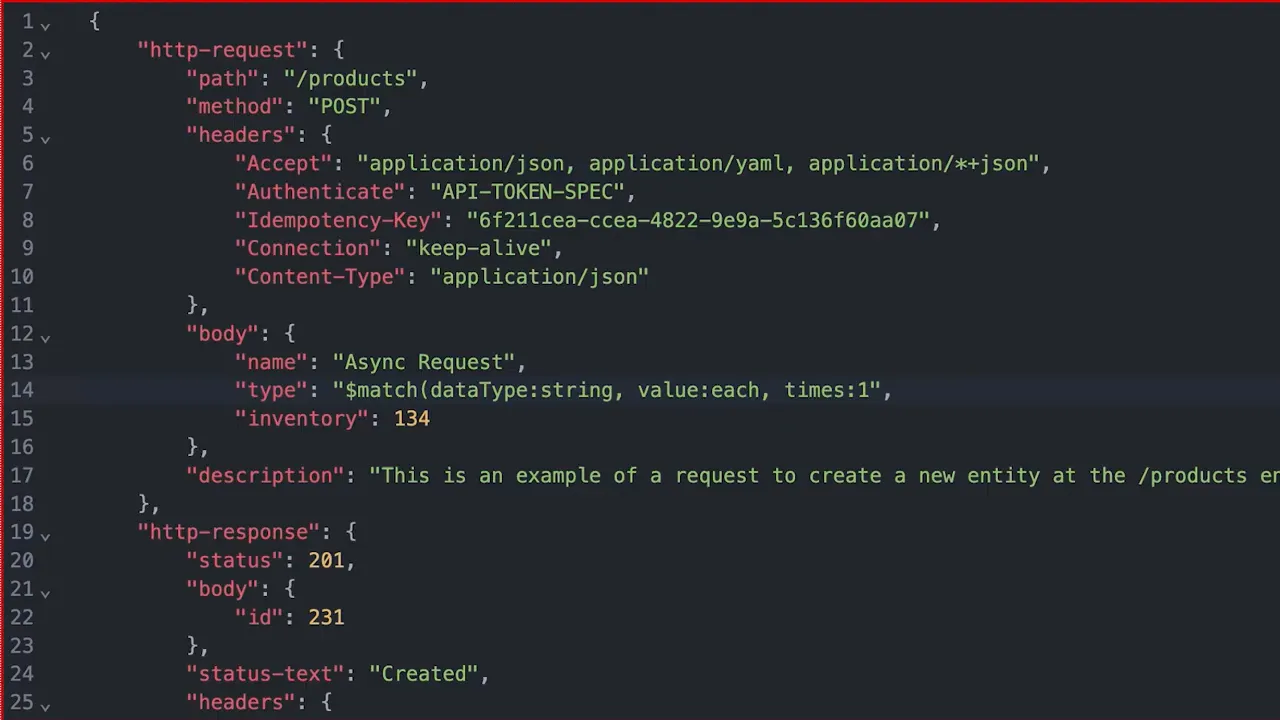

Practical tips: match functions and transient examples

When crafting tests that exercise 202 responses, avoid hard coding fields. Use match functions to express flexible expectations. For example:

- For a product name that must be unique, match on the data type (string) rather than a fixed value, so the enum-backed type field can still be exercised across its possible values.

- For numeric fields like inventory, match a min, a max, and a value in between rather than a single fixed number.

Combining match functions with a transient configuration ensures that a particular combination of request attributes triggers the delayed path only once. This allows you to validate that the initial call returns a 202 response and that a later poll to the monitor returns the completed resource.
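To make the idea of match functions concrete, here is a small sketch in plain Python. The helper names, the four type values, and the inventory bounds are invented for illustration; real tools ship their own matcher syntax.

```python
def is_string(value):
    """Accept any string, so a unique product name does not need hard coding."""
    return isinstance(value, str)


def one_of(*allowed):
    """Accept any value from a fixed enum."""
    return lambda value: value in allowed


def in_range(lo, hi):
    """Accept integers between lo and hi inclusive (bool is excluded)."""
    return lambda value: (
        isinstance(value, int) and not isinstance(value, bool) and lo <= value <= hi
    )


def matches(pattern, payload):
    """True when payload has exactly the pattern's keys and every check passes."""
    return pattern.keys() == payload.keys() and all(
        check(payload[key]) for key, check in pattern.items()
    )


# Hypothetical expectation for a product creation request.
PRODUCT_PATTERN = {
    "name": is_string,
    "type": one_of("book", "food", "gadget", "other"),
    "inventory": in_range(1, 100),
}
```

A request such as `{"name": "Desk lamp", "type": "gadget", "inventory": 100}` satisfies `PRODUCT_PATTERN`, while an inventory of 0 or an unknown type does not, so one expectation covers many concrete requests.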

Step-by-step: from failing test to robust validation

- Run your API test suite with a straightforward mock. If the mock responds immediately you will see 201s when you expect 202 responses.

- Add a delay to the mock for the specific request pattern you want to test. The delay must be long enough for the API to choose asynchronous acceptance.

- Use a match function to capture flexible values for the request body so you can test multiple enum variants and numeric constraints.

- Mark the mock behavior as transient so it only applies to the exact combination you want to simulate once. This avoids flakiness in subsequent test runs.

- Re-run the tests and verify the initial call returns a 202 response, then poll the monitor endpoint and confirm it eventually returns a 200 or 201 with the expected resource details once the downstream service completes.

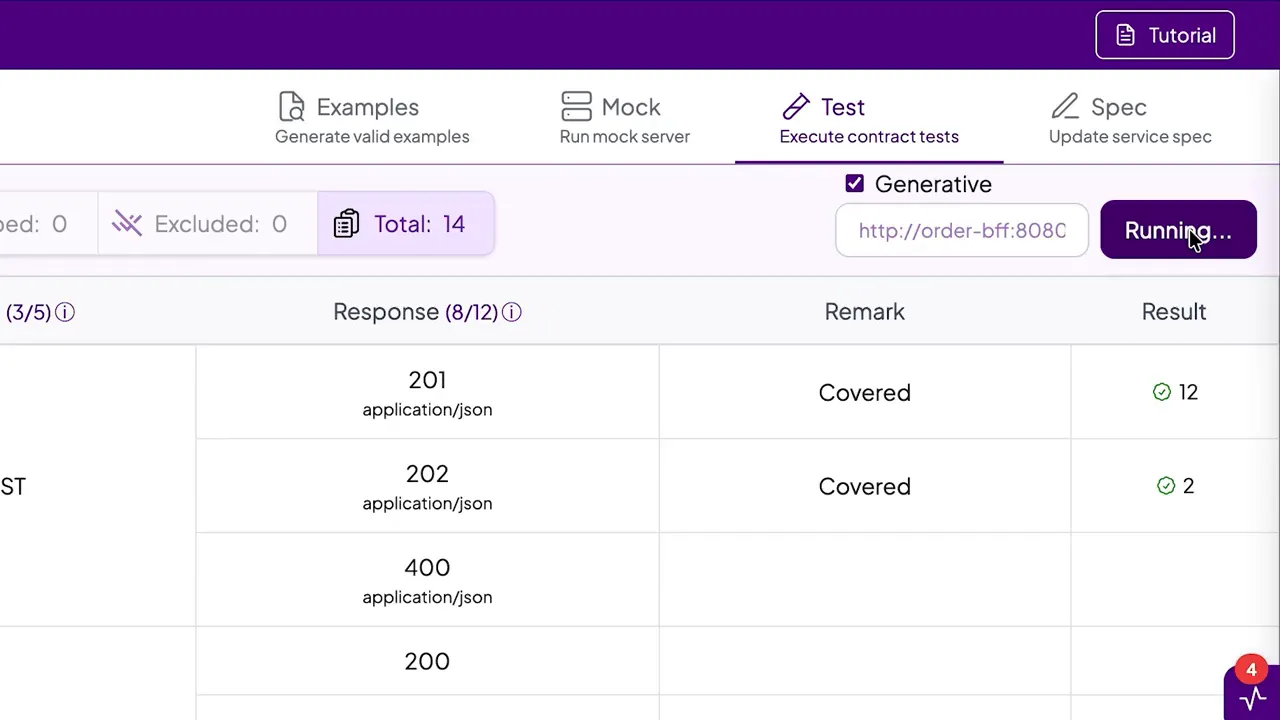

Scaling tests with generative testing

One-off tests are useful, but systems must handle every valid combination. Enabling generative testing lets the test tool synthesize all combinations of enum values and numeric constraints, validating 202 responses across the product surface automatically.

How generative testing helps:

- Automatically generates combinations of the enum-backed type field (for example, four different types).

- Cycles through numeric constraints like inventory min, max, and intermediate values.

- Runs the delayed path for each generated case so you can validate that 202 responses and monitor retries are handled for all permutations.

In practice, generative testing may produce dozens or hundreds of test cases. Each case might take a couple of seconds due to the simulated timeout and monitor retries, but the result is confidence: every permutation that could yield a 202 response is exercised.
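The combinatorics are easy to see in a short sketch. The type values and inventory bounds below are placeholders, and the generator is a stand-in for whatever your test tool derives from the API specification.

```python
import itertools

PRODUCT_TYPES = ["book", "food", "gadget", "other"]  # hypothetical enum values
INVENTORY_MIN, INVENTORY_MAX = 1, 100                # hypothetical constraints


def inventory_samples(lo, hi):
    """Boundary values plus one value in between."""
    return [lo, (lo + hi) // 2, hi]


def generate_cases():
    """Yield one request body per (type, inventory) combination."""
    samples = inventory_samples(INVENTORY_MIN, INVENTORY_MAX)
    for product_type, inventory in itertools.product(PRODUCT_TYPES, samples):
        yield {
            "name": f"sample-{product_type}-{inventory}",
            "type": product_type,
            "inventory": inventory,
        }


cases = list(generate_cases())
print(len(cases))  # 4 types x 3 inventory values = 12
```

Each extra constrained field multiplies the case count, which is how a modest schema reaches dozens or hundreds of cases.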

Common pitfalls and how to avoid them

- Returning a 201 too quickly because the mock has no delay. Add explicit mock delays for the async scenario to validate 202 responses.

- Over-constraining tests with hard-coded values. Use match functions to capture the structure and allow variability.

- Forgetting to mark mock behaviors as transient, which can cause subsequent tests to misrepresent the async flow.

- Not validating the monitor endpoint. Testing only the initial 202 response without polling the monitor misses whether the downstream service eventually succeeds.

Checklist for reliable 202 responses

- API returns a monitor link when the downstream service is slow.

- Mocks simulate delay to force the asynchronous path.

- Match functions are used to avoid fragile, hard-coded tests.

- Transient mock behavior restricts the delay to the intended test scenario.

- Monitor polling validates eventual success and correct resource details.

- Generative tests are enabled to exercise all enum and constraint combinations.

Conclusion

Designing for 202 responses is not optional when downstream services can be slow. It is a pragmatic approach that protects clients from blocking, improves resilience, and clarifies retry behavior through a monitor endpoint. Combined with smart mocking, match functions, transient behavior, and generative testing, teams can validate asynchronous flows across all combinations and maintain confidence that the API behaves predictably in production.

What exactly does a 202 response indicate?

A 202 response indicates the request has been received and accepted for processing, but the processing has not been completed. It often includes a monitor or location link for the client to poll for completion.

How should clients consume a monitor endpoint after a 202 response?

Clients should poll the monitor endpoint at reasonable intervals or use an exponential backoff strategy. The monitor should eventually return the final state, such as a 200 with the resource details or a failure code if the downstream service fails.
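A client-side polling loop with exponential backoff might look like the sketch below. It assumes the monitor answers 202 while the work is still pending and any other status once it has reached a final state; the function name, parameters, and default delays are illustrative choices, and `fetch` stands in for whatever HTTP call your client makes.

```python
import time


def poll_monitor(fetch, initial_delay_s=0.1, factor=2.0, max_delay_s=5.0,
                 max_attempts=8, sleep=time.sleep):
    """Call fetch() until it reports a final state, backing off between tries.

    fetch is a zero-argument callable returning (status, body).
    """
    delay = initial_delay_s
    for attempt in range(1, max_attempts + 1):
        status, body = fetch()
        if status != 202:  # assumption: 202 means "still pending"
            return status, body
        if attempt < max_attempts:
            sleep(delay)
            delay = min(delay * factor, max_delay_s)  # cap the backoff
    raise TimeoutError("monitor did not reach a final state")
```

Passing `sleep` as a parameter keeps the loop testable without real waiting, and the attempt cap prevents a client from polling forever if the downstream service never completes.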

How do mock delays affect test runtime?

Introducing mock delays increases test duration because each case will wait for the simulated timeout and possibly multiple retries. Generative testing compounds this, so plan test execution time accordingly. Parallelizing tests and tuning delay durations can help.
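For planning purposes, a back-of-the-envelope estimate is often enough. The helper below is a simplification that assumes every case takes the same time and that workers are fully independent; the numbers in the example are made up.

```python
import math


def estimated_runtime_s(cases, seconds_per_case, workers=1):
    """Rough wall-clock estimate: cases are split evenly across workers."""
    return math.ceil(cases / workers) * seconds_per_case


print(estimated_runtime_s(12, 2))             # 24 seconds when run serially
print(estimated_runtime_s(12, 2, workers=4))  # 6 seconds with four workers
```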

Can generative testing produce false positives for 202 scenarios?

Generative testing reduces false positives by exercising a wide range of combinations. However, ensure your mock configurations faithfully represent production timing and transient behavior to avoid optimistic test results.