Testing API Resiliency using Kotlin: Leveraging Functional Programming at Scale

25 Jan 2025

Online

Summary

Resiliency is a cornerstone of robust API design. Functional programming in Kotlin provides an elegant toolkit for ensuring API robustness through advanced testing techniques like property-based testing and mutation testing. These techniques play a critical role in uncovering edge cases, verifying fault tolerance, and ensuring the reliability of APIs at scale.

In this talk, we’ll explore how Kotlin’s functional programming features, such as safe calls, list comprehension-style operations (map/fold), sequences (lazy evaluation), and tail-recursion optimisation, enable testing of APIs that can withstand real-world challenges. Discover how property-based testing allows us to go beyond hard-coded test cases, exploring a wider input space to identify edge cases that traditional testing often misses. We’ll also delve into mutation testing, demonstrating how it verifies the effectiveness of test suites by identifying weak spots in API behavior under failure scenarios.

Drawing from my experience using Kotlin in Specmatic, I’ll showcase real-world examples of validating API contracts dynamically, handling unpredictable inputs gracefully, and improving fault tolerance. Learn how Kotlin’s functional constructs enable elegant, maintainable, and scalable testing solutions for high-pressure environments.

Join this session to discover how combining functional programming with advanced testing methodologies can help you build and test APIs that are not just functional, but resilient. Whether you’re an API developer or a testing enthusiast, you’ll leave with actionable strategies to improve the reliability and quality of your APIs.

Transcript

In an increasingly interconnected world, API resiliency is crucial for maintaining the functionality of applications. This presentation dives into the methodologies for testing API robustness using Kotlin, emphasizing the power of functional programming techniques to enhance reliability and fault tolerance.

Table of Contents

- Introduction to API Resiliency Testing

- The Importance of API Resiliency

- Real-World Examples of API Failures

- Common Challenges in API Resiliency Testing

- Introducing Specmatic: An Open Source Solution

- Understanding the Architecture of API Testing

- Setting Up a Simple API Test

- Dynamic Test Generation with Specmatic

- Exploring Positive and Negative Test Scenarios

- Analyzing Test Results and Coverage

- Addressing the Combinatorial Explosion of Tests

- Optimizing Memory Usage with Lazy Lists

- The Shift from Eager to Lazy Evaluation

- Final Thoughts and Best Practices

- Frequently Asked Questions

Introduction to API Resiliency Testing

API resiliency testing is a critical practice in software development. It ensures that APIs can withstand various failures and continue to operate effectively. In today’s interconnected landscape, where services depend on one another, the stability of an API is paramount. This testing goes beyond just checking if an API works; it involves simulating real-world failures to see how well the API can recover.

Understanding API Resiliency

To grasp the essence of API resiliency, we need to consider the reliability of the services that depend on it. APIs are the backbone of modern applications, and their failure can lead to cascading issues across multiple systems. Therefore, testing for resiliency is not just a good practice; it’s a necessity.

Key Components of API Resiliency Testing

- Failure Simulation: Actively simulating failures to assess how the API handles them.

- Load Testing: Evaluating API performance under high traffic conditions.

- Dependency Testing: Testing how the API interacts with other services and what happens when those services fail.

- Monitoring and Alerts: Ensuring that proper monitoring is in place to detect failures quickly.
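
As a minimal sketch of the failure-simulation idea, the snippet below swaps a dependency for a stand-in that fails on demand and checks that the caller degrades gracefully. `SimulatedDependency` and `priceOrDefault` are hypothetical names invented for illustration; they are not part of Specmatic or any other library.

```kotlin
// Hypothetical dependency that fails on demand so an outage can be simulated in a test.
class SimulatedDependency(private val failing: Boolean) {
    fun fetchPrice(productId: Int): Int {
        if (failing) throw IllegalStateException("simulated outage")
        return productId * 10
    }
}

// Code under test: falls back to a default value instead of propagating the failure.
fun priceOrDefault(dependency: SimulatedDependency, productId: Int, default: Int = -1): Int =
    runCatching { dependency.fetchPrice(productId) }.getOrDefault(default)

fun main() {
    println(priceOrDefault(SimulatedDependency(failing = false), 7)) // healthy dependency: 70
    println(priceOrDefault(SimulatedDependency(failing = true), 7))  // simulated outage: -1
}
```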

The Importance of API Resiliency

In a world where applications rely heavily on APIs, their resiliency is vital. A single API failure can lead to widespread disruptions. For instance, if a payment processing API goes down, it can halt transactions across multiple platforms, affecting businesses and consumers alike. This interconnectedness amplifies the impact of API failures.

Consequences of API Failures

When APIs fail, the repercussions can extend far beyond the immediate service. Consider the following examples:

- Financial Services: A failure in a banking API can prevent customers from accessing funds, leading to significant financial loss.

- E-commerce: An outage in a product API can result in lost sales and frustrated customers.

- Healthcare: APIs that manage patient data must be resilient; failures can compromise patient care.

Real-World Examples of API Failures

Real-world incidents highlight the critical need for API resiliency testing. One notable example is the outage of a leading cloud service provider that affected numerous businesses relying on its APIs. Services were disrupted, leading to lost revenue, customer dissatisfaction, and reputational damage.

Notable Incidents

- Airline Check-in Systems: A failure in a flight information API resulted in chaos at airports as passengers were unable to check in.

- Social Media Platforms: When a popular social media API went down, users were unable to post updates, affecting engagement and ad revenue.

- Payment Gateways: An outage in a payment processing API led to transactions being declined, frustrating customers and merchants alike.

Common Challenges in API Resiliency Testing

Despite its importance, many organizations struggle with implementing effective API resiliency testing. Here are some common challenges:

Challenges Faced

- Lack of Awareness: Many teams do not fully understand the implications of API failures.

- Resource Constraints: Limited time and budget can hinder comprehensive testing efforts.

- Complex Architectures: As systems grow more complex, testing each API in isolation becomes increasingly difficult.

- Inadequate Tools: The right tools are essential for effective testing, yet many organizations lack access to advanced solutions.

Introducing Specmatic: An Open Source Solution

Specmatic is an open-source tool designed to address the gaps in API testing, particularly around resiliency. It allows teams to simulate API behavior, conduct contract testing, and ensure that services adhere to defined specifications.

Key Features of Specmatic

- Contract Testing: Ensures that APIs meet predefined specifications, reducing the risk of failures.

- Service Simulation: Allows developers to simulate API responses, enabling testing even when the real service is unavailable.

- Integration with CI/CD: Easily integrates into existing pipelines, promoting continuous testing and feedback.

- Community Support: Being open source, it benefits from community contributions, ensuring ongoing improvement and feature enhancements.

Getting Started with Specmatic

To begin using Specmatic, developers can easily set it up within their existing environments. Documentation is available to guide users through the installation and configuration process. As teams adopt this tool, they can enhance their API resiliency testing practices and ultimately improve the reliability of their applications.

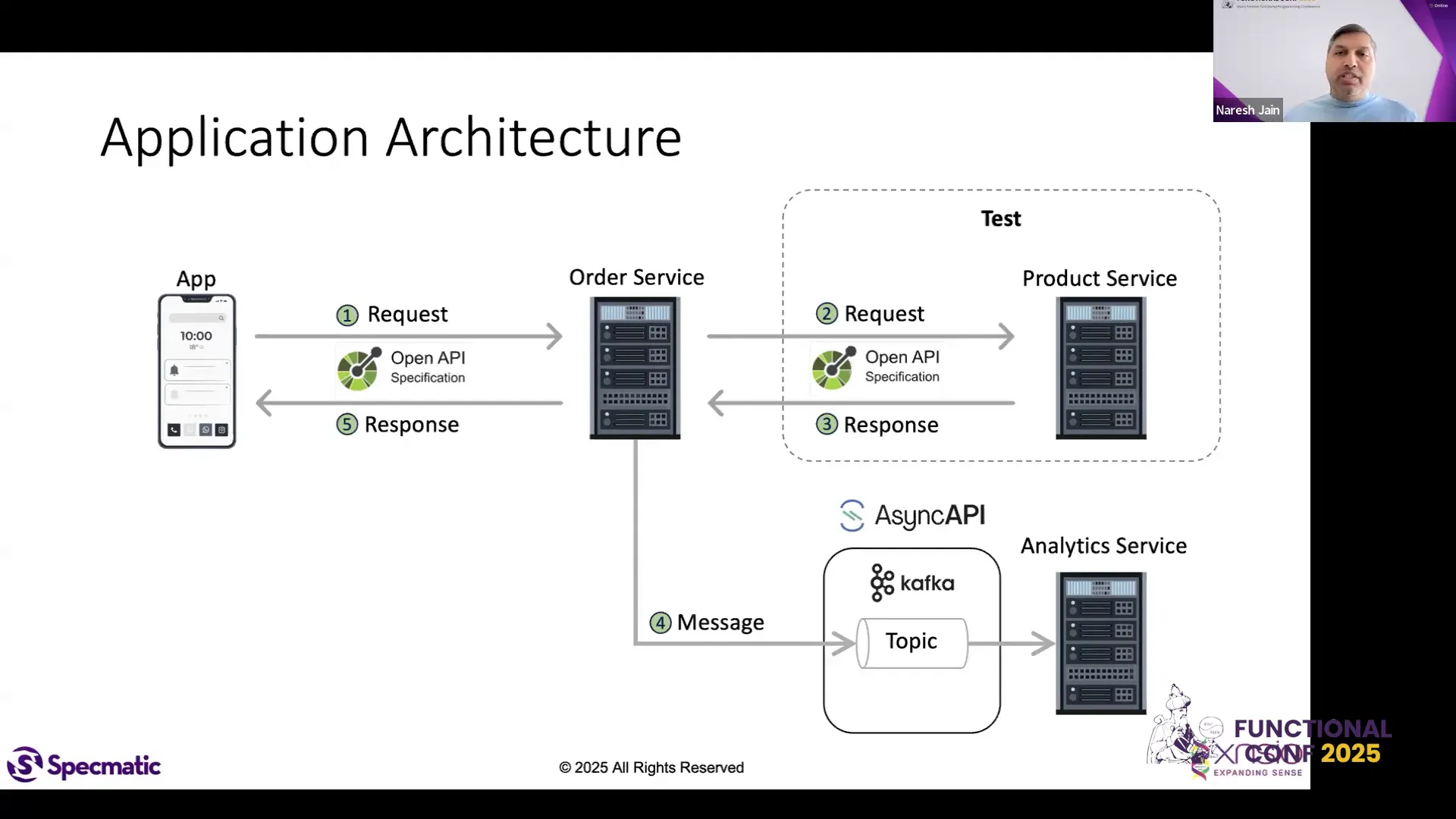

Understanding the Architecture of API Testing

API testing architecture is built on a foundation of specifications and contracts. The key lies in understanding how these elements interact to create a resilient API. At its core, an API test verifies the expected behavior of an API endpoint against its specification.

APIs and Specifications

APIs communicate through requests and responses, and specifications define how these interactions should occur. A well-defined specification acts as a contract between the service provider and the consumer, detailing the expected inputs, outputs, and error handling.

Contract Testing Frameworks

Contract testing frameworks like Specmatic leverage these specifications to automate the generation of tests. They ensure that the API adheres to its contract by validating requests and responses against the defined rules.
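
To make the idea of validating a payload against a contract concrete, here is a deliberately simplified sketch. It is not how Specmatic is implemented; `FieldType`, `FieldSpec`, and `validate` are hypothetical names, and a real specification covers far more than flat fields.

```kotlin
// Hypothetical, simplified contract: field name -> expected type and whether it is required.
enum class FieldType { STRING, NUMBER, BOOLEAN }

data class FieldSpec(val type: FieldType, val required: Boolean = true)

fun matches(type: FieldType, value: Any): Boolean = when (type) {
    FieldType.STRING -> value is String
    FieldType.NUMBER -> value is Number
    FieldType.BOOLEAN -> value is Boolean
}

// Returns human-readable violations; an empty list means the payload conforms.
fun validate(contract: Map<String, FieldSpec>, payload: Map<String, Any?>): List<String> {
    val missingOrMistyped = contract.mapNotNull { (name, spec) ->
        val value = payload[name]
        when {
            value == null -> if (spec.required) "missing required field '$name'" else null
            !matches(spec.type, value) -> "field '$name' should be ${spec.type}"
            else -> null
        }
    }
    val unexpected = (payload.keys - contract.keys).map { "unexpected field '$it'" }
    return missingOrMistyped + unexpected
}

fun main() {
    val contract = mapOf(
        "name" to FieldSpec(FieldType.STRING),
        "age" to FieldSpec(FieldType.NUMBER, required = false)
    )
    println(validate(contract, mapOf<String, Any?>("name" to "Ada", "age" to 36))) // no violations
    println(validate(contract, mapOf<String, Any?>("age" to "36")))                // two violations
}
```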

Test Generation Process

When you define a specification, tools like Specmatic can automatically generate a suite of tests. This process minimizes manual effort and enhances coverage. The generated tests can include both positive scenarios, where inputs are valid, and negative scenarios, where inputs are invalid or unexpected.

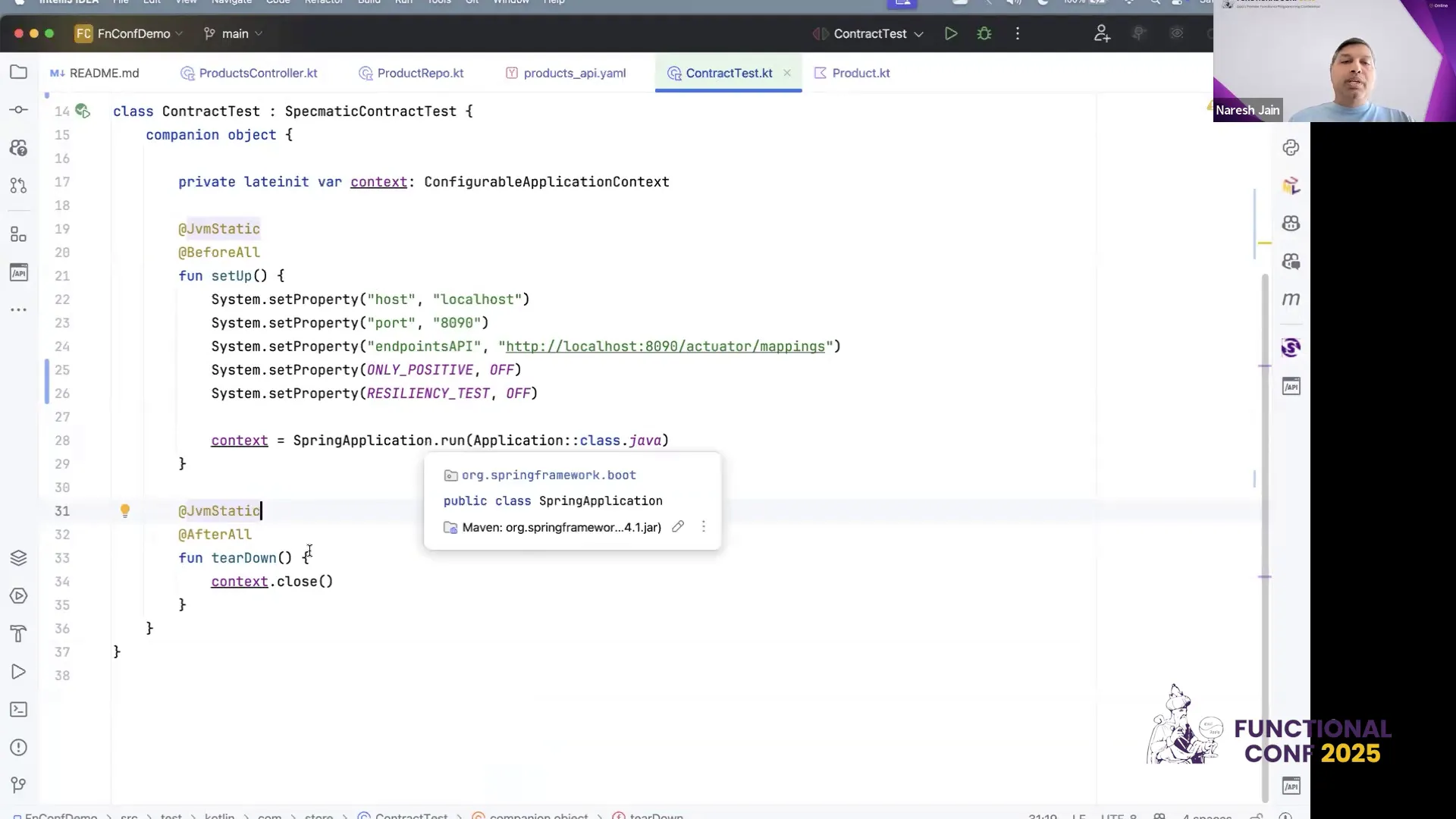

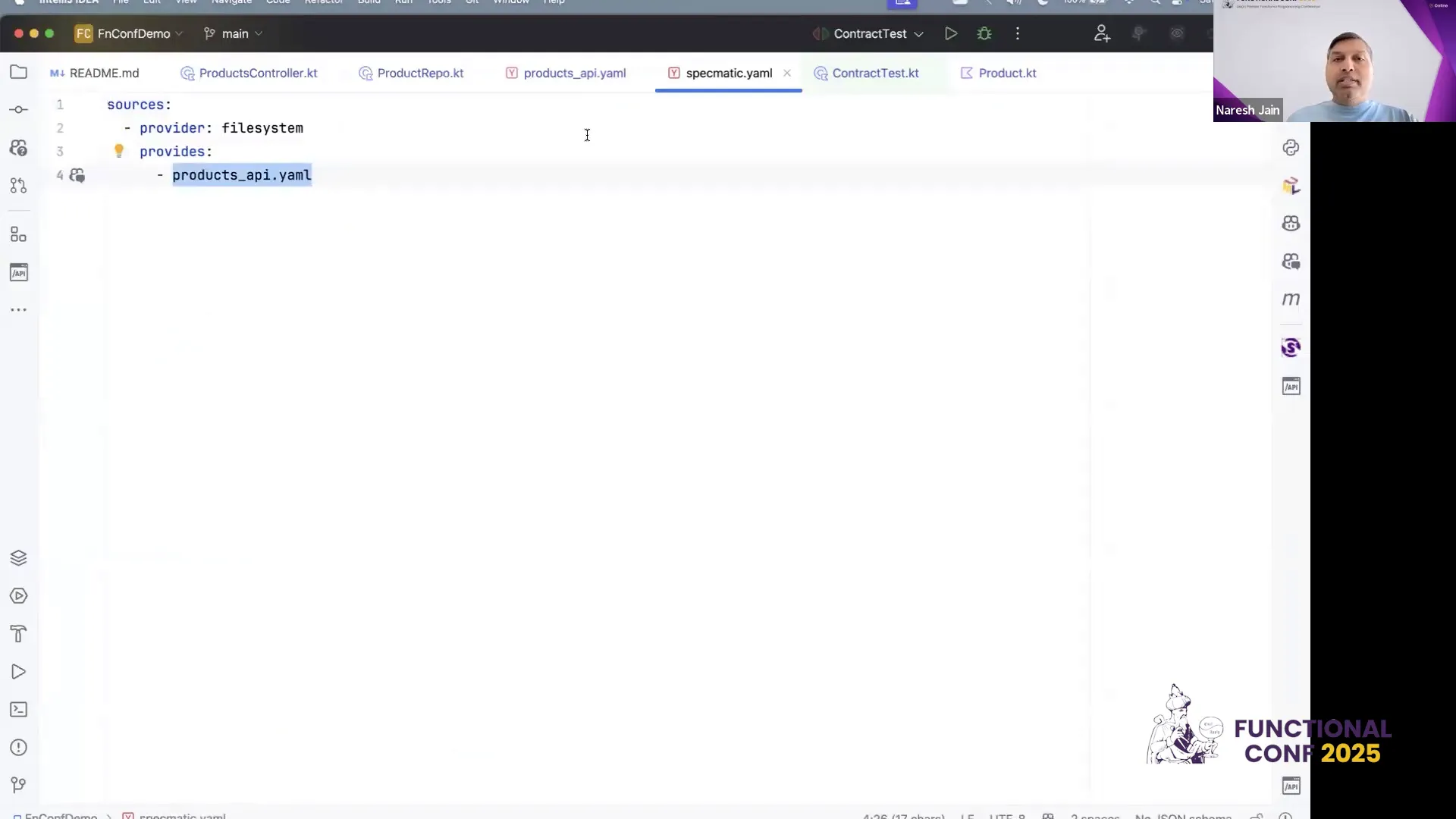

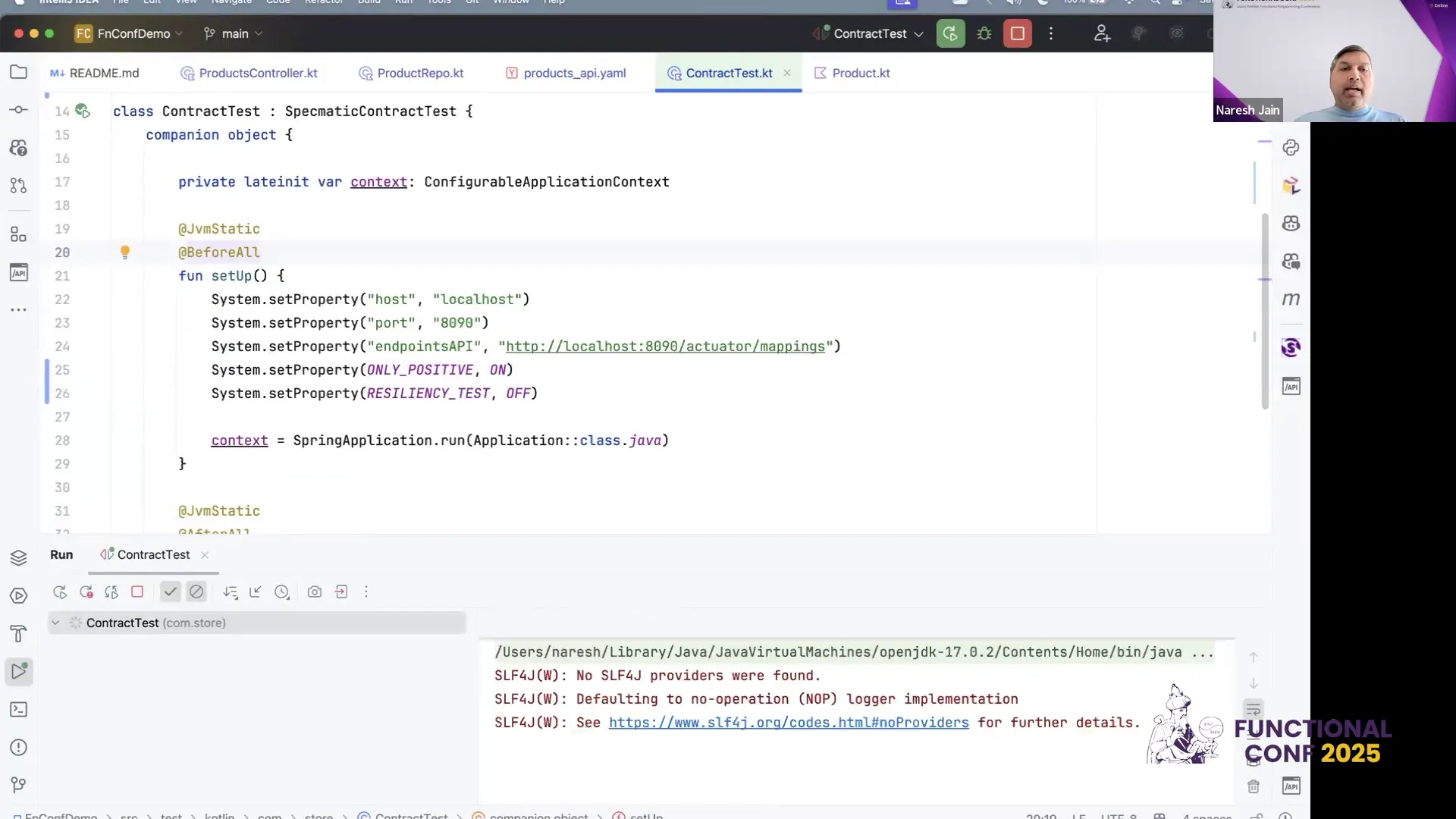

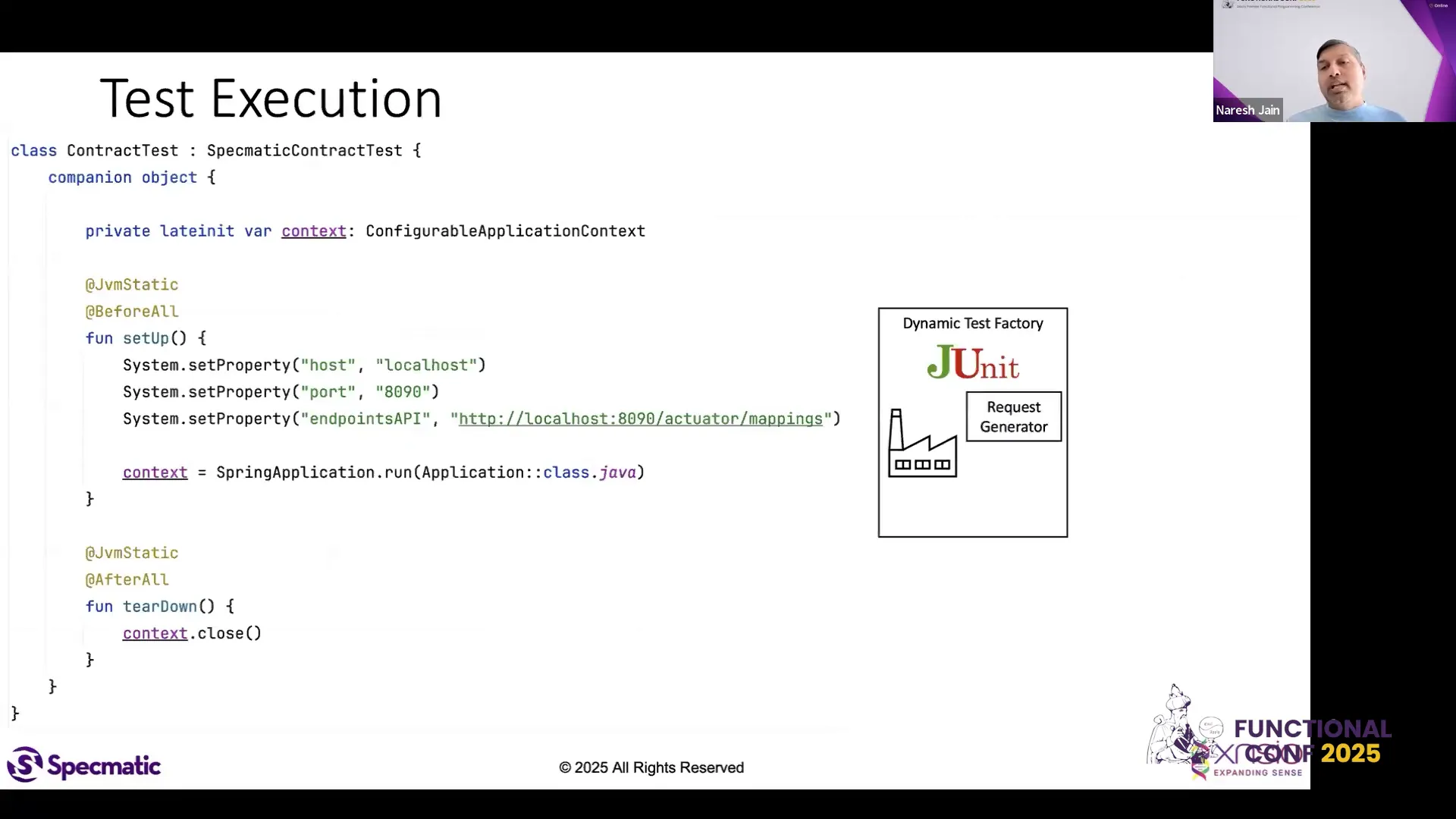

Setting Up a Simple API Test

Setting up an API test with Specmatic is straightforward. Begin by defining your API specification in a YAML file. This file outlines the endpoints, request parameters, and expected responses. Specmatic reads this file to generate tests automatically.

Creating the Specification

Your specification should detail all the necessary information for each API endpoint. For example, include the HTTP method, path, request body structure, and response formats. A well-structured specification is crucial for effective test generation.

Running Tests

Once your specification is ready, you can run the tests using Specmatic. The tool will simulate requests based on the defined scenarios and validate the responses against the expected outcomes. This process helps identify any discrepancies early in the development cycle.

Dynamic Test Generation with Specmatic

One of the standout features of Specmatic is its ability to generate dynamic tests. This capability allows the tool to create a wide range of scenarios based on the defined specification, significantly increasing test coverage.

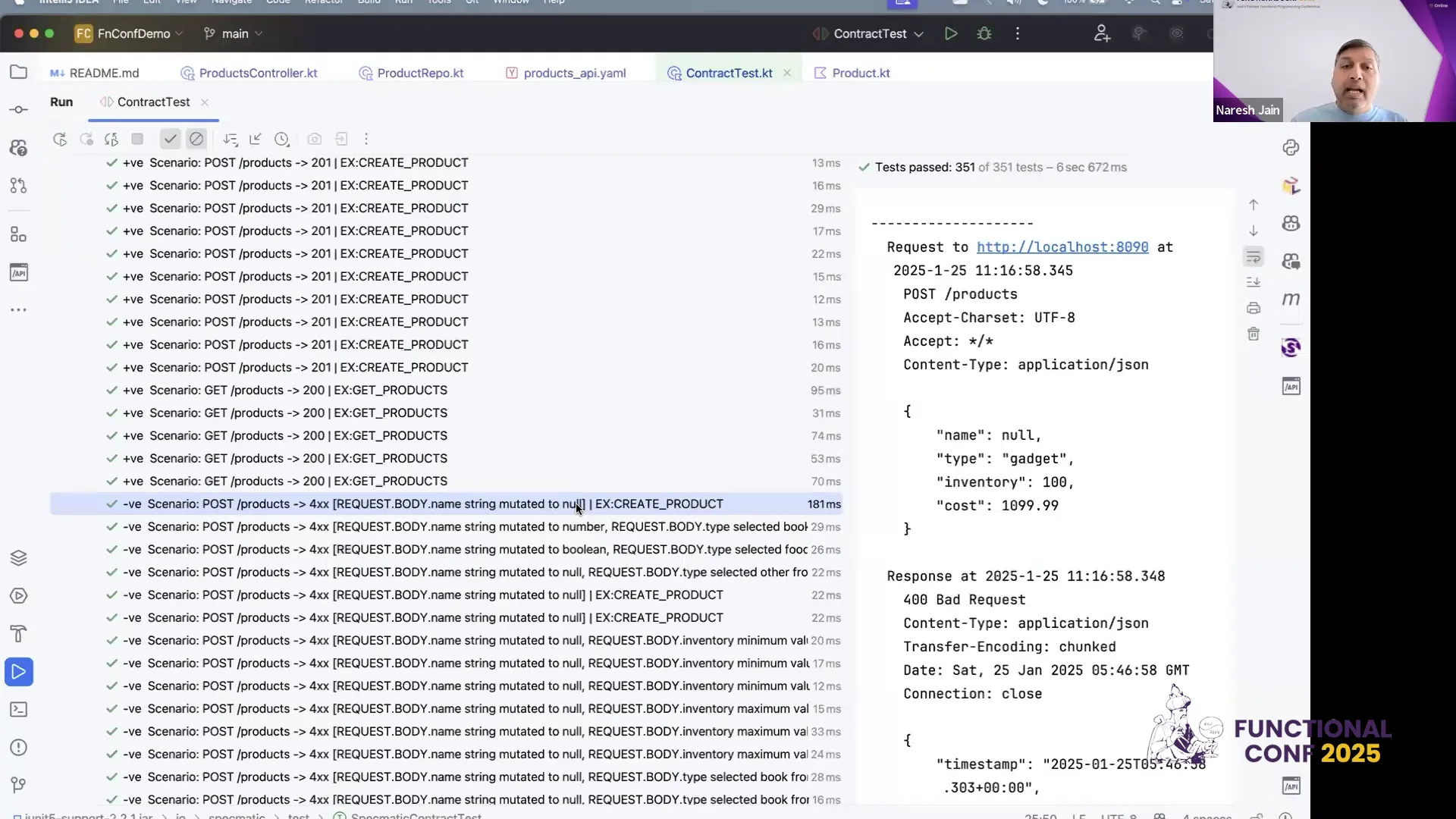

Positive Test Scenarios

When you enable positive test generation, Specmatic creates tests that validate the expected behavior of your API. This includes testing various valid inputs and ensuring that the API responds correctly.

Negative Test Scenarios

In addition to positive tests, Specmatic can also generate negative test scenarios. These tests help identify how the API behaves under erroneous conditions, such as invalid input types or missing required fields. This dual approach helps build confidence in the API’s robustness.

Exploring Positive and Negative Test Scenarios

Understanding both positive and negative test scenarios is essential in API testing. Positive tests confirm that valid inputs yield the expected results, while negative tests ensure that invalid inputs are handled gracefully.

Implementing Positive Tests

Positive tests should cover all valid input combinations defined in the specification. Specmatic can automatically generate these tests based on the examples provided within the spec file. This ensures comprehensive coverage without manual intervention.
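
One way to picture "all valid input combinations" is as a cartesian product built with a fold. The sketch below assumes a hypothetical input shape (each field mapped to the values the spec allows for it) and a hypothetical function name `validCombinations`; it illustrates the technique rather than Specmatic's actual generator.

```kotlin
// Hypothetical input: each field maps to the valid values the spec allows for it.
// Starting from one empty payload, every field multiplies the set of partial payloads.
fun validCombinations(fields: Map<String, List<Any>>): List<Map<String, Any>> =
    fields.entries.fold(listOf(emptyMap<String, Any>())) { partials, (name, values) ->
        partials.flatMap { partial -> values.map { value -> partial + (name to value) } }
    }

fun main() {
    val combos = validCombinations(
        mapOf<String, List<Any>>(
            "type" to listOf("book", "gadget"),
            "inStock" to listOf(true, false)
        )
    )
    combos.forEach { println(it) } // 2 x 2 = 4 payloads
}
```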

Implementing Negative Tests

Negative tests are equally important, as they reveal how the API handles unexpected situations. By mutating inputs or omitting required fields, you can test the API’s error-handling mechanisms. Because Specmatic can generate these tests from the specification, they do not have to be written and maintained by hand.
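
The two mutations mentioned here, omitting a required field and replacing a value with one of the wrong type, can be sketched as follows. `negativeVariants` is a hypothetical helper written for this illustration, and the type swap is intentionally crude.

```kotlin
// Each variant breaks exactly one thing in an otherwise valid payload.
fun negativeVariants(
    valid: Map<String, Any>,
    required: Set<String>
): List<Pair<String, Map<String, Any>>> {
    // Mutation 1: drop each required field in turn.
    val omissions = required.filter { it in valid }.map { name ->
        "omit required '$name'" to (valid - name)
    }
    // Mutation 2: swap each value for one of a different type
    // (strings become a number, everything else becomes a string).
    val typeMutations = valid.map { (name, value) ->
        val wrong: Any = if (value is String) 0 else "wrong-type"
        "wrong type for '$name'" to (valid + (name to wrong))
    }
    return omissions + typeMutations
}

fun main() {
    val valid = mapOf<String, Any>("name" to "Ada", "age" to 36)
    negativeVariants(valid, required = setOf("name")).forEach { (why, payload) ->
        println("$why -> $payload")
    }
}
```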

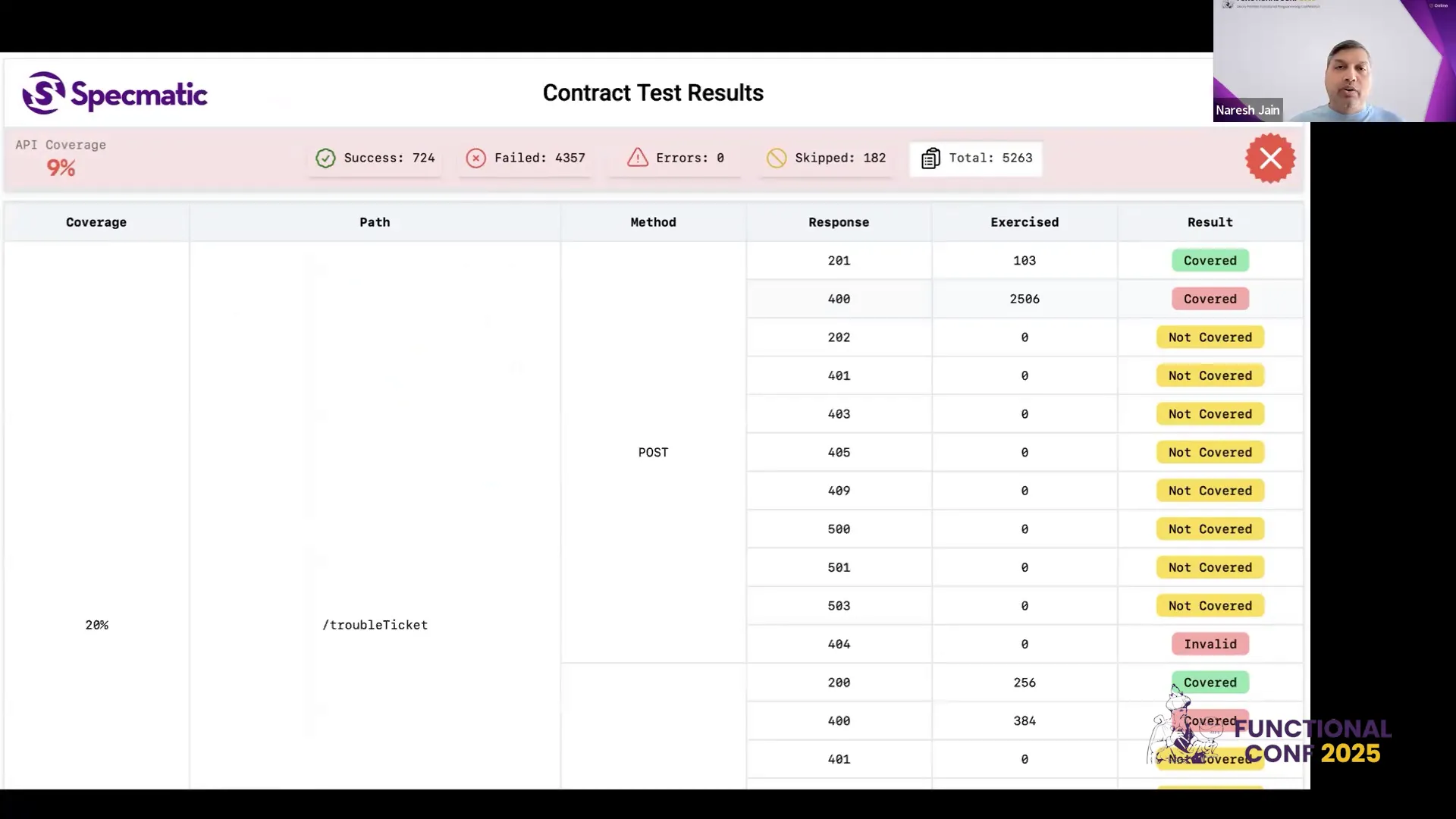

Analyzing Test Results and Coverage

Once tests are executed, analyzing the results is crucial. Specmatic provides detailed reports that outline which tests passed and which failed, along with the reasons for any failures. This insight is vital for maintaining API reliability.

Understanding Coverage Reports

Coverage reports generated by Specmatic detail the scope of tests conducted. This includes the number of tests run, the types of responses received, and any errors encountered. Understanding these reports helps teams identify gaps in their testing strategy.
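
The kind of aggregation behind such a report can be sketched with `groupBy` and a count per group. The record types and function names below are hypothetical and much simpler than a real report; they only show how gaps (endpoints in the spec that no test touched) fall out of the data.

```kotlin
// Hypothetical result record; a real report carries more detail.
data class TestResult(val endpoint: String, val expectedStatus: Int, val passed: Boolean)

data class Coverage(val total: Int, val passed: Int) {
    val failed: Int get() = total - passed
}

// Groups results per endpoint and counts totals and passes.
fun summarize(results: List<TestResult>): Map<String, Coverage> =
    results.groupBy { it.endpoint }
        .mapValues { (_, group) -> Coverage(group.size, group.count { it.passed }) }

// Endpoints declared in the spec that no test exercised.
fun uncovered(specEndpoints: Set<String>, results: List<TestResult>): Set<String> =
    specEndpoints - results.map { it.endpoint }.toSet()

fun main() {
    val results = listOf(
        TestResult("POST /orders", 201, passed = true),
        TestResult("POST /orders", 400, passed = false),
        TestResult("GET /orders", 200, passed = true)
    )
    println(summarize(results))
    println(uncovered(setOf("POST /orders", "GET /orders", "DELETE /orders"), results))
}
```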

Iterating on Feedback

With each test run, teams can gather feedback and iterate on their API design and implementation. Addressing issues revealed by the tests ensures that the API evolves to meet its specification and handle real-world scenarios effectively.

Addressing the Combinatorial Explosion of Tests

In software testing, particularly with APIs, one of the most significant challenges is the combinatorial explosion of tests. This occurs when the number of test scenarios grows exponentially with the complexity of the API specification, because every additional parameter multiplies the number of possible combinations. A seemingly simple API can therefore generate thousands of tests: in a telecom example from the talk, a spec of just over 4,000 lines could potentially lead to 70,000 tests.
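
The arithmetic behind the explosion is a simple product: the number of inputs for one operation is the product of the variant counts of its fields. The field counts in this sketch are made up for illustration and are not taken from the telecom spec.

```kotlin
// Number of test inputs for one operation = product of the variant counts of its fields.
fun combinationCount(variantsPerField: List<Int>): Long =
    variantsPerField.fold(1L) { acc, n -> acc * n }

fun main() {
    // Hypothetical request with 10 fields, each with 3 variants (e.g. valid, null, wrong type).
    println(combinationCount(List(10) { 3 })) // 3^10 = 59049
    // Adding just two more such fields multiplies the total by 9.
    println(combinationCount(List(12) { 3 })) // 3^12 = 531441
}
```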

The sheer volume of tests not only overwhelms testing resources but also risks missing critical validation points. It’s essential to manage this explosion effectively to ensure comprehensive testing without exhausting system resources.

Strategies to Mitigate Combinatorial Explosion

- Test Prioritization: Focus on the most critical paths and functionalities of the API first. This helps in identifying high-impact tests that need immediate attention.

- Parameterized Testing: Utilize parameterized tests to cover multiple input combinations with fewer test cases, reducing redundancy.

- Dynamic Test Generation: Generate tests dynamically based on the current state of the API and its specifications, rather than pre-computing all possible scenarios.

By implementing these strategies, teams can better manage the volume of tests while ensuring that the essential functionalities are thoroughly validated.
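
Test prioritization, the first strategy above, can be as small as a sort and a cut-off. In this sketch the `impact` score and the `budget` parameter are assumptions; how a team assigns impact is outside the scope of the example.

```kotlin
// impact: a hypothetical 1..10 score assigned by the team.
data class Scenario(val name: String, val impact: Int)

// Run the highest-impact scenarios first and cap the run at a fixed budget.
fun prioritize(scenarios: List<Scenario>, budget: Int): List<Scenario> =
    scenarios.sortedByDescending { it.impact }.take(budget)

fun main() {
    val all = listOf(
        Scenario("update profile picture", 2),
        Scenario("create payment", 10),
        Scenario("list orders", 6)
    )
    println(prioritize(all, budget = 2).map { it.name }) // [create payment, list orders]
}
```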

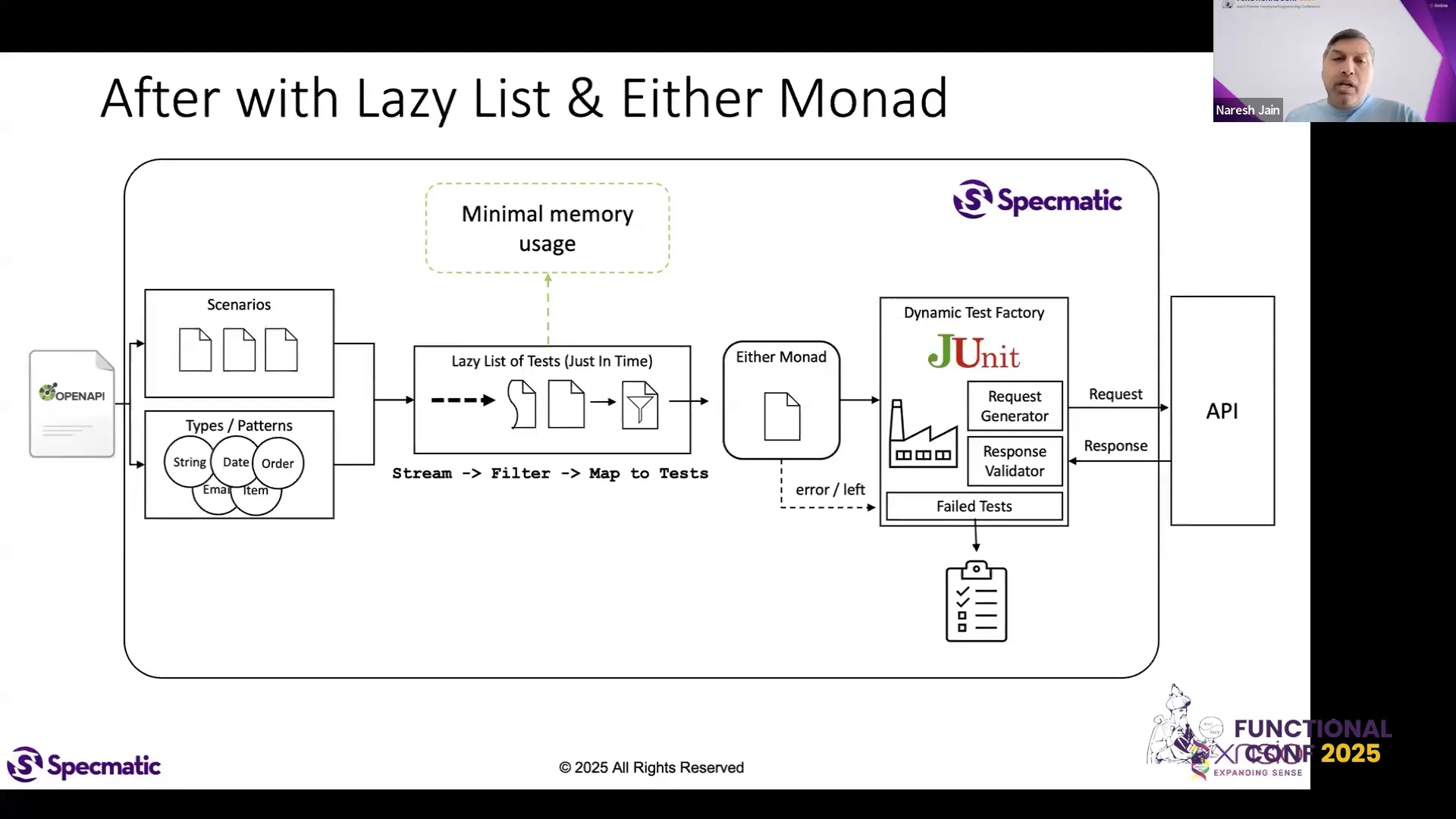

Optimizing Memory Usage with Lazy Lists

Memory optimization is crucial when dealing with large sets of tests. The traditional eager evaluation approach can lead to excessive memory consumption, especially when generating a vast number of test cases. Instead, leveraging lazy lists can significantly improve memory efficiency.

Lazy lists allow for the evaluation of test cases only when needed, thus preventing the pre-computation of all possible scenarios. This approach not only conserves memory but also optimizes CPU usage by delaying the creation of test objects until they are explicitly required.
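
The difference is easy to observe by counting how many test objects get built. In this sketch `Counter`, `GeneratedTest`, `eagerTests`, and `lazyTests` are hypothetical names; the only library feature relied on is that `asSequence().map { ... }` defers the mapping until an element is requested.

```kotlin
class Counter { var built = 0 }

data class GeneratedTest(val id: Int)

// Eager: every test object exists before the first one runs.
fun eagerTests(n: Int, counter: Counter): List<GeneratedTest> =
    (1..n).map { counter.built++; GeneratedTest(it) }

// Lazy: the mapping runs only when a terminal operation asks for an element.
fun lazyTests(n: Int, counter: Counter): Sequence<GeneratedTest> =
    (1..n).asSequence().map { counter.built++; GeneratedTest(it) }

fun main() {
    val eagerCounter = Counter()
    eagerTests(70_000, eagerCounter)
    println("eager built ${eagerCounter.built}")              // 70000

    val lazyCounter = Counter()
    val tests = lazyTests(70_000, lazyCounter)
    println("lazy built ${lazyCounter.built} up front")       // 0
    tests.first()
    println("lazy built ${lazyCounter.built} after first()")  // 1
}
```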

Benefits of Lazy Evaluation

- Reduced Memory Footprint: By only loading test cases into memory when they are executed, lazy lists help in managing memory usage effectively.

- Improved Performance: The system can process tests more efficiently, focusing on relevant cases and avoiding unnecessary computations.

- Enhanced Flexibility: Lazy evaluation allows for dynamic adjustments to the test strategy based on real-time API behavior and responses.

The Shift from Eager to Lazy Evaluation

The transition from eager to lazy evaluation represents a fundamental shift in how we approach API testing. Previously, tests were constructed and evaluated upfront, leading to significant memory overhead and potential performance bottlenecks. By adopting lazy evaluation, we can streamline the testing process and improve overall efficiency.

With lazy evaluation, the test generation process is encapsulated within a monad, which allows failures to be handled gracefully without overwhelming the system with pre-computed test cases. This redesign not only mitigates memory issues but also simplifies the execution pipeline, making it easier to manage and maintain.
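
The article does not say which monadic type is used, so the sketch below substitutes Kotlin's standard `Result` as a stand-in. It shows the general shape: each generation step yields either a test or a captured failure, and the sequence keeps going past a bad element. `generate` and `generateAll` are hypothetical names.

```kotlin
data class GeneratedTest(val name: String)

// Generation may fail for a given schema fragment; runCatching captures the failure as a value.
fun generate(fragment: String): Result<GeneratedTest> = runCatching {
    require(fragment.isNotBlank()) { "empty schema fragment" }
    GeneratedTest("test for $fragment")
}

// Lazily maps each fragment to its outcome; one bad fragment does not abort the rest.
fun generateAll(fragments: Sequence<String>): Sequence<Result<GeneratedTest>> =
    fragments.map(::generate)

fun main() {
    generateAll(sequenceOf("name", "", "age")).forEach { outcome ->
        outcome.fold(
            onSuccess = { println("run: ${it.name}") },
            onFailure = { println("skipped: ${it.message}") }
        )
    }
}
```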

Implementing Lazy Evaluation in Practice

To implement lazy evaluation, developers can leverage Kotlin sequences (`Sequence<T>`), which evaluate elements lazily, one at a time, instead of materializing a whole collection. This allows the system to generate test cases on the fly as they are needed, rather than holding all possible cases in memory at once.

Additionally, using filters within this lazy structure enables developers to refine which tests are executed based on current conditions, further optimizing resource usage.
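
A minimal sketch of filtering inside a lazy pipeline, using the standard `sequence { ... }` builder. The `Candidate` type and the `candidates` and `select` functions are hypothetical; the point is that `filter` is applied per element as it is produced and `take` stops generation early.

```kotlin
data class Candidate(val path: String, val method: String, val negative: Boolean)

// Hypothetical generator: yields candidates one at a time instead of building a list.
fun candidates(paths: List<String>): Sequence<Candidate> = sequence {
    for (path in paths) for (method in listOf("GET", "POST")) {
        yield(Candidate(path, method, negative = false))
        yield(Candidate(path, method, negative = true))
    }
}

// The filter runs per element; take(limit) ends the pipeline once enough tests are selected.
fun select(paths: List<String>, method: String, limit: Int): List<Candidate> =
    candidates(paths).filter { it.method == method }.take(limit).toList()

fun main() {
    select(listOf("/orders", "/products"), method = "POST", limit = 3).forEach { println(it) }
}
```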

Final Thoughts and Best Practices

As we navigate the complexities of API testing, especially in scenarios involving extensive specifications, it’s critical to adopt strategies that enhance both efficiency and effectiveness. The shift to lazy evaluation, combined with dynamic test generation and prioritization, can significantly improve our testing frameworks.

Here are some best practices to consider:

- Embrace Lazy Evaluation: Transition to lazy lists to optimize memory usage and improve performance.

- Focus on Critical Tests: Prioritize test cases based on their impact on the API’s functionality and reliability.

- Iterate on Test Strategies: Continuously evaluate and refine your testing approach to adapt to changing specifications and requirements.

Frequently Asked Questions

What is the main difference between eager and lazy evaluation?

Eager evaluation computes all possible test cases upfront, leading to high memory usage. In contrast, lazy evaluation generates test cases only when needed, optimizing resource consumption.

How can I implement lazy testing in my API tests?

Utilize sequences in Kotlin to create lazy lists that evaluate test cases at execution time. This allows for efficient memory management and dynamic test generation.

What are the benefits of using lazy lists in testing?

Lazy lists reduce memory footprint, improve performance by avoiding unnecessary computations, and offer enhanced flexibility in test execution.

This article was created from the video Testing API Resiliency using Kotlin: Leveraging FP at Scale by Naresh Jain #FnConf 2025 with the help of AI.