Reliable Kafka-Lambda Data Pipelines with AsyncAPI & Specmatic

11 Dec 2025

Paris, France

Summary

Event-driven architectures built with Kafka and AWS Lambda are increasingly popular for powering real-time data pipelines for analytics. However, ensuring reliable data transformations and compatibility between event-driven services presents two critical challenges: how to verify event schemas across isolated Lambda functions, and how to streamline local testing without continuous deployments to AWS.

In this talk, we’ll share practical lessons from real-world experiences building Kafka-Lambda pipelines. We’ll demonstrate how to model Kafka topics and event schemas clearly using AsyncAPI 3.0’s request-reply pattern. Then, leveraging Specmatic for automated contract testing, we’ll show how each Lambda function’s input-output compatibility can be rigorously verified in isolation. Lastly, we’ll highlight the use of LocalStack for rapid local development, significantly improving developer productivity and shortening feedback loops.

Attendees will walk away with a clear strategy and proven tools to:

• Eliminate data schema compatibility issues before they reach production

• Accelerate the development process through effective local testing

• Build robust, contract-driven Kafka-Lambda integrations using AsyncAPI and Specmatic

Join us to unlock the full potential of reliable, maintainable, and developer-friendly real-time event-driven data pipelines.

Transcript

Building resilient Kafka-Lambda data pipelines means catching problems early, giving engineers targeted feedback, and avoiding surprises during integration. When external systems push events into Kafka and a chain of Lambda functions transforms and forwards those events toward warehousing systems like Snowflake or Databricks, a small mismatch can ripple across dozens of processing steps. This article lays out a practical pattern using AsyncAPI and Specmatic to make each step of a Kafka-Lambda data pipeline observable, testable, and contract-driven.

Table of Contents

- The challenge: opaque pipelines and late discovery

- Core idea: make specifications executable and tests automatic

- How it works in practice

- Live demo walkthrough and useful screenshots

- AsyncAPI and Specmatic: practical snippets

- Testing strategy: unit, component, and end-to-end

- Contract-driven development and team workflows

- Handling multi-protocol, schemas, and messaging patterns

- CI, governance, and insights

- Implementation checklist for teams

- Common pitfalls and how to avoid them

- What you get

- Getting started: minimal steps to run a first contract test

- Real-world advice for adoption

- Further reading and references

- FAQ

- Closing notes

The challenge: opaque pipelines and late discovery

Many teams end up with long Kafka-Lambda Data Pipelines where events flow through a series of transformations. Typical characteristics:

- Multiple Lambda functions (commonly 30 to 35 in large setups) each performing small transformations.

- Heterogeneous ownership where different teams or vendors own different functions.

- Mixed payload formats such as XML/XSD coming from external partners and JSON used internally.

- Endpoints for analytics like Snowflake or Databricks at the end of the line.

The painful symptom is always the same: messages in production do not fully arrive, or they arrive with corrupted fields or unexpected states. It is difficult to pinpoint where the problem originated because each Lambda acts as a black box and production logs are noisy. The consequence: long incident resolution times and developer frustration.

Core idea: make specifications executable and tests automatic

Instead of relying solely on ad hoc tests and end-to-end runs, capture every message contract in an AsyncAPI specification and use tooling to turn that specification into:

- Service virtualization: a wire-compatible mock that consumers can use locally.

- Contract tests: dynamically generated tests that validate providers and consumers against the spec.

This approach enables teams to validate each Lambda in isolation as well as run full Kafka-Lambda Data Pipelines end to end with confidence. The key components are AsyncAPI as the specification format and Specmatic as the tool to generate mocks and contract tests from that specification.

How it works in practice

The workflow has three simple phases:

- Model the contract in AsyncAPI: define channels (Kafka topics), message payloads (XSD or JSON Schema), and operation patterns.

- Run a Specmatic mock locally: Specmatic consumes the AsyncAPI and presents a virtual Kafka topic that emits messages matching the schema.

- Execute contract tests: Specmatic generates payloads and posts them to the topic. Your Lambda picks them up (running on LocalStack or another local emulator), processes them, and posts the resulting message to the next topic. Specmatic validates the output automatically.

The result: direct, automated feedback on whether a Lambda is doing exactly what the specification says. No manual test vectors, no guesswork, and no dependency on the entire pipeline being deployed.
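The three phases can be pictured with a small in-memory harness. This is only a sketch under stated assumptions: `queue.Queue` objects stand in for Kafka topics, and `run_contract_test`, `cancel_handler`, `check_process_cancel`, and the message fields are hypothetical names for illustration, not part of Specmatic.

```python
import queue
from typing import Callable, Optional

Message = dict


def run_contract_test(
    handler: Callable[[Message], Optional[Message]],
    sample_input: Message,
    validate_output: Callable[[Message], list],
    timeout_s: float = 0.1,
) -> list:
    """Publish one input, let the handler process it, validate the output.

    Returns a list of violation strings; an empty list means the test passed.
    """
    topic_in: queue.Queue = queue.Queue()   # stand-in for the input topic
    topic_out: queue.Queue = queue.Queue()  # stand-in for the output topic

    # The test tool publishes a generated message to the input topic.
    topic_in.put(sample_input)

    # The function under test consumes it and may produce an output message.
    result = handler(topic_in.get(timeout=timeout_s))
    if result is not None:
        topic_out.put(result)

    # The test tool waits for the output and checks it against the contract.
    try:
        produced = topic_out.get(timeout=timeout_s)
    except queue.Empty:
        return ["no message arrived on the output topic"]
    return validate_output(produced)


def cancel_handler(msg: Message) -> Message:
    # Hypothetical function under test.
    return {"correlationId": msg["correlationId"], "status": "in-progress"}


def check_process_cancel(msg: Message) -> list:
    # Hypothetical contract check for the output message.
    problems = []
    if "correlationId" not in msg:
        problems.append("missing correlationId")
    if msg.get("status") not in ("in-progress", "failed"):
        problems.append(f"unexpected status: {msg.get('status')!r}")
    return problems


violations = run_contract_test(
    cancel_handler, {"correlationId": "abc-123"}, check_process_cancel
)
print(violations)
```

In a real setup the queues are Kafka topics, the handler is a deployed Lambda, and the generated input and the validation both come from the AsyncAPI specification rather than hand-written functions.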

Typical message flow

Imagine a single step in a Kafka-Lambda data pipeline:

- An external system posts an orderCancel event in XML (XSD validated) to topic A.

- A Lambda consumes from topic A, converts XML to JSON, fills defaults, and sets a processing status.

- The Lambda publishes a processCancel event to topic B, which downstream services consume.

With the AsyncAPI spec in place, Specmatic can generate an XML payload that matches the XSD, publish it to topic A, wait for the corresponding processCancel message on topic B, and validate that fields such as correlation IDs and status values match expectations.
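As a rough illustration of the transformation in this step, the sketch below converts an orderCancel XML payload into a processCancel JSON payload. The element and field names (`orderId`, `correlationId`, `reason`), the default value, and the function name are assumptions made for this example; the real shapes would come from the XSD and JSON Schema.

```python
import json
import xml.etree.ElementTree as ET

DEFAULT_REASON = "unspecified"  # hypothetical default for a missing field


def order_cancel_to_process_cancel(xml_payload: str) -> str:
    """Convert an orderCancel XML document into a processCancel JSON string."""
    root = ET.fromstring(xml_payload)
    order_id = root.findtext("orderId")
    correlation_id = root.findtext("correlationId")
    if not order_id or not correlation_id:
        raise ValueError("orderId and correlationId are required")

    event = {
        "orderId": order_id,
        "correlationId": correlation_id,  # carried over unchanged
        "reason": root.findtext("reason") or DEFAULT_REASON,  # fill default
        "status": "in-progress",  # processing status set by this step
    }
    return json.dumps(event)


sample = """<orderCancel>
  <orderId>42</orderId>
  <correlationId>abc-123</correlationId>
</orderCancel>"""

print(order_cancel_to_process_cancel(sample))
```

Keeping the transformation in a pure function like this makes it easy to exercise without Kafka, while the contract test covers the same logic through the real topics.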

Live demo walkthrough and useful screenshots

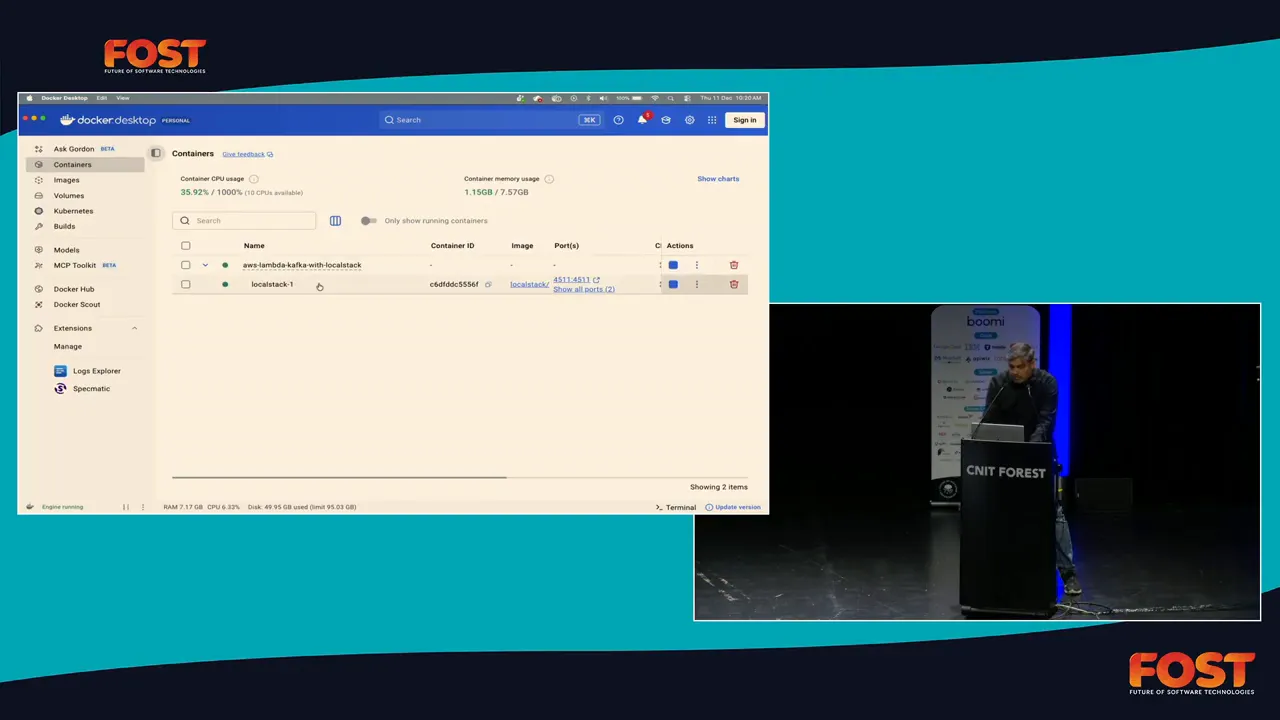

The demonstration environment typically uses LocalStack to host Lambda and Kafka locally so that you avoid repeated cloud deployments. Here are key moments to replicate when you build a similar setup.

The dev environment shows LocalStack hosting the Lambda functions. Use this environment to run contract tests faster and cheaper than deploying to the cloud.

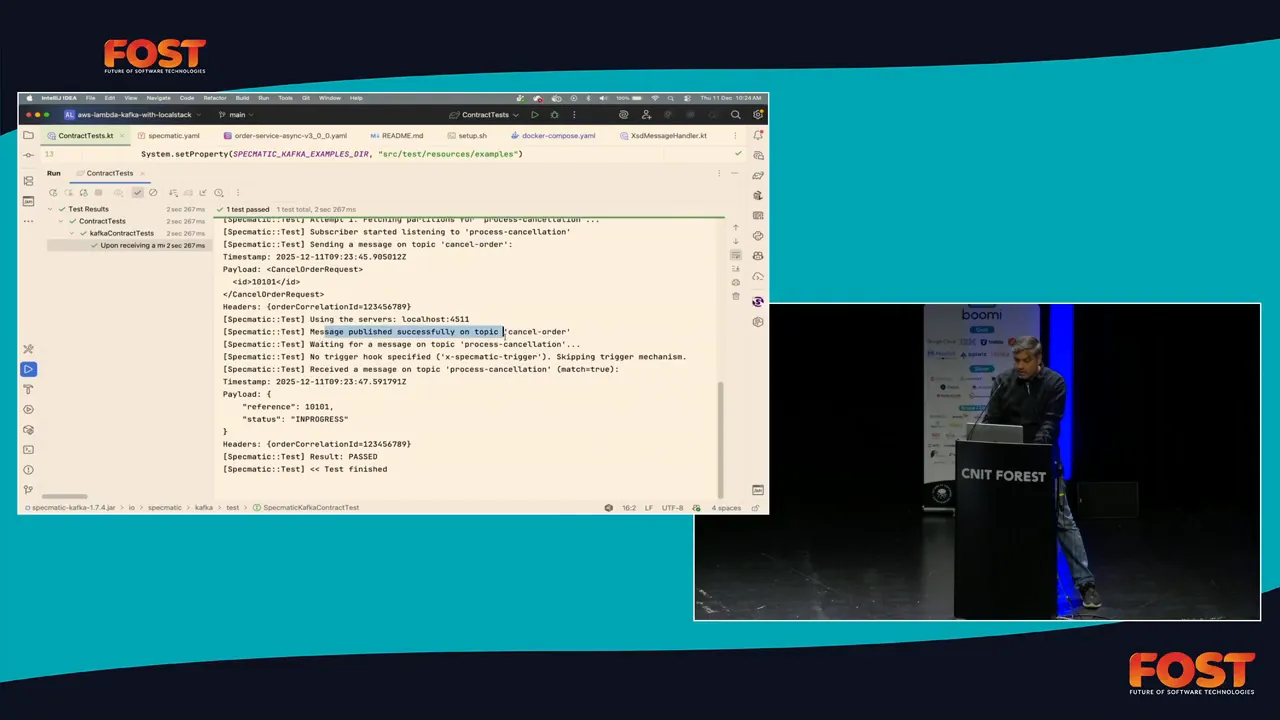

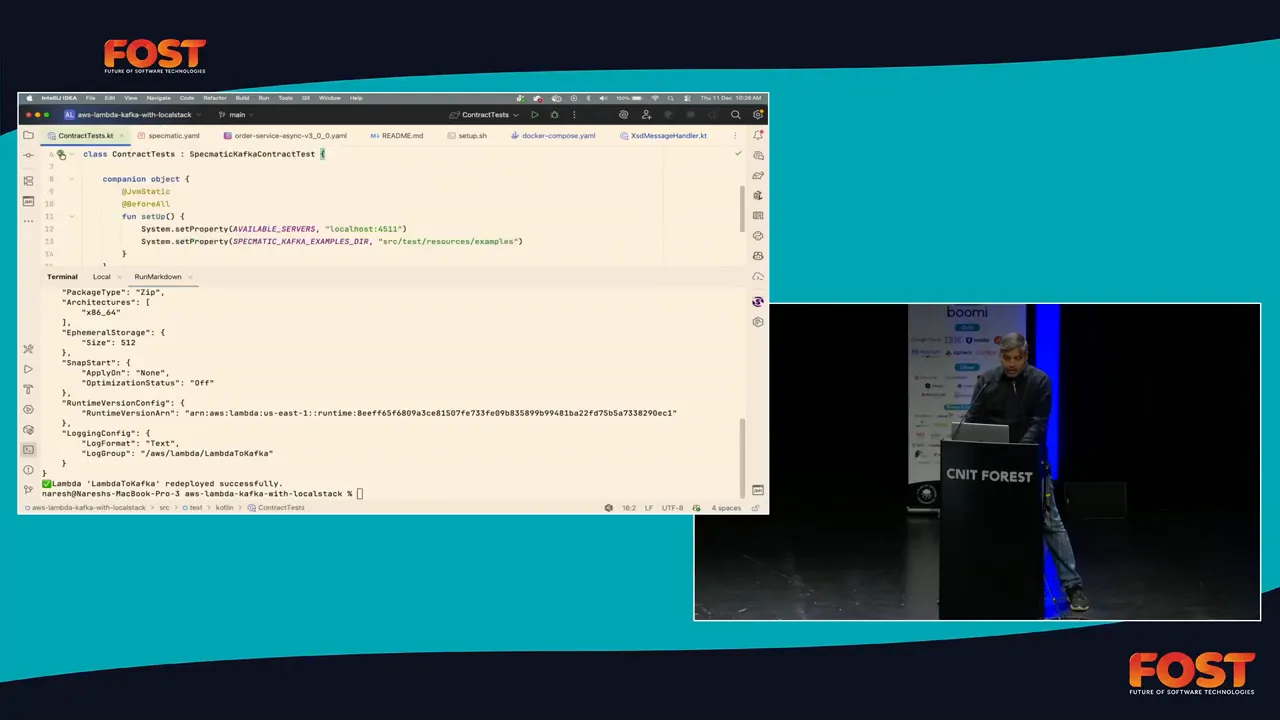

Start a contract test with Specmatic. Initially, the test may look like a minimal setup that simply references an AsyncAPI specification and a Specmatic YAML file that points to the AsyncAPI. Specmatic will generate a message and post it to the configured Kafka topic.

After publishing the generated payload to the orderCancel topic, Specmatic waits and listens for the expected processCancel message on the downstream topic. Validation includes matching correlation IDs and schema conformance.

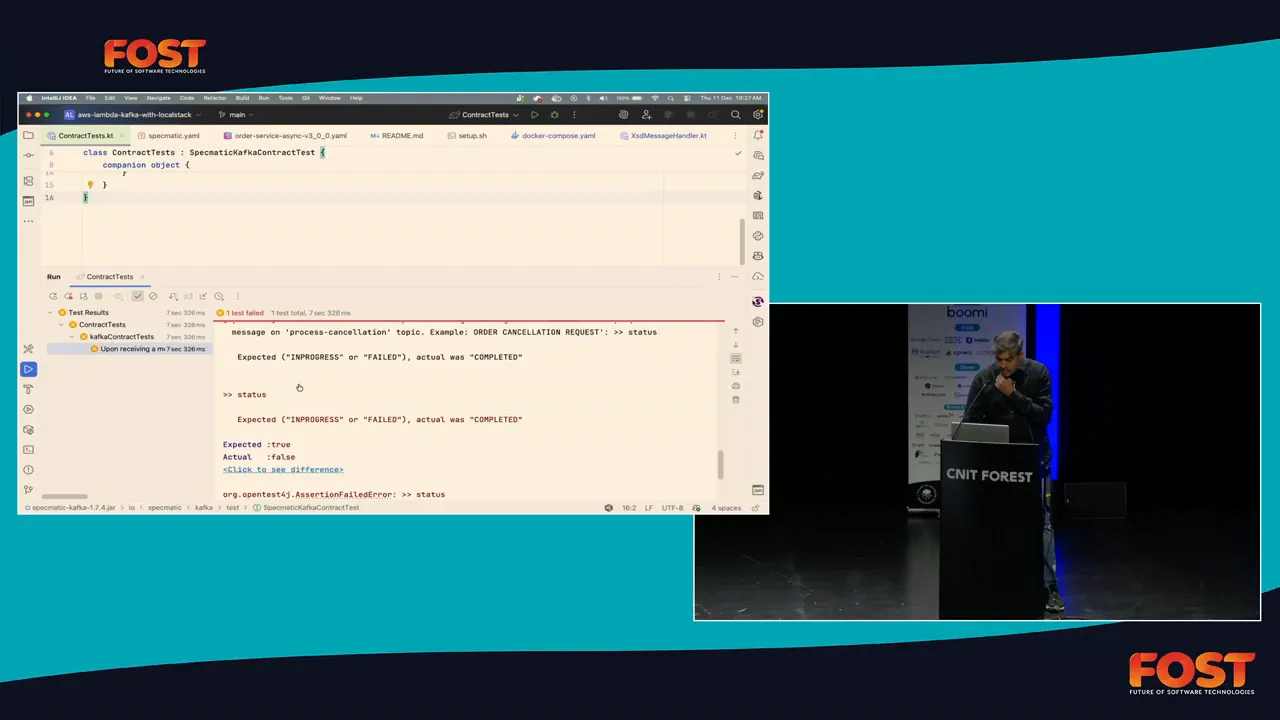

To test the safety net, intentionally modify the Lambda to produce an incorrect status value. Redeploy the Lambda to LocalStack and rerun the contract test. Specmatic will detect the mismatch and fail the test immediately, showing the expected values versus actual values.

The output clearly indicates the mismatch: the test expected “in-progress” or “failed”, but the Lambda set the status to “completed”. This rapid feedback stops a faulty function before it is merged and reaches production.
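The kind of check behind that failure can be sketched in a few lines. This is not Specmatic's implementation, only an illustration of an enum constraint producing an expected-versus-actual message; `ALLOWED_STATUSES` and `status_violation` are hypothetical names.

```python
from typing import Optional

# The two values the demo contract allows for the status field.
ALLOWED_STATUSES = ("in-progress", "failed")


def status_violation(message: dict) -> Optional[str]:
    """Return a description of the mismatch, or None if the status is allowed."""
    actual = message.get("status")
    if actual in ALLOWED_STATUSES:
        return None
    expected = " or ".join(repr(s) for s in ALLOWED_STATUSES)
    return f"status: expected {expected}, got {actual!r}"


print(status_violation({"status": "completed"}))
```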

AsyncAPI and Specmatic: practical snippets

Below are minimal examples to illustrate the pieces you would put into source control for a single step in a Kafka-Lambda data pipeline.

The AsyncAPI channel definitions reference the incoming XML payload validated by an XSD and the outgoing JSON payload validated by a JSON Schema. Keep these schemas as the canonical contract between teams.

```yaml
asyncapi: '2.0.0'
info:
  title: order-processing
  version: '1.0.0'
channels:
  order.cancel:
    subscribe:
      message:
        contentType: application/xml
        payload:
          $ref: './schemas/order-cancel.xsd'
  process.cancel:
    publish:
      message:
        contentType: application/json
        payload:
          $ref: './schemas/process-cancel.json'
```

Example Specmatic YAML that points to the AsyncAPI spec and instructs Specmatic how to run a contract test against Kafka.

```yaml
specmatic:
  asyncapi: ./asyncapi.yaml
  runner: kafka
  kafka:
    brokers: localhost:9092
    publishChannel: order.cancel
    subscribeChannel: process.cancel
```

With these artifacts in a repository, Specmatic can:

- Generate synthetic XML messages conforming to the XSD.

- Post those messages to the order.cancel Kafka topic.

- Wait for and validate messages on the process.cancel topic against the JSON schema.

Testing strategy: unit, component, and end-to-end

A robust approach to Kafka-Lambda Data Pipelines divides testing effort into layers:

1. Per-function contract tests

Each Lambda owner should have an AsyncAPI entry for the Lambda’s input and output. Specmatic generates tests automatically from the spec so the provider is validated against consumer expectations. These tests are cheap, fast, and run during development.

2. Integration tests with local emulation

Use LocalStack or similar tools to emulate AWS services locally and run multiple Lambda functions as they would operate in production. This is where Specmatic’s virtualization helps because you can mock upstream or downstream services while testing a single Lambda.

3. End-to-end pipeline tests

Once building blocks are stable, run full pipeline tests. Use an AsyncAPI spec that models the first and last topics. Post a message to the first topic, let all Lambdas process it, and validate the final message. End-to-end tests catch integration surprises that per-function tests might miss.

Important note: end-to-end tests are expensive and brittle. Use them sparingly and reserve them for critical flows and release gates. Per-function contract tests are the most effective early-warning mechanism.

Contract-driven development and team workflows

Contract-driven development makes the API specification the single source of truth. Treat AsyncAPI like code:

- Store specs in a central repository and enforce changes via pull requests.

- Automate linting and example validation so specs are correct before they are merged.

- Run backward compatibility checks to avoid breaking consumers.

A practical compatibility check process:

- Take the incoming spec change as the candidate spec and run it as a mock.

- Use the existing spec version to generate consumer tests.

- Run those tests against the candidate mock; if they pass, the new spec is backward compatible.
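To make the idea concrete, here is a heavily simplified sketch of a compatibility check from the viewpoint of a consumer that reads messages. The schema representation (a dict of field name to type, required flag, and optional enum) and the function `breaking_changes` are assumptions for this example; a real check works on the full specification by running generated tests against the candidate mock, as described above.

```python
def breaking_changes(old: dict, new: dict) -> list:
    """List changes that would break a consumer written against `old`."""
    problems = []
    for name, old_field in old.items():
        new_field = new.get(name)
        if new_field is None:
            if old_field.get("required"):
                problems.append(f"{name}: required field was removed")
            continue
        if new_field["type"] != old_field["type"]:
            problems.append(
                f"{name}: type changed from {old_field['type']} to {new_field['type']}"
            )
        if old_field.get("required") and not new_field.get("required"):
            problems.append(f"{name}: no longer guaranteed to be present")
        if "enum" in old_field:
            if "enum" not in new_field:
                problems.append(f"{name}: enum constraint was dropped")
            else:
                added = sorted(set(new_field["enum"]) - set(old_field["enum"]))
                if added:
                    problems.append(f"{name}: new enum values {added}")
    return problems


current = {
    "correlationId": {"type": "string", "required": True},
    "status": {"type": "string", "required": True, "enum": ["in-progress", "failed"]},
}
candidate = {
    "correlationId": {"type": "string", "required": True},
    "status": {
        "type": "string",
        "required": True,
        "enum": ["in-progress", "failed", "completed"],
    },
    "note": {"type": "string", "required": False},  # additive and optional: allowed
}

for problem in breaking_changes(current, candidate):
    print(problem)
```

In this example the new optional field passes, while the extra status value is flagged because an existing consumer has never been told it can occur.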

This approach finds breaking changes during design time instead of after deployment. It also enables multiple teams to work in parallel on the same Kafka-Lambda Data Pipelines because consumers get a virtualised service they can code against from day 0.

Handling multi-protocol, schemas, and messaging patterns

Kafka-Lambda Data Pipelines often coexist with AMQP, MQTT, or cloud pub/sub. The AsyncAPI spec supports multi-protocol modeling, but you must standardize schema formats and naming conventions across your organization. Consider these principles:

- Standardize schema format (JSON Schema, XSD, or Protobuf) for different teams so validation is consistent.

- Decide messaging patterns up front: event notification (fire-and-forget), asynchronous request/response, fan-out publish/subscribe, and event streams all have different characteristics and validation needs.

- Capture protocol-specific constraints such as Kafka topic naming, partitions, and security settings in an operational layer of your spec or documentation.

For cross-protocol architectures, keep the contract focused on message shape and behavior, and manage protocol wiring separately in deployment manifests.

CI, governance, and insights

Integrate Specmatic-generated tests into CI pipelines. Typical flow:

- Developer opens a pull request with a spec or provider code change.

- CI runs spec linting and mock-based compatibility checks.

- Specmatic runs generated tests against a mock and then against the provider where possible.

- Results feed into a governance dashboard that tracks API health, contract violations, and dependency graphs.

When contract regressions are detected before merging, you avoid runtime incidents and can enforce API quality systematically. Insights collected during test runs give visibility into the entire pipeline graph and show where failures commonly occur.

Implementation checklist for teams

Use this checklist to roll out contract-driven validation across Kafka-Lambda Data Pipelines:

- Define an AsyncAPI template for the organization.

- Choose a schema standard and document where to store XSD/JSON schema files.

- Set up Specmatic in CI and developer machines.

- Standardize on a local emulation stack like LocalStack for AWS resources.

- Require PRs for spec changes with automated compatibility checks.

- Train teams to run per-function contract tests during development.

- Run end-to-end tests for critical flows before releases.

Common pitfalls and how to avoid them

Not modeling correlation

Without explicit correlation IDs in the spec, matching request and response messages becomes fragile. Include correlation fields in payload schemas and make sure tests validate them.
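A minimal sketch of correlation-based matching follows, assuming each message carries a `correlationId` field (the field name and the helper `pair_by_correlation` are hypothetical). Messages without an ID cannot be paired and are reported as unmatched, which is exactly the fragility described above.

```python
def pair_by_correlation(requests: list, responses: list, field: str = "correlationId"):
    """Pair each request with one response that has the same correlation ID.

    Returns (pairs, unmatched_requests).
    """
    by_id = {}
    for response in responses:
        cid = response.get(field)
        if cid is not None:
            by_id.setdefault(cid, []).append(response)

    pairs, unmatched = [], []
    for request in requests:
        candidates = by_id.get(request.get(field)) if request.get(field) else None
        if candidates:
            pairs.append((request, candidates.pop(0)))
        else:
            unmatched.append(request)
    return pairs, unmatched


sent = [{"correlationId": "a1"}, {"correlationId": "b2"}, {"orderId": "no-id"}]
received = [{"correlationId": "b2", "status": "failed"}]

pairs, unmatched = pair_by_correlation(sent, received)
print(len(pairs), "matched;", len(unmatched), "unmatched")
```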

Overreliance on end-to-end tests

Do not treat long-running end-to-end tests as the primary safety net. They are too late-stage. Invest heavily in per-function contract tests generated from AsyncAPI to catch issues earlier.

Ignoring protocol constraints

Kafka-specific properties such as partitions, keying, and topic retention can affect behavior. Capture these non-functional constraints in operational docs so developers know how produced messages will be consumed downstream.
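Keying is a good example of why this matters: records with the same key land on the same partition, which is what preserves per-key ordering. The sketch below illustrates the principle only. It uses CRC32 as a stand-in hash, whereas Kafka's default partitioner for keyed records uses murmur2, so the partition numbers will not match a real cluster; `partition_for` is a hypothetical helper.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition (illustrative stand-in hash, not murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Two events for the same order always map to the same partition,
# so a consumer sees them in the order they were produced.
first = partition_for("order-42", 6)
second = partition_for("order-42", 6)
print(first == second)
```

If a Lambda drops or rewrites the key when it republishes, related events can end up on different partitions and downstream consumers may see them out of order.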

What you get

Adopting AsyncAPI and Specmatic for Kafka-Lambda Data Pipelines delivers concrete benefits:

- Early detection of contract mismatches and malformed messages.

- Parallel development because consumers can use virtualized providers.

- Faster incident resolution due to clearer contract boundaries and deterministic tests.

- Better governance through automated checks and insights into API quality.

Getting started: minimal steps to run a first contract test

- Create an AsyncAPI document with one input channel and one output channel for a single Lambda in your Kafka-Lambda data pipeline.

- Store schemas (XSD/JSON Schema) next to the AsyncAPI file in Git.

- Install Specmatic on your machine or in CI.

- Run Specmatic to mock the input topic and generate a message.

- Run your Lambda on LocalStack and let it process the message.

- Let Specmatic validate the output topic message automatically.

Once these steps pass, extend the pattern to other parts of the pipeline. The whole point is that each Lambda can be validated independently while still enabling full pipeline tests when needed.
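For the step where the Lambda processes the message, the sketch below shows a handler unpacking a Kafka batch. It assumes the function is triggered through a Kafka event source mapping, where records arrive grouped under `records` by topic-partition with base64-encoded values. The helper name `decode_kafka_records` and the sample payload are made up for the example, and the transformation and publishing logic is left out.

```python
import base64


def decode_kafka_records(event: dict) -> list:
    """Flatten a Kafka-triggered Lambda event into decoded messages."""
    decoded = []
    for records in event.get("records", {}).values():
        for record in records:
            value = base64.b64decode(record["value"]).decode("utf-8")
            decoded.append({"topic": record["topic"], "value": value})
    return decoded


def handler(event, context):
    messages = decode_kafka_records(event)
    # Transformation and publishing to the next topic would go here.
    return {"processed": len(messages)}


sample_event = {
    "eventSource": "aws:kafka",
    "records": {
        "order.cancel-0": [
            {
                "topic": "order.cancel",
                "partition": 0,
                "offset": 15,
                "value": base64.b64encode(b"<orderCancel/>").decode("ascii"),
            }
        ]
    },
}

print(handler(sample_event, None))
```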

Real-world advice for adoption

Start small. Pick a critical pathway where dropped or mis-processed messages have a cost. Build AsyncAPI specs for the first and last topics and one or two intermediate Lambdas. Use Specmatic to prove the concept and measure the reduction in debugging time.

Expect cultural change: treating contracts like code requires team discipline. Automate everything. Remove manual steps that allow divergence between docs and implementation.

Further reading and references

- AsyncAPI specification documentation for modeling event-driven systems

- Specmatic documentation for converting specs into mocks and contract tests

- LocalStack guides for local AWS emulation

FAQ

How do I get started with contract testing for Kafka-Lambda Data Pipelines?

Create one AsyncAPI spec for a single step and run a Specmatic mock while your Lambda runs on LocalStack. Follow the steps in the "Getting started: minimal steps to run a first contract test" section to set up the minimal environment and iterate from there.

Can AsyncAPI model both XML and JSON payloads in the same pipeline?

Yes. AsyncAPI allows you to reference different schema types per message. You can point to an XSD for an XML input and a JSON Schema for the output. See the "AsyncAPI and Specmatic: practical snippets" section for an example.

What if multiple protocols are in use across the pipeline?

Capture the message shapes and behavioral contracts in AsyncAPI and manage protocol specifics separately. Standardize on a schema format and model protocol constraints in operational documentation referenced from the spec. See the "Handling multi-protocol, schemas, and messaging patterns" section for principles to follow.

How do I ensure backward compatibility when changing a spec?

Run the compatibility workflow described in the "Contract-driven development and team workflows" section: mock the new spec, generate tests from the existing spec, and run them against the new mock. Passing tests indicate backward compatibility.

Where do I run Specmatic tests in CI?

Integrate them at the PR level. Run spec linting and mock-based tests as part of the CI job that validates spec changes and provider implementations. See the "CI, governance, and insights" section for the recommended flow.

Can this approach reduce production incidents?

Yes. By making contracts executable and running per-function contract tests early, you detect mismatches before they reach production, reducing incidents and mean time to recovery.

Closing notes

Kafka-Lambda Data Pipelines are powerful but fragile if contracts are fuzzy and tests are manual. Turning AsyncAPI specifications into executable contracts with Specmatic provides immediate value: automatic tests, local virtualization, parallel development, and clearer governance. Focus on per-function contract testing first, then expand to end-to-end validation for critical flows. That combination dramatically improves reliability and developer confidence across Kafka-Lambda Data Pipelines.