Project API Forge: Streamlining Dev-First API Lifecycle Management

03 Dec 2024

Paris

Summary

Telcos have realised the importance of efficient and resilient API lifecycle management for driving innovation, improving interoperability between telcos, and maintaining competitive advantage. “Project API Forge” at TMForum is an ambitious initiative aimed at streamlining the way APIs are developed, standardized, and managed. This talk will dive into how API Forge is creating a unified, automated, and developer-first API Factory that enhances TMForum’s OpenAPI and AsyncAPI programs.

We will explore the challenges faced in traditional API management and how API Forge addresses these by standardizing tooling and architecture, keeping OpenAPI and AsyncAPI specifications in sync, and enhancing both internal and external developer experiences. Key components of the project include the use of agile/lean methodologies, shift-left practices, and a continuous improvement approach that fosters collaboration, technical excellence, and safe-fail experimentation.

Attendees will gain insights into the technical innovations behind API Forge, including automation strategies, conformance testing enhancements using open source tools like Specmatic, and the synchronization of API artifacts. The session will showcase how these advancements are not only streamlining API lifecycle management but also setting new standards for developer experience, efficiency, and resilience in API creation.

Join us to discover how Project API Forge is shaping the future of API management at TM Forum, providing a blueprint for organizations looking to modernize their API processes and achieve developer-first excellence.

Transcript

Streamlining API Lifecycle Management with Project API Forge

In this session, we dive into Project API Forge, a collaborative effort with TMForum aimed at revolutionising the way we manage APIs. This comprehensive experience report covers the tools and processes involved in creating a streamlined developer experience for API lifecycle management.

Table of Contents

- Introduction to Project API Forge

- Understanding TMForum

- Exploring TMForum’s API Specifications

- The API Factory Concept

- Live Demonstration of Artifact Production

- Understanding the Rule File

- Generating OpenAPI and AsyncAPI Specifications

- Implementing Factory Value Objects

- Generating API Examples

- Validating Examples Against Specifications

- Updating Rule Files with Examples

- Generating the Certification Toolkit and Virtual Service

- Running the Virtual Service

- Contract Testing with the CTK

- Postman Collection and Additional Artifacts

- CI Pipeline and Artifact Publishing

- Open Digital Architecture Components

- API Implementation and Testing Pipelines

- Conclusion and Open Source Tools

Introduction to Project API Forge

Project API Forge represents a significant leap forward in API lifecycle management. It aims to simplify and automate the processes involved in creating, maintaining, and consuming APIs. By collaborating with TMForum, we have developed a framework that enhances the developer experience, making it easier for telcos and vendors to adhere to industry standards.

Understanding TMForum

TMForum is a recognised standards body that plays a crucial role in the telecommunications industry. It supports telcos globally by providing a framework for interoperability through its extensive library of API specifications. With over 120 OpenAPI specifications already launched, TMForum is also progressing towards AsyncAPI specifications, which add further flexibility and capability to API interactions.

The Role of TMForum in API Development

- Standardisation: TMForum standardises APIs to ensure seamless integration and communication between different systems.

- Resource Availability: It provides a comprehensive API directory that includes various assets like OpenAPI specifications and Postman collections.

- Community Collaboration: TMForum encourages collaboration among its members to propose and develop new API standards.

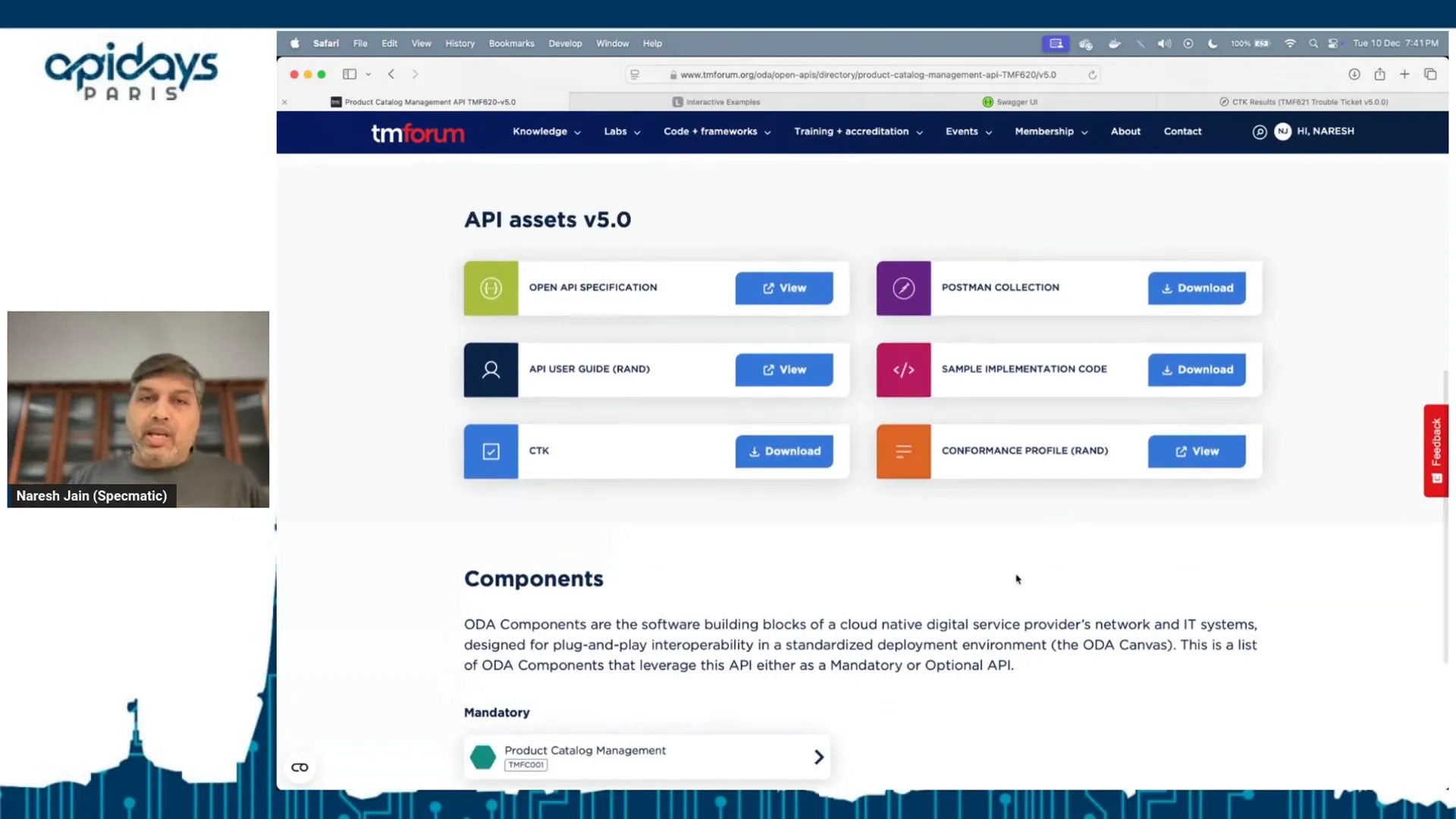

Exploring TMForum’s API Specifications

The API directory of TMForum is a treasure trove of resources. It includes not just the API specifications but also supplementary tools like sample implementations and conformance toolkits. These resources empower developers to create applications that comply with industry standards while reducing the complexity of integration.

Key Components of the API Directory

- OpenAPI Specifications: Machine-readable descriptions of each API's endpoints, operations, and schemas.

- AsyncAPI Specifications: Descriptions of the asynchronous, event-driven interfaces between services.

- Postman Collections: Pre-built collections for testing and interacting with APIs.

- Conformance Toolkit: Tools to certify that implementations meet TMForum standards.

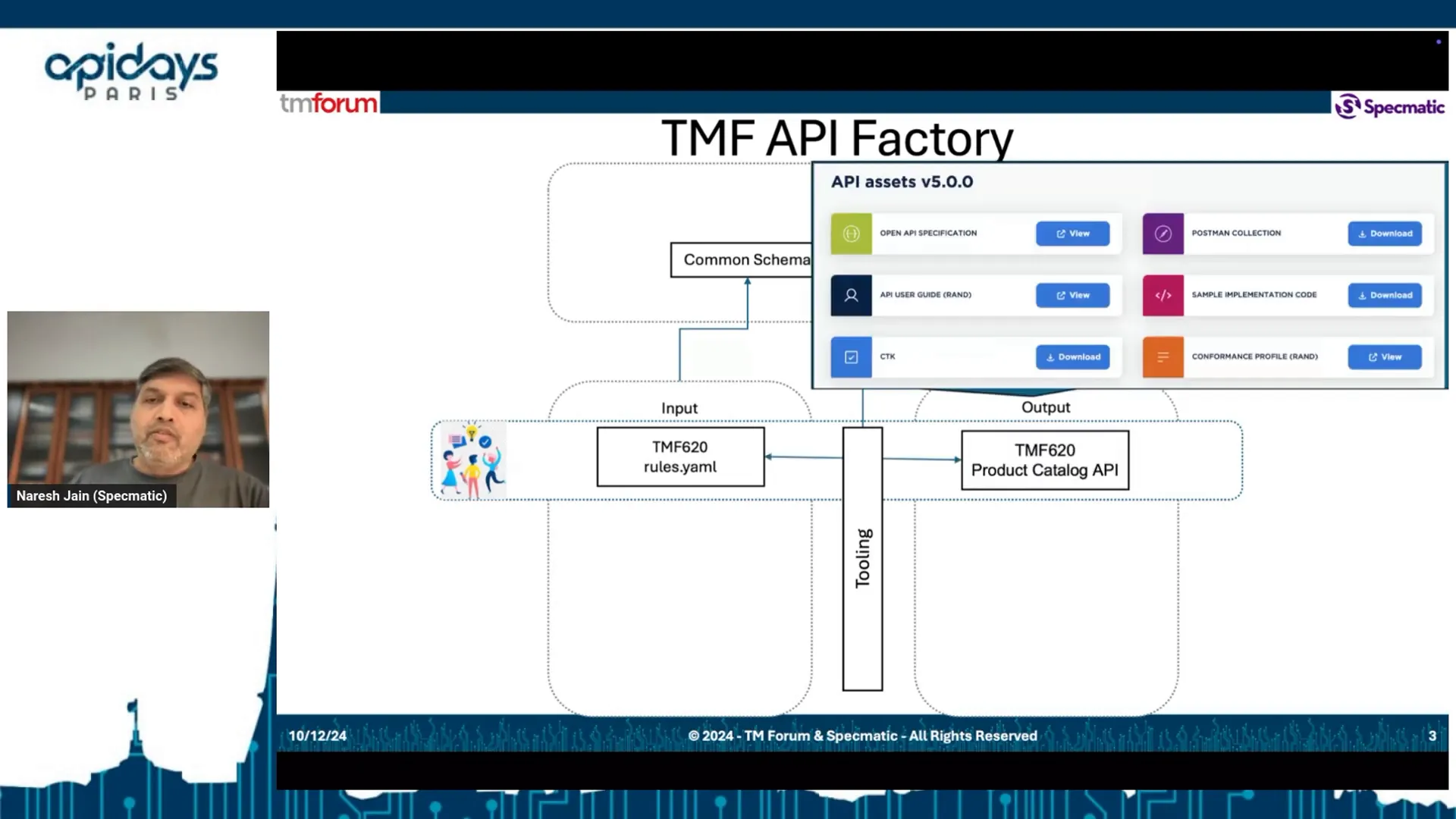

The API Factory Concept

At the heart of Project API Forge lies the API Factory concept. This innovative approach utilises a rule file as an input to generate various API assets automatically. The API Factory streamlines the process, making it scalable and repeatable, allowing multiple members to collaborate on API development efficiently.

How the API Factory Works

The API Factory operates on a set of defined schemas and directories. When a new API standard is proposed, the factory takes the rule file and processes it through automated tooling. This results in the generation of all necessary assets, significantly reducing the time and effort traditionally required for API development.

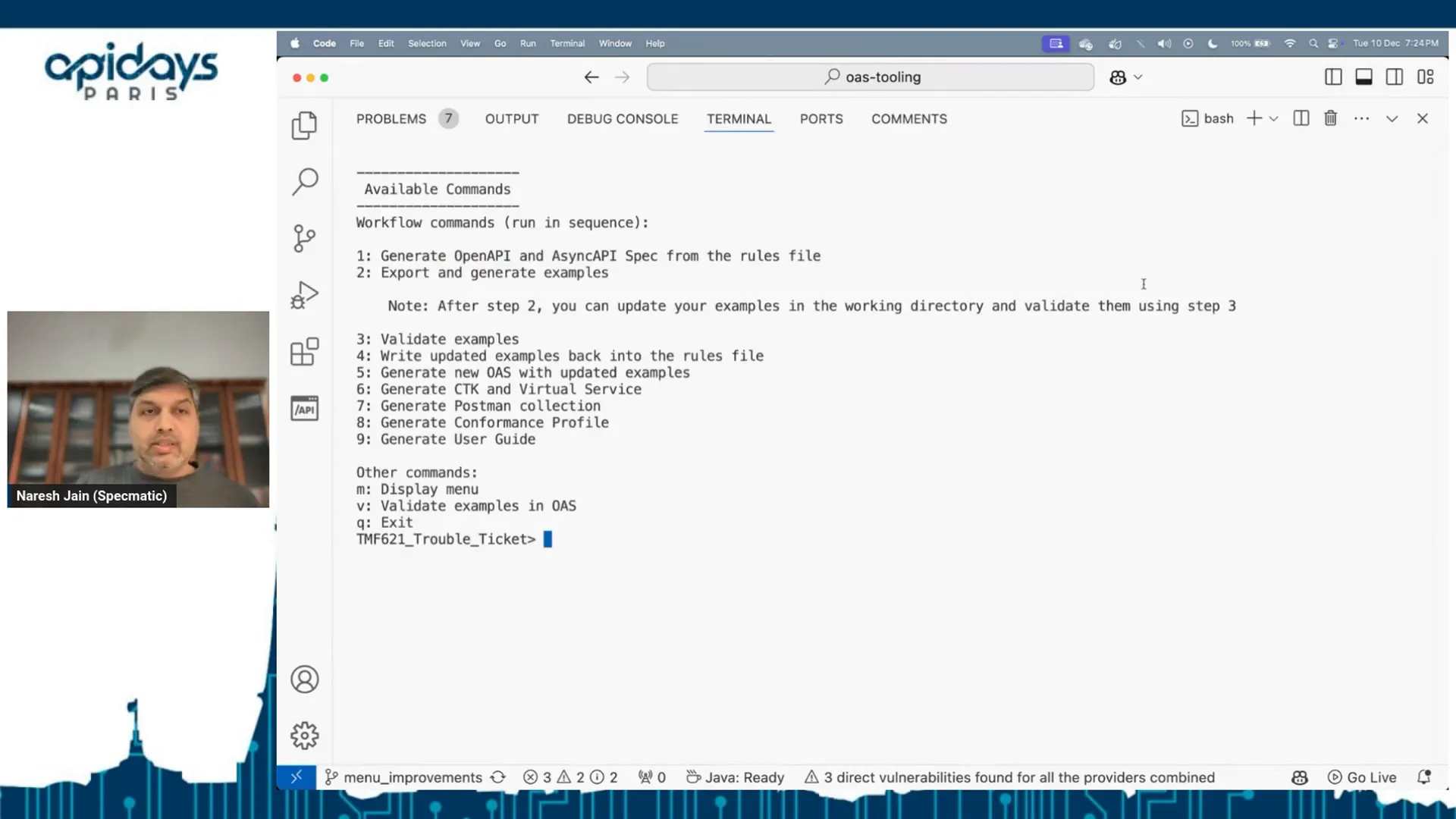

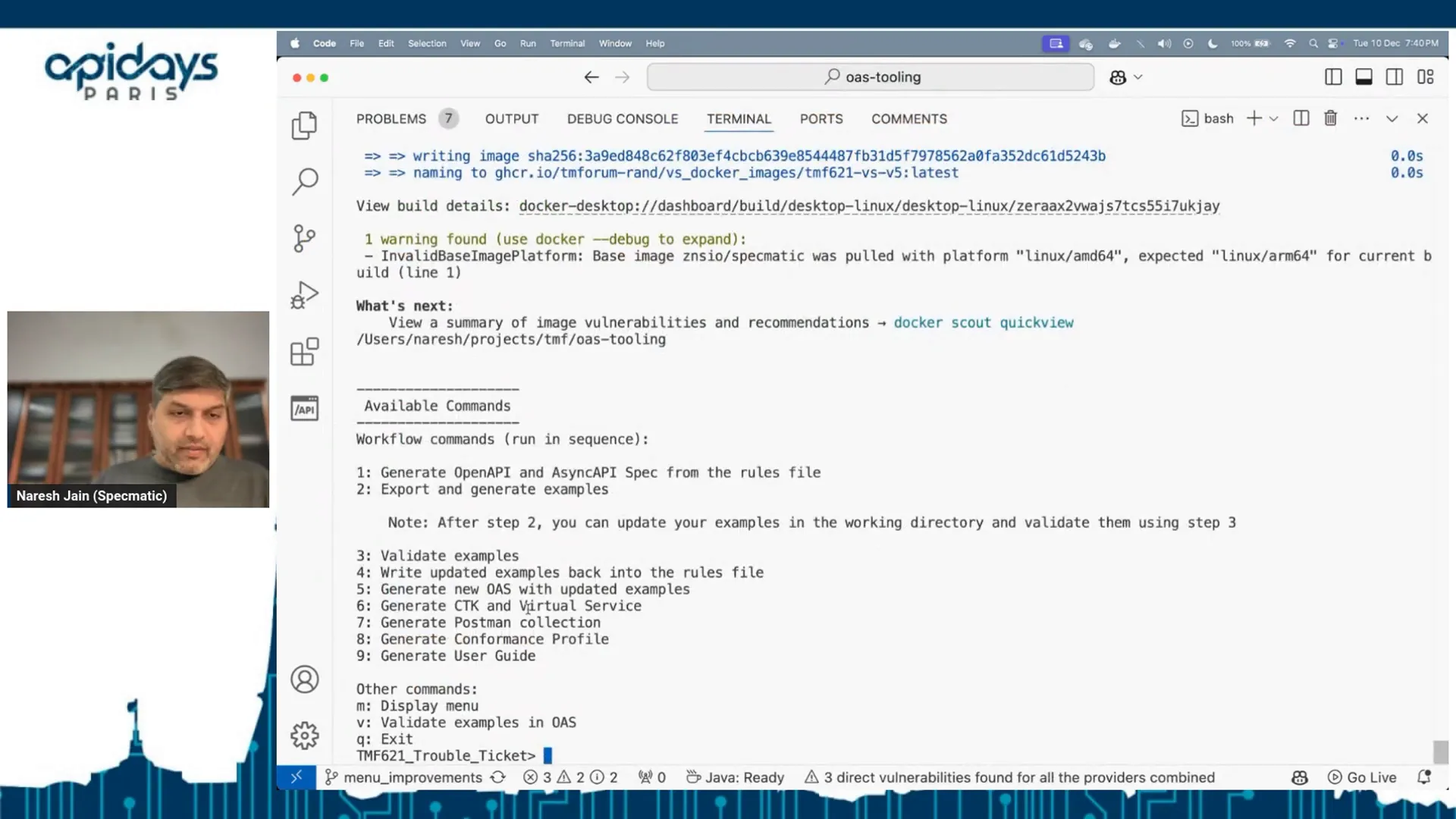

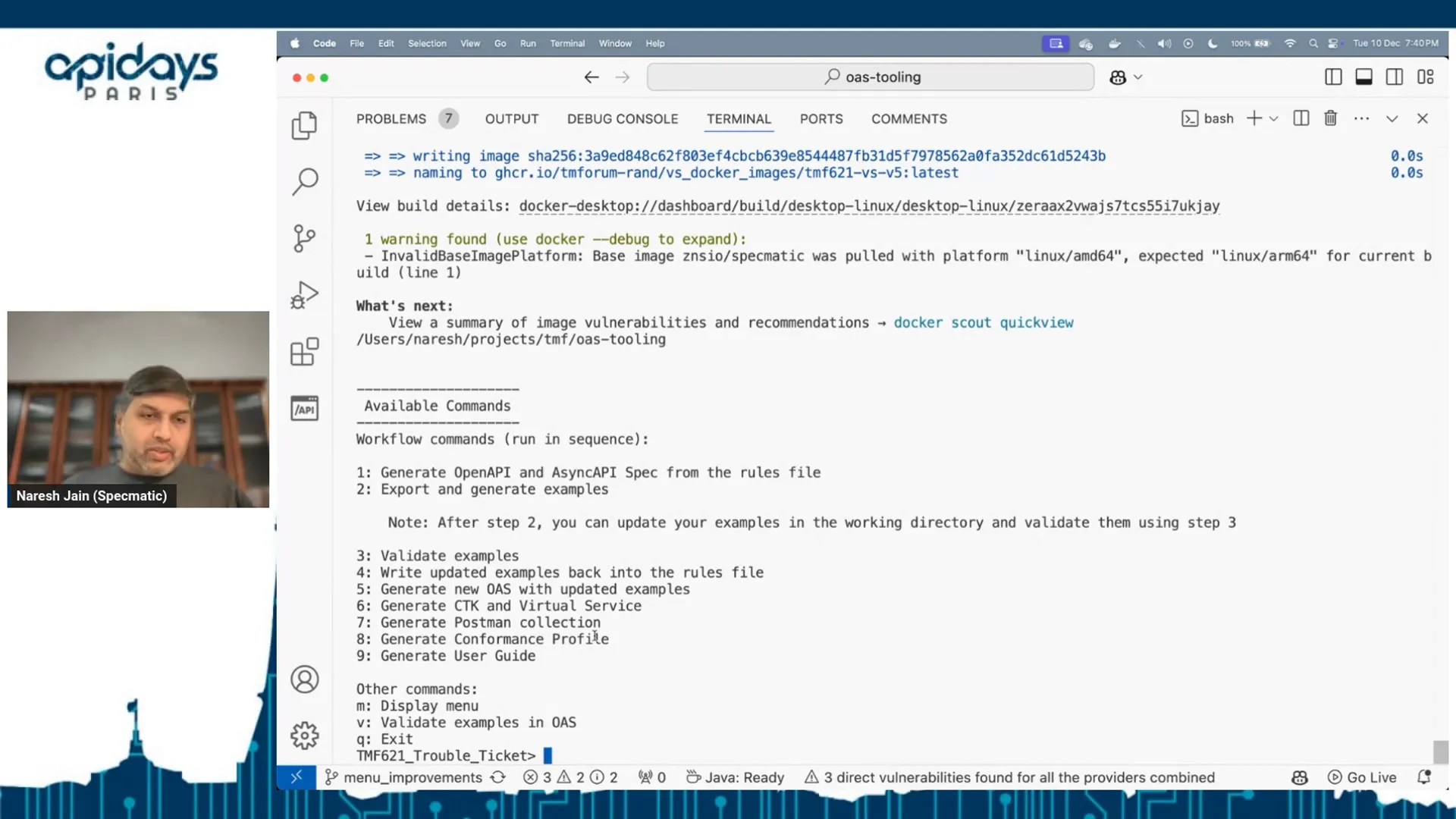

Live Demonstration of Artifact Production

During our session, we conducted a live demonstration showcasing the production of API artifacts using the API Factory. This demonstration illustrated how a simple rule file can lead to the generation of comprehensive documentation and specifications for APIs. The workflow was designed to be intuitive, guiding users from the rule file to the final API specifications seamlessly.

Step-by-Step Process

- Input the rule file defining the API schema and operations.

- Trigger the tooling to generate the OpenAPI and AsyncAPI specifications.

- Review the generated assets in the designated folders.

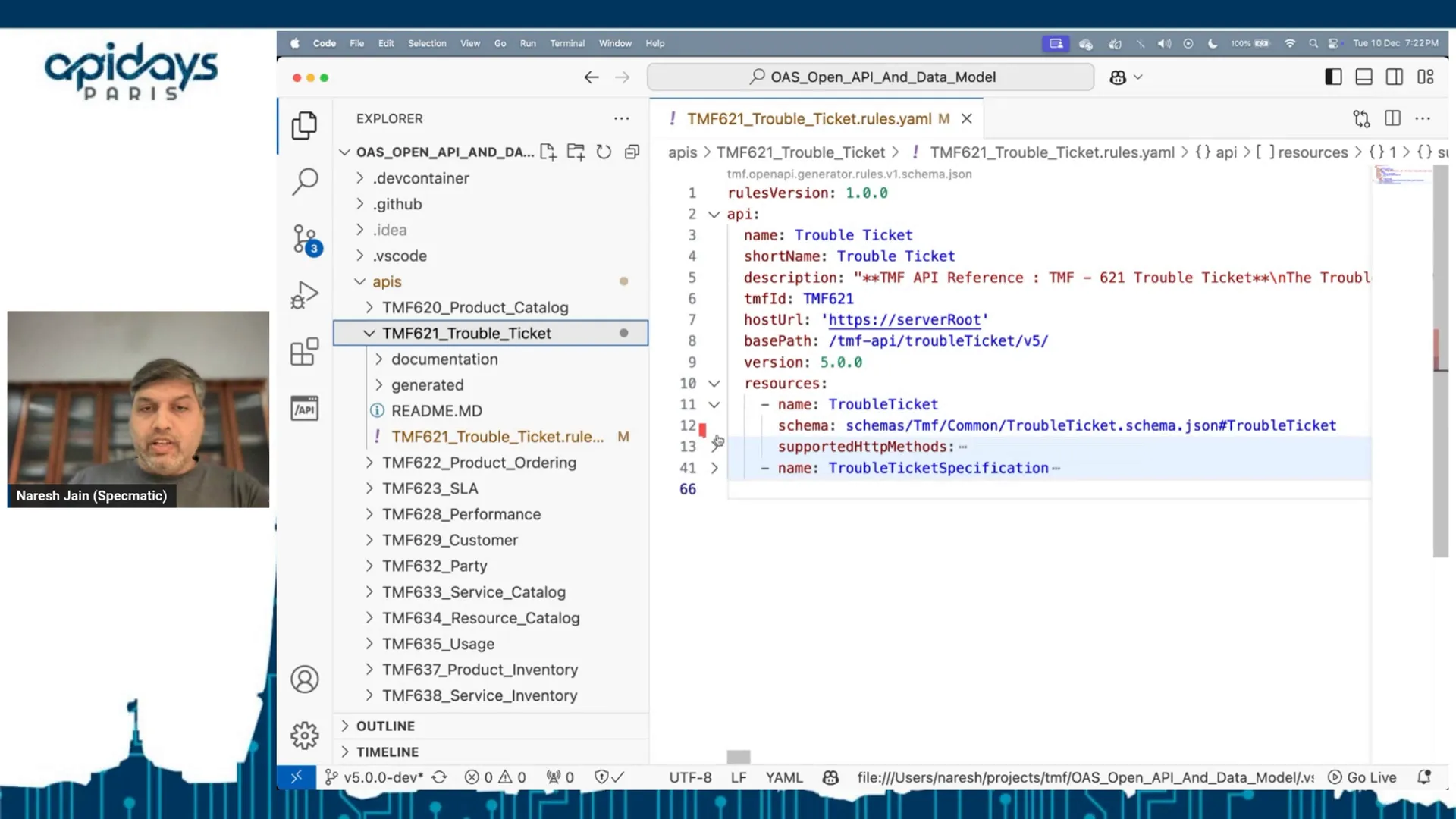

Understanding the Rule File

The rule file is a critical component of the API Factory. It serves as a concise input that defines the API schema and the operations available for each entity. This streamlined format allows developers to specify essential details without the overwhelming complexity often associated with full API specifications.

Components of the Rule File

- Entities: Define the core components of the API, such as trouble tickets or user accounts.

- Schema: Outline the structure and data types associated with each entity.

- Supported Operations: Specify allowed HTTP methods (GET, POST, PATCH, DELETE) and their parameters.
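To make the three components concrete, here is a minimal sketch of what such a rule file might hold, written as a Python dictionary with a small consistency check. The actual rule file format used by the API Factory is not shown in this write-up, so every key name (`entities`, `schema`, `operations`) and the `check_rule_file` helper are hypothetical stand-ins.

```python
# Hypothetical rule file content; the real API Factory format may differ.
RULE_FILE = {
    "api": "TroubleTicket",
    "entities": {
        "TroubleTicket": {
            # Schema: property name -> JSON type, plus the mandatory properties.
            "schema": {
                "properties": {
                    "id": "string",
                    "name": "string",
                    "description": "string",
                    "severity": "string",
                },
                "required": ["name", "description", "severity"],
            },
            # Supported operations for this entity.
            "operations": ["GET", "POST", "PATCH", "DELETE"],
        }
    },
}

ALLOWED_METHODS = {"GET", "POST", "PATCH", "DELETE"}


def check_rule_file(rule: dict) -> list:
    """Return a list of problems found in the rule file (empty if none)."""
    problems = []
    for name, entity in rule.get("entities", {}).items():
        schema = entity.get("schema", {})
        props = schema.get("properties", {})
        # Every mandatory property must also be described in the schema.
        for field in schema.get("required", []):
            if field not in props:
                problems.append(f"{name}: required field '{field}' has no schema")
        # Only the four HTTP methods named above are accepted.
        for method in entity.get("operations", []):
            if method not in ALLOWED_METHODS:
                problems.append(f"{name}: unsupported operation '{method}'")
    return problems


print(check_rule_file(RULE_FILE))  # []
```

The point of the sketch is the size of the input: a few dozen lines describe an entity, its schema, and its operations, and everything else is derived from it.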

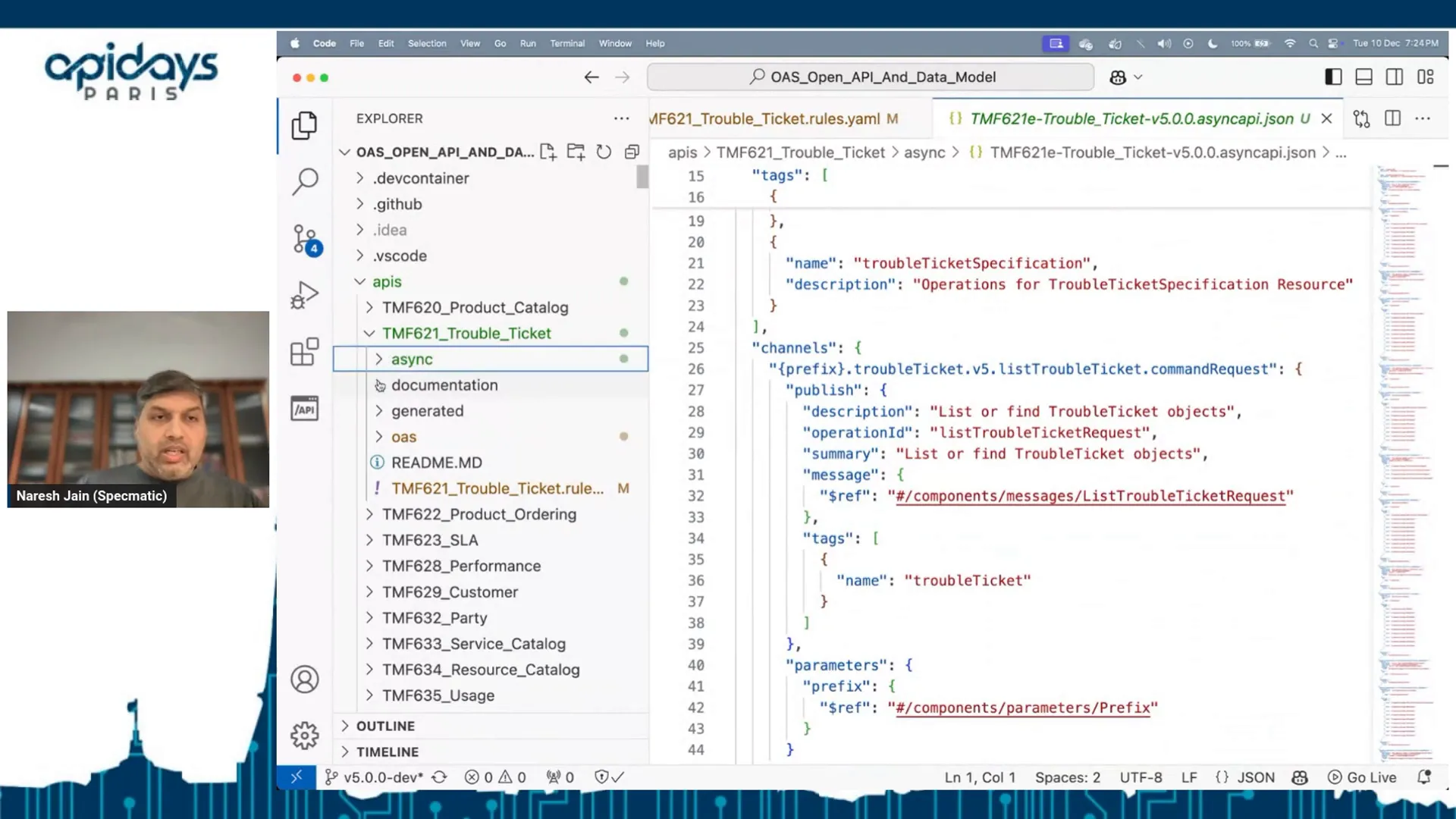

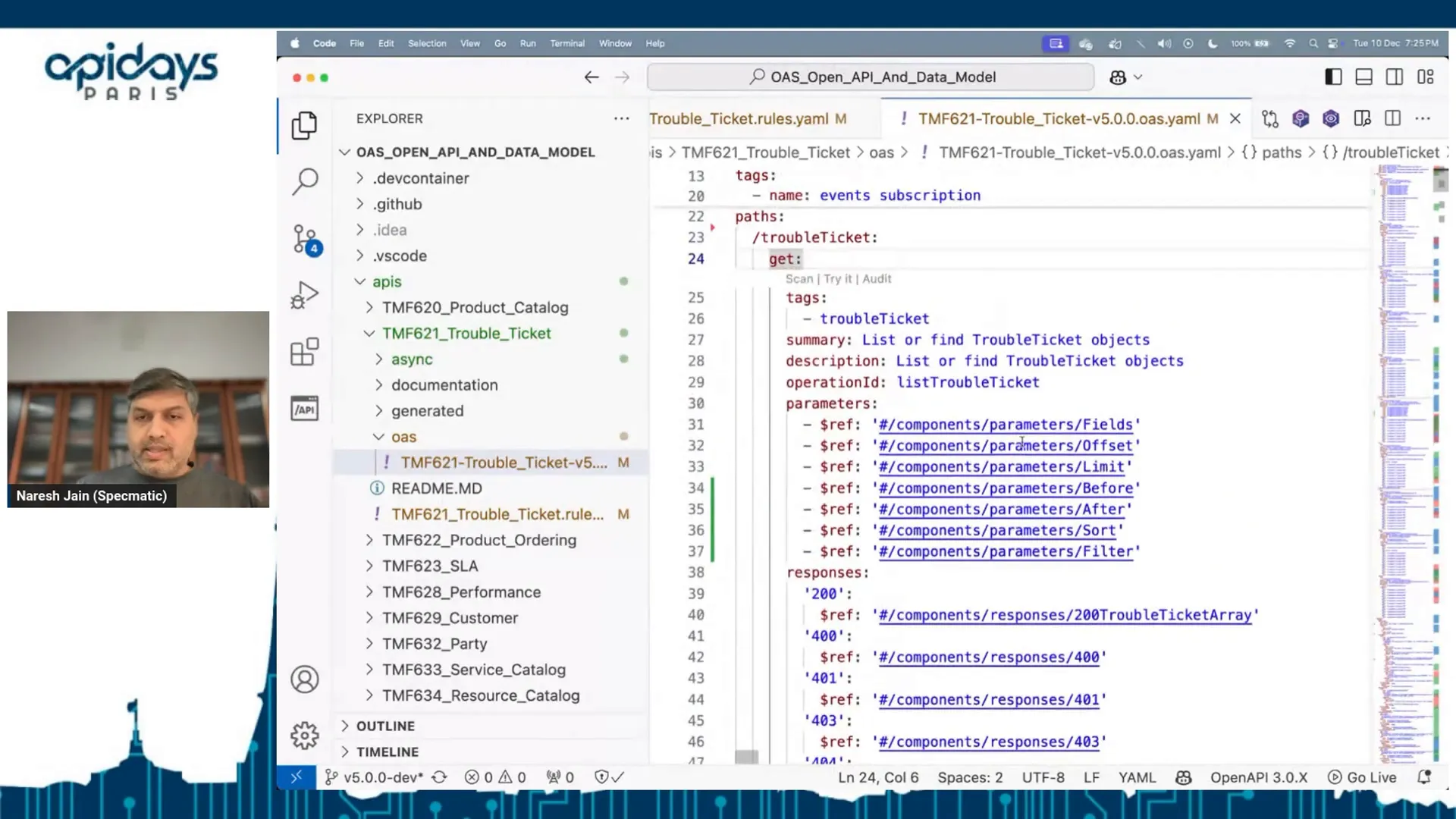

Generating OpenAPI and AsyncAPI Specifications

Once the rule file is completed, the next step is to generate the OpenAPI and AsyncAPI specifications. This process is automated, ensuring that specifications are consistent and adhere to TMForum standards. The tooling handles the heavy lifting, allowing developers to focus on higher-level design and functionality.

Benefits of Automated Specification Generation

- Consistency: Automated generation ensures that all specifications follow a uniform format.

- Efficiency: Reduces the manual effort required to create detailed API documentation.

- Ease of Use: Developers can quickly generate specifications without deep technical knowledge of the underlying standards.
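As a rough illustration of what automated generation involves, the sketch below expands one entity definition into a heavily simplified OpenAPI 3.0 document. It is not the API Factory's tooling: the function name `to_openapi`, its parameters, and the `/troubleTicket` path convention are assumptions made for this example, and AsyncAPI generation is left out entirely.

```python
def to_openapi(title, entity, properties, required, operations):
    """Build a heavily simplified OpenAPI 3.0 document for one entity."""
    collection = "/" + entity[0].lower() + entity[1:]  # e.g. /troubleTicket
    item = collection + "/{id}"
    ref = {"$ref": f"#/components/schemas/{entity}"}
    body = {"content": {"application/json": {"schema": ref}}}
    id_param = {"name": "id", "in": "path", "required": True,
                "schema": {"type": "string"}}

    paths = {collection: {}, item: {}}
    for method in operations:
        if method == "POST":
            paths[collection]["post"] = {
                "requestBody": body,
                "responses": {"201": {"description": "Created", **body}},
            }
        elif method == "GET":
            paths[item]["get"] = {
                "parameters": [id_param],
                "responses": {"200": {"description": "Success", **body}},
            }
        elif method == "PATCH":
            paths[item]["patch"] = {
                "parameters": [id_param],
                "requestBody": body,
                "responses": {"200": {"description": "Updated", **body}},
            }
        elif method == "DELETE":
            paths[item]["delete"] = {
                "parameters": [id_param],
                "responses": {"204": {"description": "Deleted"}},
            }

    schema = {
        "type": "object",
        "properties": {name: {"type": t} for name, t in properties.items()},
    }
    if required:
        schema["required"] = list(required)

    return {
        "openapi": "3.0.1",
        "info": {"title": title, "version": "0.0.1"},
        # Drop paths that ended up with no operations.
        "paths": {p: ops for p, ops in paths.items() if ops},
        "components": {"schemas": {entity: schema}},
    }


spec = to_openapi(
    "Trouble Ticket",
    "TroubleTicket",
    {"id": "string", "name": "string", "description": "string", "severity": "string"},
    ["name", "description", "severity"],
    ["GET", "POST", "PATCH", "DELETE"],
)
print(sorted(spec["paths"]))  # ['/troubleTicket', '/troubleTicket/{id}']
```

Because every specification comes out of the same function, the uniform structure described above follows from the generator rather than from reviewer discipline.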

Implementing Factory Value Objects

Factory Value Objects (FVOs) serve as a foundational element in our API design, particularly for managing entities like trouble tickets. An FVO encapsulates common attributes and mandatory properties, allowing us to maintain consistency across various API operations.

For instance, a trouble ticket FVO includes properties such as name, description, and severity. These properties are vital for defining the ticket type, ensuring that all necessary information is captured without redundancy. When a GET request is made, the FVO pattern allows us to retrieve the ticket using its primary key, eliminating the need to repeat the same information across different requests.
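One way to picture this is a small value object that carries the mandatory properties, plus a store that hands back the full ticket by its key. This is only a sketch under assumptions: the class names `TroubleTicketValueObject` and `TicketStore`, the string-typed properties, and the sequential ids are invented for illustration and are not taken from the TMForum schemas.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TroubleTicketValueObject:
    """Mandatory properties a client supplies for a trouble ticket."""
    name: str
    description: str
    severity: str

    def __post_init__(self):
        # Reject missing or empty mandatory properties up front.
        for prop, value in asdict(self).items():
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"'{prop}' is mandatory and must be a non-empty string")


class TicketStore:
    """In-memory stand-in for the service behind the API."""

    def __init__(self):
        self._tickets = {}
        self._next_id = 1

    def create(self, ticket: TroubleTicketValueObject) -> str:
        # The store assigns the primary key; the client never sends it.
        ticket_id = str(self._next_id)
        self._next_id += 1
        self._tickets[ticket_id] = {"id": ticket_id, **asdict(ticket)}
        return ticket_id

    def get(self, ticket_id: str) -> dict:
        # Retrieval needs only the primary key.
        return self._tickets[ticket_id]


store = TicketStore()
ticket_id = store.create(
    TroubleTicketValueObject("Router down", "No link on port 3", "high")
)
print(store.get(ticket_id)["severity"])  # high
```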

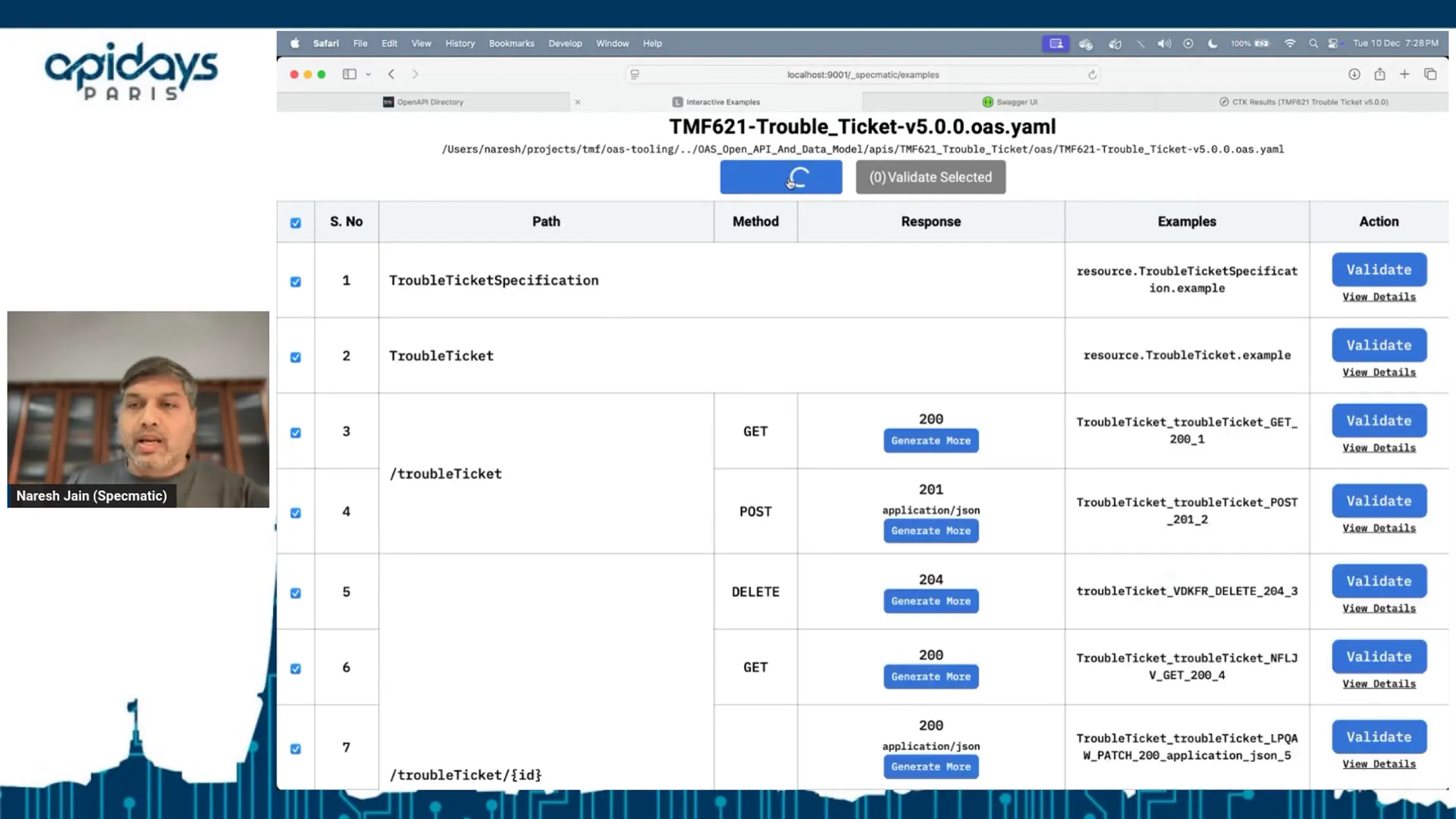

Generating API Examples

Upon successful creation of the OpenAPI and AsyncAPI specifications, the next step is to generate examples that illustrate how the API works. Examples are crucial for understanding because they provide tangible use cases that developers can refer to when implementing their integrations.

To generate these examples, we start the server, which analyses the OpenAPI specification and identifies the entities and operations defined within it. In our case, we focus on the trouble ticket specifications and their associated operations such as GET, POST, DELETE, and PATCH.

Steps to Generate Examples

- Launch the server to access the OpenAPI specification.

- Select the entities for which you want to generate examples.

- Trigger the generation process, which creates multiple examples based on the defined operations.

In our session, we generated a total of eighteen examples, showcasing the various interactions possible with the API. However, the validity of these examples must also be confirmed to ensure they adhere to the defined specifications.

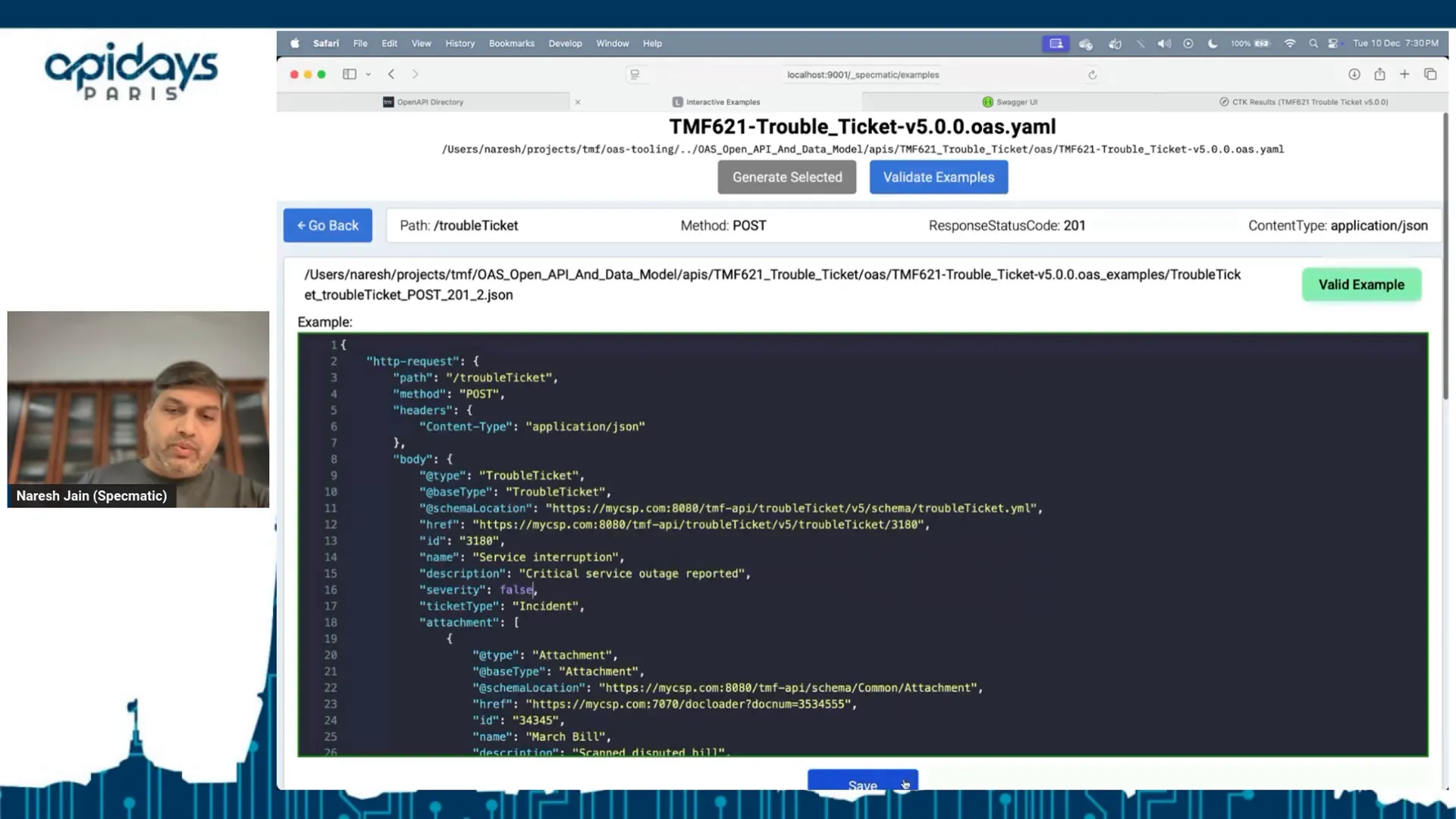

Validating Examples Against Specifications

Once the examples are generated, the next critical step is validation. This process checks whether the examples conform to the OpenAPI specification and the associated schemas. Validation helps to prevent errors that could arise from discrepancies between the examples and the specifications.

For instance, if an example gives the severity as a boolean where the schema expects a string, the validation process will highlight this inconsistency. This inline feedback is invaluable, allowing developers to correct mistakes immediately.

Validation Process Steps

- Run the validation tool against the generated examples.

- Identify any discrepancies highlighted by the tool.

- Make necessary adjustments to ensure compliance with the specifications.

After validating all eighteen examples, we confirmed that none contained errors, allowing us to proceed with confidence.
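The kind of check involved can be sketched in a few lines. The validator below is a hand-rolled stand-in, not the tool used in the session: the `SCHEMA` dictionary, the `validate_example` function, and the sample values are assumptions, and it only covers required fields and primitive types.

```python
# Hypothetical schema for a trouble ticket, in JSON Schema style.
SCHEMA = {
    "required": ["name", "description", "severity"],
    "properties": {
        "name": {"type": "string"},
        "description": {"type": "string"},
        "severity": {"type": "string"},
    },
}

JSON_TYPES = {
    "string": str,
    "boolean": bool,
    "integer": int,
    "number": (int, float),
    "object": dict,
    "array": list,
}


def validate_example(example: dict, schema: dict) -> list:
    """Return a list of mismatches between an example and its schema."""
    errors = []
    for field in schema.get("required", []):
        if field not in example:
            errors.append(f"{field}: missing required field")
    for field, value in example.items():
        spec = schema["properties"].get(field)
        if spec is None:
            continue  # properties not in the schema are ignored in this sketch
        expected = spec["type"]
        ok = isinstance(value, JSON_TYPES[expected])
        # bool is a subclass of int in Python, so exclude it for numeric types.
        if isinstance(value, bool) and expected != "boolean":
            ok = False
        if not ok:
            errors.append(f"{field}: expected {expected}, got {type(value).__name__}")
    return errors


bad_example = {"name": "Router down", "description": "No link", "severity": True}
print(validate_example(bad_example, SCHEMA))
# ['severity: expected string, got bool']
```

An empty list corresponds to the result reported above, where all generated examples passed.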

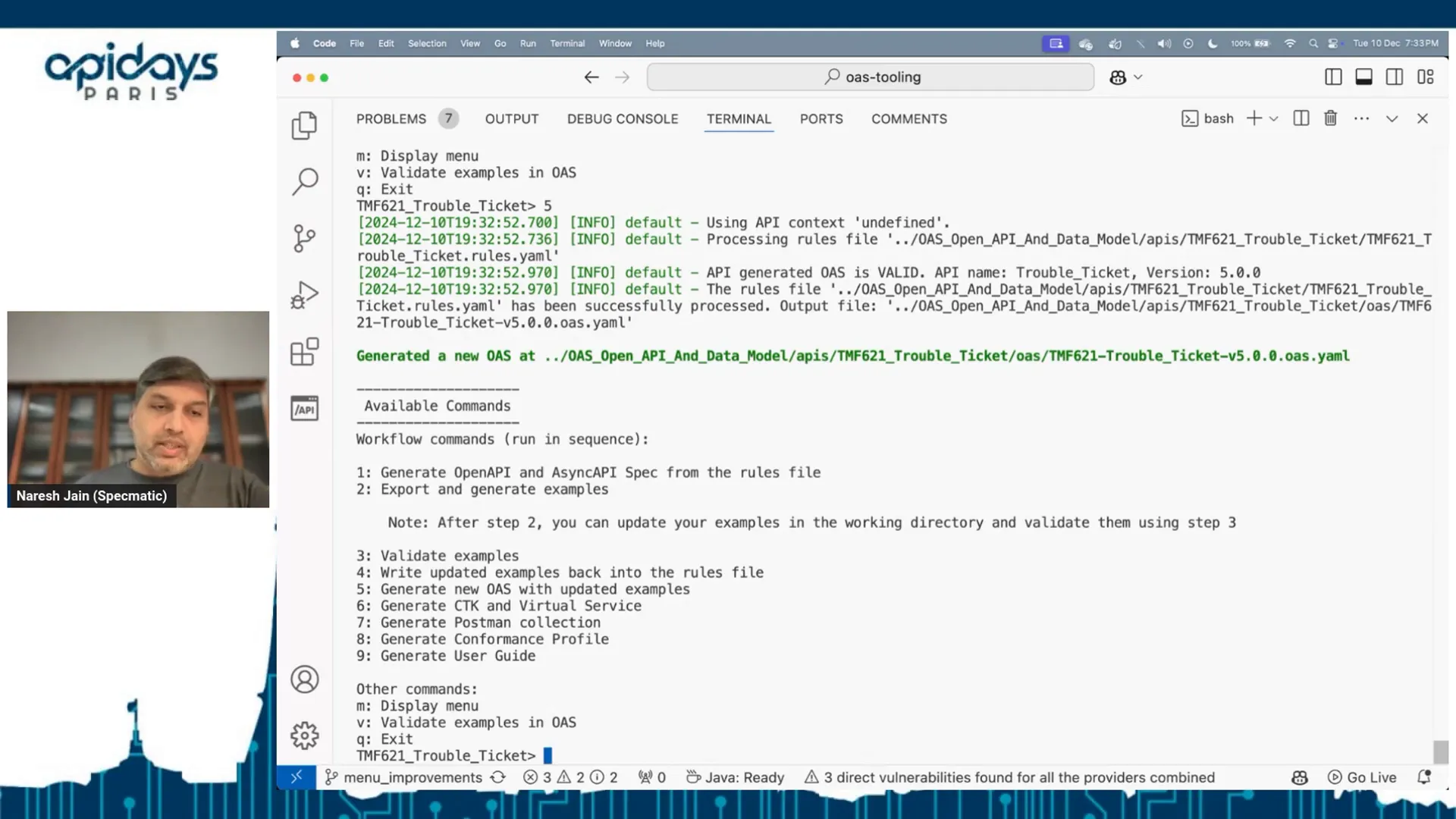

Updating Rule Files with Examples

With validated examples in hand, it is essential to update the rule file, which serves as the source of truth for our API specifications. This step ensures that all generated examples are documented alongside their respective schemas within the rule file.

By integrating these examples into the rule file, we maintain a single point of reference for all API-related documentation and facilitate easier updates in the future.

Steps to Update the Rule File

- Access the rule file that defines the API schemas.

- Embed the validated examples into the corresponding sections of the rule file.

- Save the updated rule file to ensure all changes are captured.

Upon completion, the rule file now includes examples alongside the schemas, enhancing its utility for future development and reference.

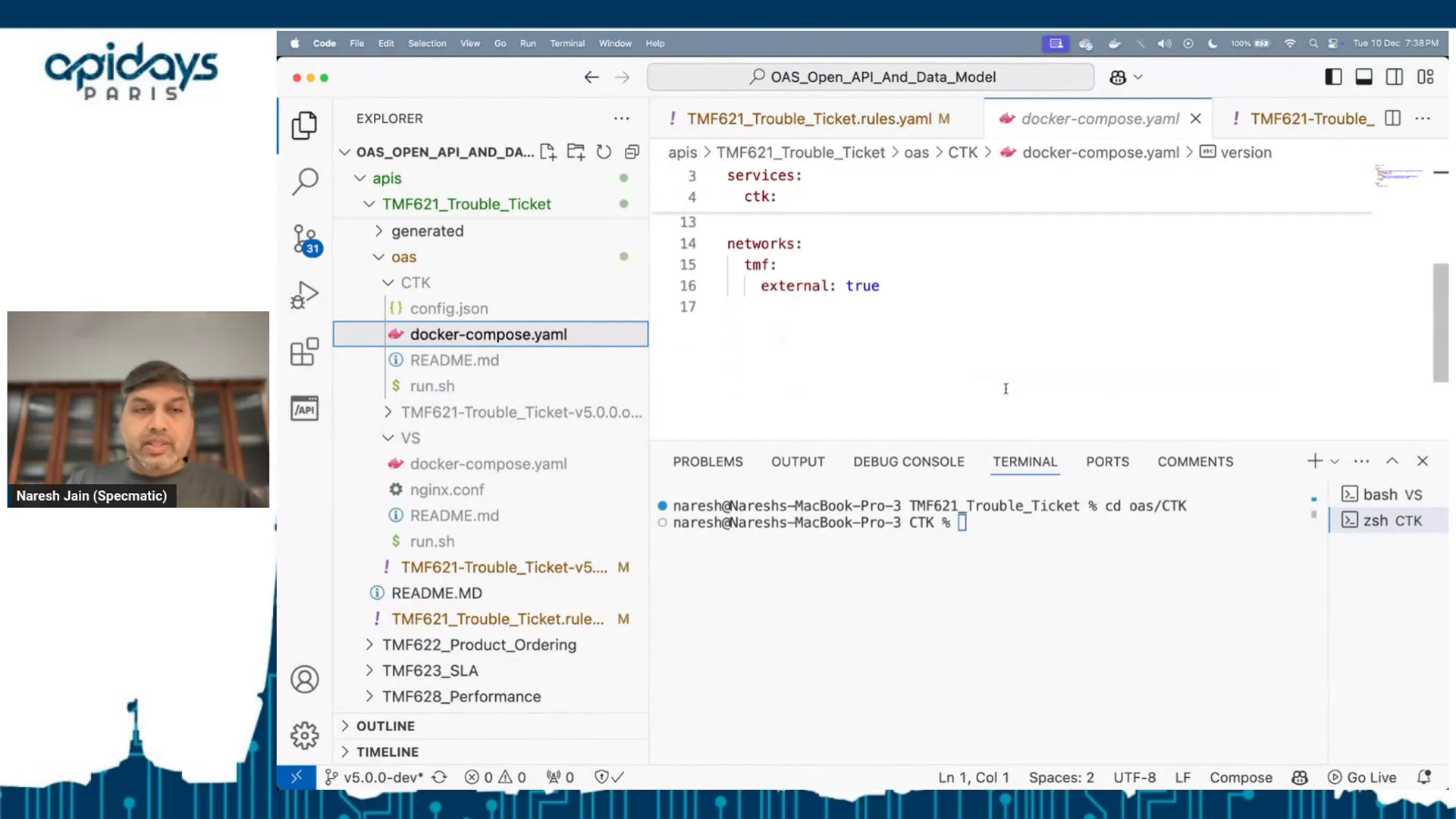

Generating the Certification Toolkit and Virtual Service

Next, we turn our attention to generating the Certification Toolkit (CTK) and the virtual service. This process involves creating a Docker image that encapsulates all necessary components for the virtual service and the CTK, which can be easily deployed by any team member.

The CTK is fundamental for validating that implementations comply with the specifications outlined in the rule file. By automating this process, we ensure that any member can quickly set up and run the virtual service, facilitating seamless integration testing.

Steps to Generate CTK and Virtual Service

- Initiate the generation process via the tooling.

- Confirm that the Docker image for the CTK and virtual service has been successfully created.

- Locate the generated directories containing the Docker Compose file for deployment.

Once generated, the virtual service can be run locally, providing a mock environment for developers to interact with as they build their integrations.

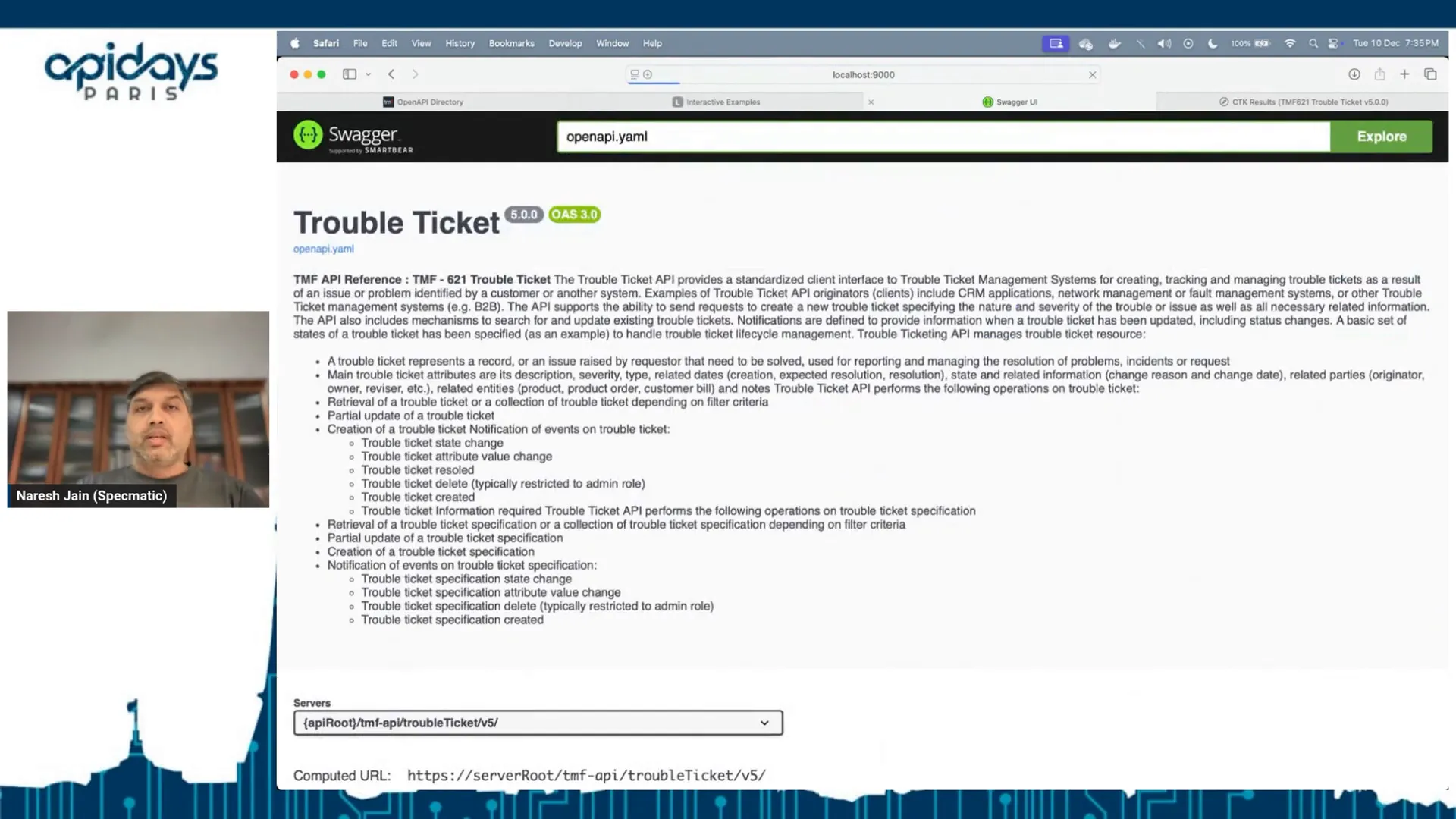

Running the Virtual Service

With the virtual service set up, it can be executed to simulate real API interactions. This service offers an invaluable resource for both API consumers and developers, providing a platform to test and refine their implementations.

Accessing the virtual service is straightforward. By navigating to the specified localhost address, users can interact with the Swagger UI, which presents the available endpoints and examples for testing.

Steps to Run the Virtual Service

- Navigate to the Docker directory and run the Docker Compose command.

- Access the virtual service via the specified localhost address.

- Use the Swagger UI to explore the API operations and execute requests directly.

This setup allows developers to validate their understanding of the API behaviour and iterate on their implementations efficiently.

Contract Testing with the CTK

With the virtual service running, we use the Certification Toolkit (CTK) to conduct contract testing. This process verifies that the implementation adheres to the defined API specifications, ensuring that all endpoints behave as expected.

The CTK runs a series of automated tests against the virtual service, checking for compliance with the API contract. This is crucial for maintaining the integrity of the API as it evolves over time.

Steps for Contract Testing

- Launch the CTK against the virtual service.

- Review the results of the tests to identify any compliance issues.

- Address any failures to ensure the implementation meets the specified contract.

In our testing, we successfully executed ten tests, all of which passed, confirming the robustness of the implementation and its alignment with the specifications.
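The shape of such a run can be sketched as follows. This is not the CTK's interface: the `CONTRACT` table, the `make_stub` in-memory service, and `run_contract_tests` are all hypothetical. In real use, the `call` argument would send HTTP requests to the virtual service or to an implementation rather than invoke a local function.

```python
# Hypothetical contract steps:
# (method, path, request body, expected status, fields required in the response)
CONTRACT = [
    ("POST", "/troubleTicket",
     {"name": "Router down", "description": "No link", "severity": "high"},
     201, ["id", "name", "severity"]),
    ("GET", "/troubleTicket/1", None, 200, ["id", "name", "severity"]),
    ("DELETE", "/troubleTicket/1", None, 204, []),
    ("GET", "/troubleTicket/1", None, 404, []),
]


def make_stub():
    """Return an in-memory callable that mimics a trouble ticket service."""
    tickets = {}

    def call(method, path, body=None):
        parts = path.strip("/").split("/")
        ticket_id = parts[1] if len(parts) > 1 else None
        if method == "POST" and ticket_id is None:
            new_id = str(len(tickets) + 1)
            tickets[new_id] = {"id": new_id, **body}
            return 201, tickets[new_id]
        if ticket_id not in tickets:
            return 404, {}
        if method == "GET":
            return 200, tickets[ticket_id]
        if method == "DELETE":
            del tickets[ticket_id]
            return 204, {}
        return 405, {}

    return call


def run_contract_tests(call, contract):
    """Run each contract step in order; return (passed count, failures)."""
    failures = []
    for method, path, body, want_status, want_fields in contract:
        status, response = call(method, path, body)
        if status != want_status:
            failures.append(f"{method} {path}: expected {want_status}, got {status}")
            continue
        missing = [f for f in want_fields if f not in response]
        if missing:
            failures.append(f"{method} {path}: response missing {missing}")
    return len(contract) - len(failures), failures


passed, failures = run_contract_tests(make_stub(), CONTRACT)
print(f"{passed} passed, {len(failures)} failed")  # 4 passed, 0 failed
```

Keeping the runner independent of how requests are sent means the same contract steps can be pointed at a stub first and at a real implementation later.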

Postman Collection and Additional Artifacts

As we further enhance the API development process, we can generate a Postman collection alongside other critical artifacts. This collection serves as a practical tool for developers, allowing them to easily test the APIs and understand the expected behaviours. In addition to the Postman collection, we also produce a conformance profile and a user guide to assist in the implementation and testing phases.

Generating these artifacts is an automated process that streamlines the workflow from API design to testing. By providing a comprehensive suite of resources, we empower developers to create and implement new APIs efficiently. This integration significantly enhances the developer experience, making it faster and more intuitive.

CI Pipeline and Artifact Publishing

Once the artifacts are generated, they enter our Continuous Integration (CI) pipeline, which is responsible for publishing these resources on our website. This publication process ensures that all generated artifacts are accessible to members, allowing them to implement the API specifications effectively.

The CI pipeline also plays a pivotal role in maintaining the integrity of the artifacts. Each time an artifact is updated or a new one is generated, the CI pipeline triggers the necessary steps to ensure that the latest resources are available to users. This automated flow not only saves time but also increases the reliability of the published materials.
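The write-up does not say how the pipeline decides what to republish. One common approach, shown here purely as an assumption, is to compare a content hash of each generated artifact with the hash of what is already published. The artifact names and the `artifacts_to_publish` helper are hypothetical.

```python
import hashlib


def digest(content: bytes) -> str:
    """SHA-256 hex digest of an artifact's content."""
    return hashlib.sha256(content).hexdigest()


def artifacts_to_publish(generated: dict, published: dict) -> list:
    """Return the sorted names of artifacts that are new or have changed.

    generated: artifact name -> content bytes from the current build
    published: artifact name -> digest of the version currently live
    """
    return sorted(
        name
        for name, content in generated.items()
        if published.get(name) != digest(content)
    )


# Hypothetical state: one artifact changed, one unchanged, one new.
published = {
    "openapi.yaml": digest(b"v1"),
    "postman_collection.json": digest(b"collection"),
}
generated = {
    "openapi.yaml": b"v2",
    "postman_collection.json": b"collection",
    "user_guide.md": b"guide",
}
print(artifacts_to_publish(generated, published))
# ['openapi.yaml', 'user_guide.md']
```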

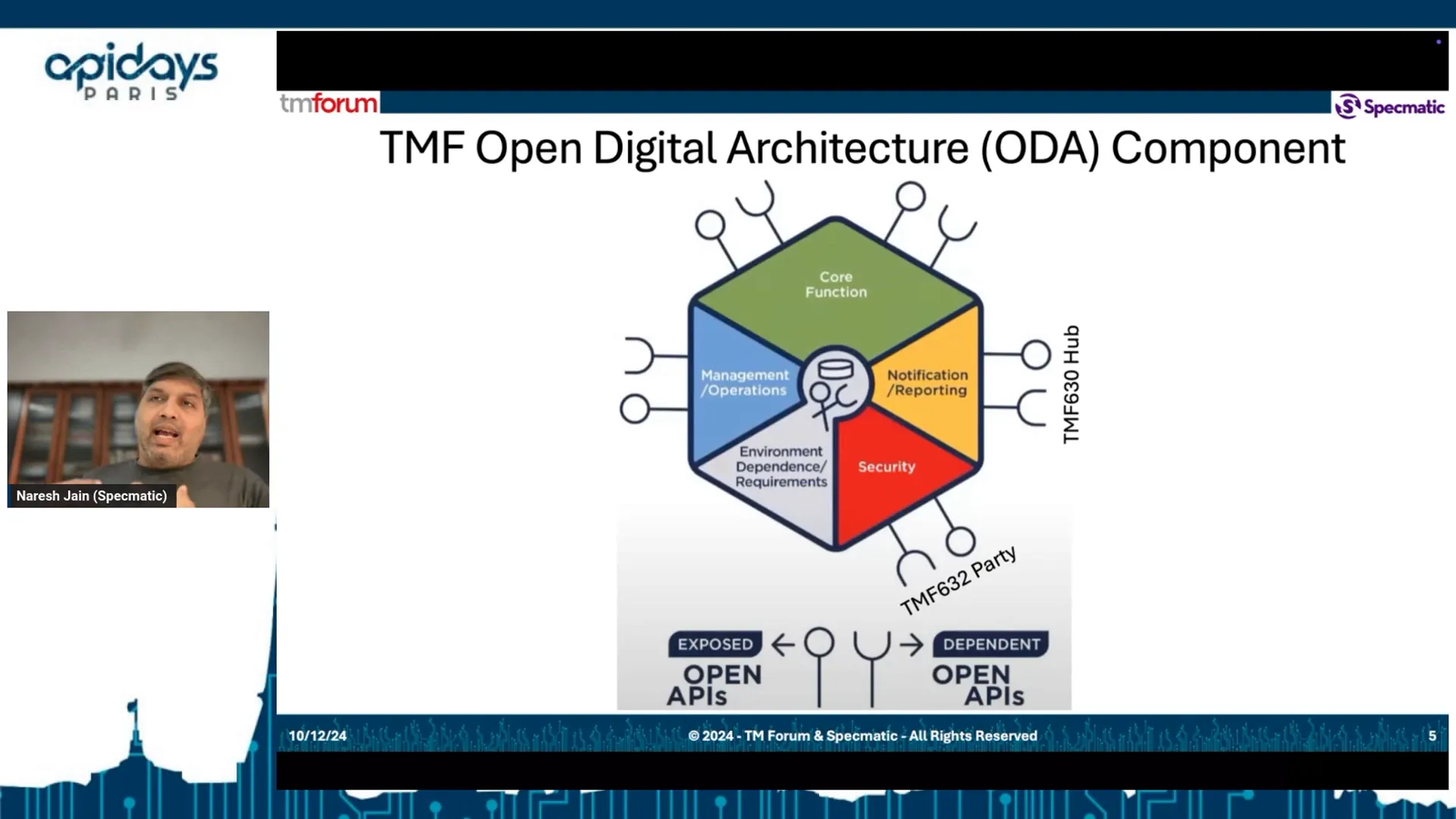

Open Digital Architecture Components

Introducing Open Digital Architecture (ODA) components marks a significant step in API management. ODA components group API specifications into logical business functionalities, providing clarity and structure to the development process. Each component encompasses core functions delivered by the APIs, along with associated security standards and monitoring metrics.

For instance, when a member identifies a business functionality to implement, they can reference the corresponding ODA component. This component will list both the exposed APIs and the dependent APIs necessary for achieving the desired functionality. This structured approach simplifies the development process and ensures that all necessary resources are readily available.
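A component's exposed and dependent APIs can be modelled as a simple data structure, which also makes it easy to see which dependencies are still unmet. The sketch below is illustrative only: the `OdaComponent` class, the component names, and the API names are hypothetical and not taken from the ODA component catalogue.

```python
from dataclasses import dataclass, field


@dataclass
class OdaComponent:
    """A business functionality with the APIs it exposes and depends on."""
    name: str
    exposed_apis: list = field(default_factory=list)
    dependent_apis: list = field(default_factory=list)


def missing_dependencies(component: OdaComponent, available_apis: list) -> list:
    """Dependent APIs that no deployed component currently exposes."""
    return sorted(set(component.dependent_apis) - set(available_apis))


# Hypothetical components and API names.
ticketing = OdaComponent(
    "TroubleTicketManagement",
    exposed_apis=["TroubleTicket"],
    dependent_apis=["Party", "Notification"],
)
parties = OdaComponent("PartyManagement", exposed_apis=["Party"])

deployed = [parties]
available = [api for component in deployed for api in component.exposed_apis]
print(missing_dependencies(ticketing, available))  # ['Notification']
```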

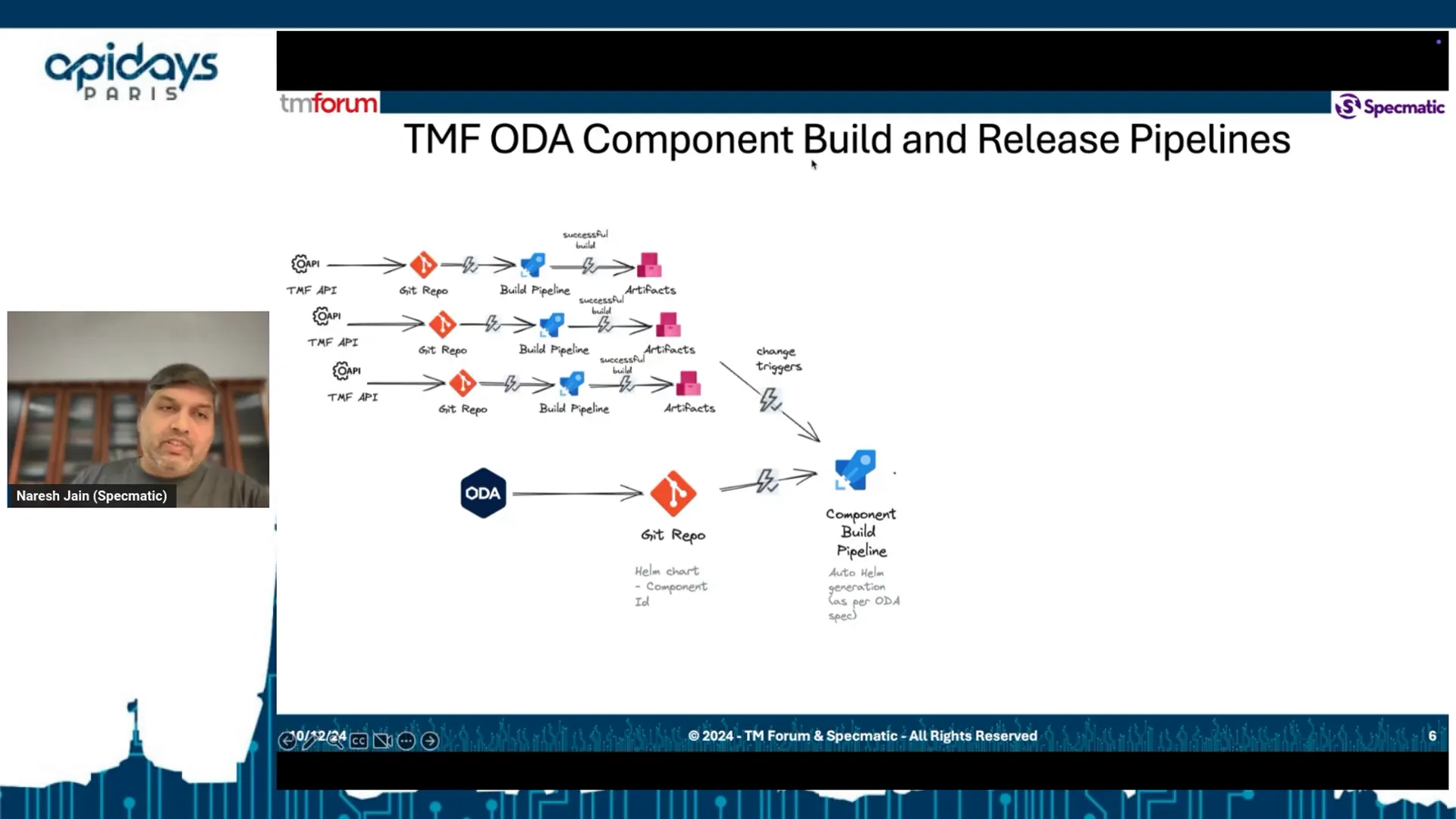

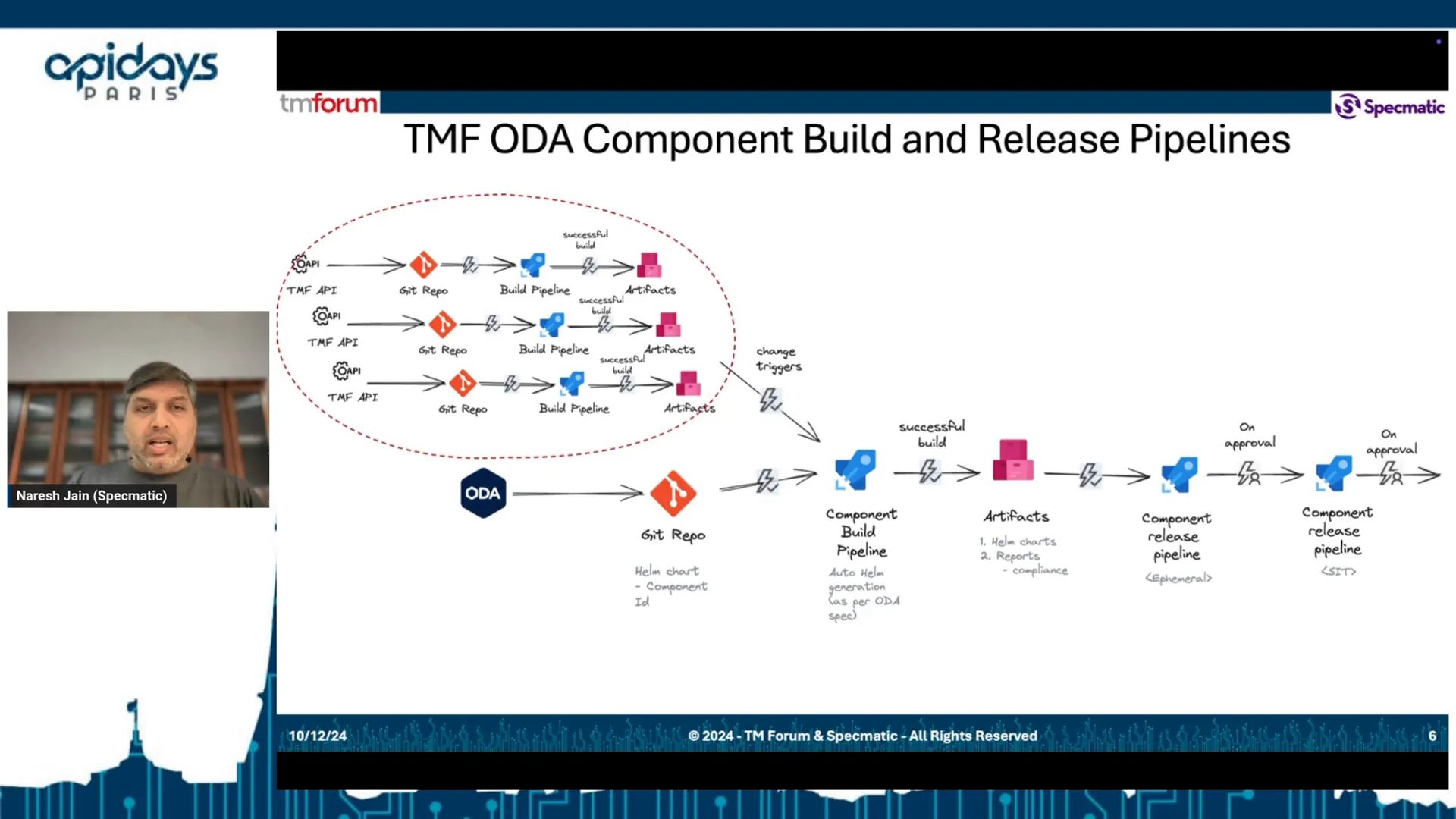

Building and Managing ODA Components

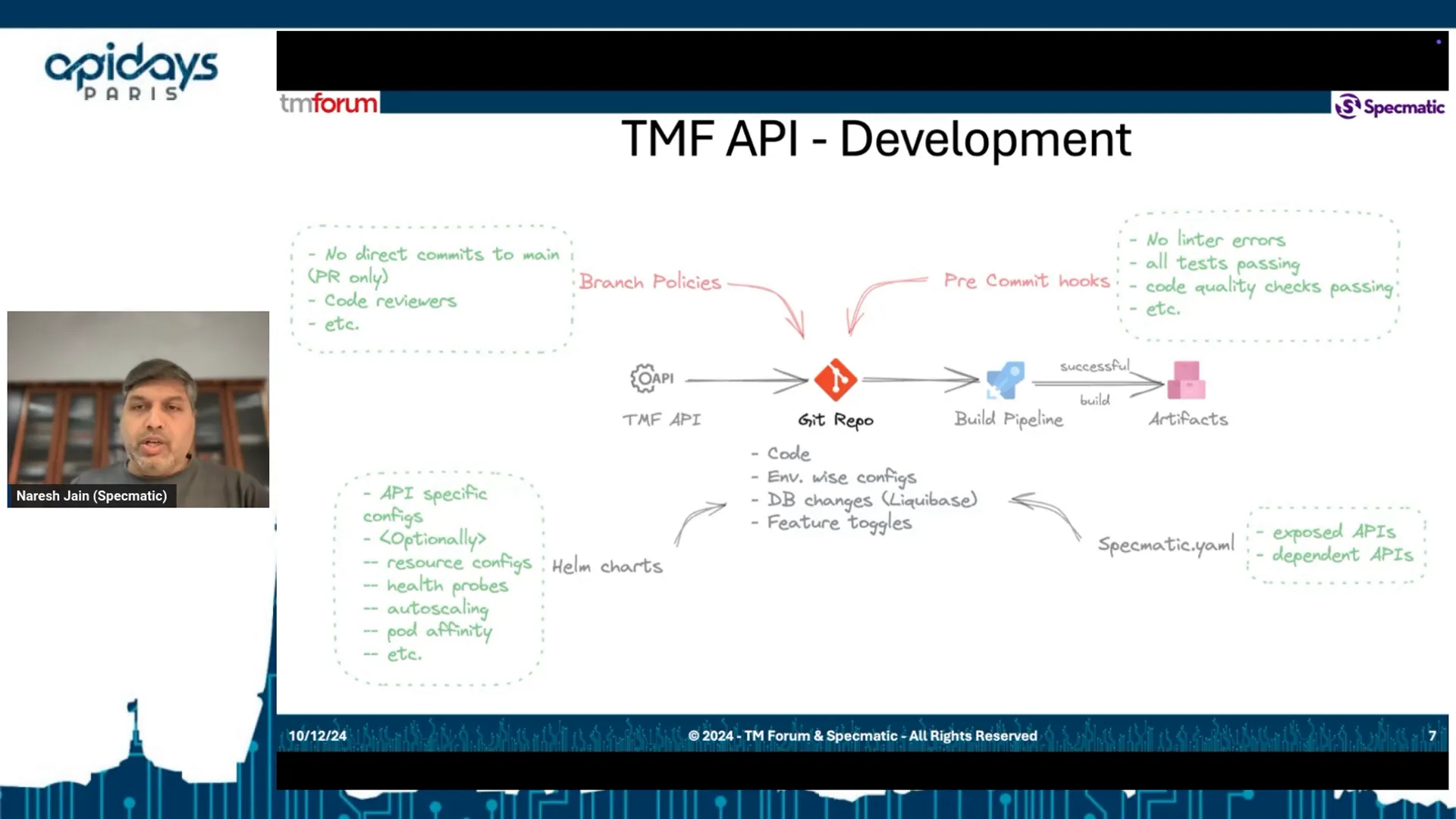

Each ODA component is housed in its own Git repository, complete with a dedicated build pipeline. This pipeline automates the generation of deployment artifacts like Helm charts, ensuring that each API can be independently developed and deployed. Furthermore, changes made within any component trigger the build pipeline, which generates the necessary artifacts for subsequent release processes.

API Implementation and Testing Pipelines

The API implementation code resides within the Git repository, where it is accompanied by environment-specific configurations. For databases, tools like Liquibase are employed to manage schema changes, while feature toggles allow for flexible deployments. This setup ensures that the APIs can be tested and deployed in a controlled manner.

Once a pull request is raised, the pipeline initiates a series of tests, including unit tests, component tests, and contract tests. These tests are crucial for ensuring that the implementation aligns with the defined specifications. Tools like Specmatic help run the contract tests efficiently, providing immediate feedback to developers.

Contract Testing with Specmatic

Contract testing is a vital part of the pipeline, as it verifies that the API adheres to its specifications. By running a comprehensive suite of tests, we ensure that any changes made do not compromise the integrity of the API. In our recent tests, we successfully executed over 2,200 tests, all of which passed, reinforcing the reliability of our implementation.

Conclusion and Open Source Tools

In conclusion, the integration of automated artifact generation, CI pipelines, and ODA components creates a powerful framework for API management. This streamlined process not only enhances the developer experience but also ensures that all resources are readily available and compliant with industry standards.

Tools like Specmatic play a crucial role in this ecosystem, providing open-source solutions that facilitate testing and validation. As we continue to refine our processes, we encourage members to explore these tools and leverage them in their API development efforts.