Avoiding APIpocalypse; API Resiliency Testing FTW!

21 Jun 2024

Online

Summary

As we build more complex distributed applications, the resilience of APIs can be the linchpin of application reliability and user satisfaction. This talk will delve into practical tools and techniques used to enhance the resilience of APIs. We will explore how we utilise API specifications for simulating various input data, network conditions and failure modes to test how well the API handles unexpected situations.

Our session will begin with an overview of API resilience: why it matters, and what it means to build robust APIs that gracefully handle flaky dependencies in real-world operations. We’ll discuss the role of contract testing in achieving resilience and how to turn API specifications into executable contracts that can be continuously validated.

Following the introduction, we will dive into hands-on strategies for implementing these techniques into your day-to-day development and testing workflow. This includes setting up practices to run resilience tests, such as testing endpoints for handling latency, errors, and abrupt disconnections. We’ll provide examples of how to configure tools to simulate these conditions, interpret test results, and iteratively improve API designs.

The session will also cover how to integrate with CI/CD pipelines, and practices to foster better collaboration between Architects, Developers, QA Engineers, and DevOps stakeholders through shared understanding and executable documentation.

To wrap up, we will discuss best practices for scaling API testing strategies across larger projects and teams, ensuring that resilience testing becomes a cornerstone of your API strategy rather than an afterthought.

This talk is designed for Architects, Tech Leads, Software Developers, QA Engineers, and DevOps Engineers who are keen on enhancing API resilience. Attendees will leave with actionable insights and tools to implement robust API testing strategies that can withstand the pressures of real-world usage. Join us to learn how you can transform your API testing approach and help build systems that last.

Transcript

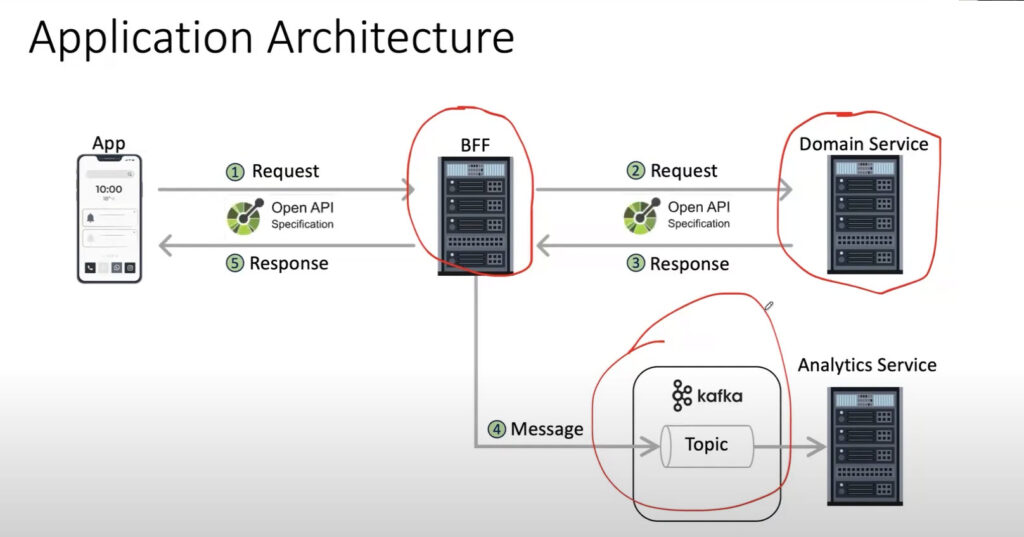

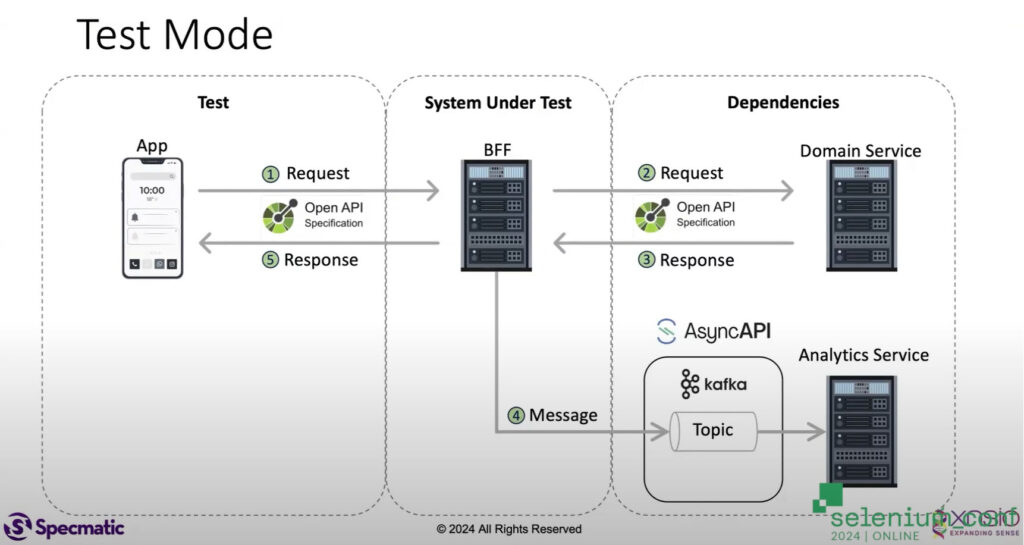

This talk is about my experience of building really resilient APIs that work at really large scale. And so this is some of the background around how to make that happen in your own organization. I’m going to take a quick example here to just explain the architecture of an application. Let’s quickly recap something that all of you must be very familiar with.

The workings of a simple app – what could go wrong?

You have an app, and the app makes a request to a backend for frontend (BFF). This BFF may depend on one or more actual domain services. So it makes a request to the domain service, it gets a response back from the domain service, it does some business logic, and then it posts a message on a Kafka topic so that an analytics service can pick it up and do its thing, and then it gets the response back to the application. This is a very simple application, but there are a lot of things that can actually go wrong in it. So I want you to think about this from a resiliency point of view. Let’s say I am thinking about this BFF layer over here. Let me quickly highlight that. So let’s say I have this BFF layer and I want to make sure that this BFF layer is very resilient. Subsequently, we will also make sure that this domain service is very resilient. And finally, we want to make sure that this Kafka guy is also very resilient. So these are all the pieces that technically could have some fault or some problem, and that could make the overall experience for the user who’s using the app not so pleasant.

So our job here as quality engineers, is to make sure that the experience here on the app is seamless, which means these various moving parts that we depend on are actually very resilient. What are the kinds of things that can actually go wrong? Of course, here, I’m just highlighting that these have OpenAPI specifications and AsyncAPI specifications as well. But what are the kinds of things, from a resiliency point of view, that you think can go wrong in this case? Network latency between these services could cause a problem.

There are many servers here, and any one of them could go down. And we don’t really seem to have redundancy in place, at least in this simplistic diagram that I’ve shown you. So that’s certainly a good possibility. The response time and network latency could both certainly be an issue. The topics on Kafka could choke: we could overwhelm Kafka, and it may not be able to keep up with too many requests at a time. You could have a runaway success, and your app could be downloaded by millions of people who all try and hit this thing, and then there might be too many requests. There could be disk usage issues when you’re trying to write logs or write to a database or things like that. I mean, there are several such problems that can occur and hurt your services from a resiliency point of view.

Resiliency Testing for your application

So what kinds of testing would you now perform to make sure that your application is actually resilient? We talked about the kinds of problems that can occur, but now what testing would you do to ensure that your service is resilient? I see chaos testing. I see load testing. Very good. What else do you anticipate? If only Kafka is down, then how do we resolve it? But what kinds of testing would you do? Contract testing. Chaos and resiliency testing. Load testing. So here’s my opinion, not an exhaustive list, but a more pragmatic list that you would use on a day-to-day basis when you’re testing resiliency of your services.

Negative functional testing

The first and very simple one, which I think a lot of us already practice, is negative functional testing, where you’re doing boundary value testing and equivalence partitioning. You’re sending invalid data types and testing schema validations, format validations, underflow and overflow conditions and stuff like that. These are all in the realm of functional testing, but more negative functional testing. So this is like bread and butter in my opinion. We would do this very often. A little bit more sophistication on top of that could be service dependency testing.

Contract testing

Backward compatibility testing

This is also very important from a resiliency point of view. If a new version of your app or BFF API was released and that made a backward breaking change, then the apps will face an issue. So that also will make your service non-resilient or non-available.
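A backward-compatibility check can be pictured as a comparison between the old and the new version of a request schema. The sketch below is only an illustration: the simplified schema layout, the field names and the two rules it applies are assumptions made for this example, not the behaviour of any particular tool.

```python
def breaking_changes(old: dict, new: dict) -> list[str]:
    """Return reasons why `new` would break consumers written against `old`."""
    problems = []

    # Rule 1: a newly required request field breaks consumers that do not send it.
    for field in sorted(set(new["required"]) - set(old["required"])):
        problems.append(f"request field '{field}' is newly required")

    # Rule 2: a changed field type breaks consumers that still send the old type.
    for field, old_type in old["properties"].items():
        new_type = new["properties"].get(field)
        if new_type is not None and new_type != old_type:
            problems.append(
                f"request field '{field}' changed type from {old_type} to {new_type}"
            )

    return problems


# Hypothetical request schemas for successive versions of one endpoint.
v1 = {"required": ["name"], "properties": {"name": "string", "count": "integer"}}
v2_compatible = {
    "required": ["name"],
    "properties": {"name": "string", "count": "integer", "note": "string"},
}
v2_breaking = {
    "required": ["name", "sku"],
    "properties": {"name": "string", "count": "string", "sku": "string"},
}

print(breaking_changes(v1, v2_compatible))
print(breaking_changes(v1, v2_breaking))
```

Adding an optional field leaves existing consumers untouched, while a new mandatory field or a changed type is reported as a breaking change.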

Chaos engineering and chaos testing

So under chaos engineering, you have several different kinds of testing. The first one is fault injection testing. So you may inject or induce a fault by bringing down a service to see how resilient the service is. You may want to do failover testing: if you have multiple pods, you bring down one of them and see if the traffic fails over to the other pods without causing an outage. You may also want to test, for example, recovery of a database. You might want to try and restore from your database backup and see if that recovery is working fine. You may want to induce partial failures in your network and see if the service is still responding within the given SLA from a response time perspective. You may do a lot of chaos engineering and related chaos testing in this context.

Performance testing

We have several different types of performance testing, starting with load testing, then stress testing, then soak testing. Things perform well for a few hours, and then maybe a few hours later or a few days later, suddenly things start becoming slow and there may be memory leak issues. You may be running out of file descriptors or disk, or there may be other kinds of things that cause these issues. So soak testing becomes again very important from that perspective. Then there is latency testing and concurrency testing. What happens if the exact same user is logged in from two devices and is trying to make a request? Which one gets honored? How does it work? So there is that kind of concurrency testing, and also just bombarding the service with a lot of requests, but that is already covered under load testing.

Security testing

Security testing is very important, from SQL injection to cross site scripting to unauthorized access to session expiry, whether the sessions are expiring correctly. You may then have a host of penetration testing or pen tests that you might do. You may want to do DDoS attacks to make sure your firewalls and other kinds of things are resilient and they are holding up things. And of course people may do vulnerability scans to try and find known vulnerabilities that they can exploit and so forth. So again, that’s important from a testing point of view.

Observability model testing

And finally, of course, in spite of all of this testing, you may still want to make sure that you test your observability model itself, the monitoring and alerting: that it does give you the alert at the right time, whether the data is visible on the dashboards, and whether you’re able to do deep tracing and other kinds of observability related practices.

So all of these things, which we use on a very regular basis at work, are what I would categorize under “resiliency testing”. Of course, this session is only 45 minutes long, so I won’t be able to cover each and every topic in detail. But my hope is to cover the things on the left side and show you some actual demos of how we would go about functional testing, negative functional testing, the dependency pieces, contract testing specifically, and then a little bit of fault injection related testing. So we will cover the ones on the left. For the ones on the right, I think we might need a separate session, but from a completeness point of view, I just wanted to call these things out.

Introducing API Contracts

The first thing that I want to tackle today is both the negative functional API testing and service dependency testing. Here I want to introduce you to the concept of API contracts and how you can actually leverage API contracts to tick off these two boxes. Often people ask, “Hey, what is a contract?” There is a lot of confusion in terms of what we mean by the term “contract”. So I’m going to take a quick minute and set the stage, to make sure that we’re all on the same page when it comes to “what is a contract?”

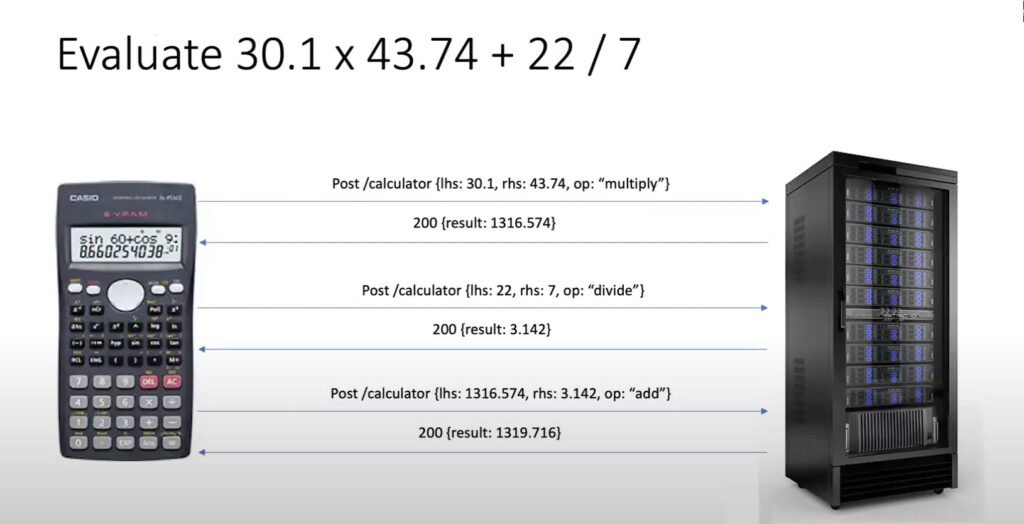

So let’s imagine I want to evaluate this expression: 30.1 times 43.74, plus 22 divided by seven. If you think about it, I might first make a request for 30.1 times 43.74. So I would evaluate the multiplication, then I would evaluate the division, and then I would take the results from both and add the two, and that would give me the final answer. This is a very simple interaction. I can now represent this more like an API call that all of us can relate to. So I’m posting a message to /calculator with a left-hand side, a right-hand side, and an operator, and essentially doing the same for the three operations: multiplication, division and addition.

I’m hoping this is something everyone can relate to. So now from an API testing point of view, something that all of you are familiar with, what are the kinds of tests one would consider doing on this? I might send 30.1 and 43.74 with multiply, and I would expect a 200 response back with this as the result. That is what I would validate or assert in my test. Then of course I would also store the result because I want to add it later. I might also want to send negative numbers and see if negative numbers are being handled correctly or not. I should get back a negative result in this case.

Now we’re getting a little bit into data type validation and boundary conditions and things like that, where I might want to send ABC and see what happens. And of course you should be getting a 400 bad request saying invalid left hand side value. The HTTP status and the error message are both important to validate in your tests. You may also want to play around with the operator itself. The operators are fixed and they are of a specific type. But if you try and send something that’s completely invalid, again, you should get a 400 response back with a valid reason for it. So I’m hoping these are all the kinds of tests that one would perform. These are more on the functional positive and negative side of things. Of course, you can chain these methods together and then do API workflow tests. But here, let’s just focus on the API test point of view.
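The checks described above can be written down against a small in-process stand-in for the calculator endpoint. This is a minimal sketch: the field names `lhs`, `rhs` and `op`, the four operator names, the division-by-zero rule and the exact error wording are assumptions for illustration, since the transcript does not show the payloads.

```python
OPERATIONS = {
    "add": lambda a, b: a + b,
    "subtract": lambda a, b: a - b,
    "multiply": lambda a, b: a * b,
    "divide": lambda a, b: a / b,
}


def is_number(value) -> bool:
    # bool is a subclass of int in Python, so it is excluded explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def calculator(body: dict) -> tuple[int, dict]:
    """Hypothetical handler for POST /calculator: returns (status, response body)."""
    lhs, rhs, op = body.get("lhs"), body.get("rhs"), body.get("op")
    if not is_number(lhs):
        return 400, {"error": "invalid left hand side value"}
    if not is_number(rhs):
        return 400, {"error": "invalid right hand side value"}
    if not isinstance(op, str) or op not in OPERATIONS:
        return 400, {"error": "invalid operator"}
    if op == "divide" and rhs == 0:
        return 400, {"error": "division by zero"}
    return 200, {"result": OPERATIONS[op](lhs, rhs)}


# Positive case: valid numbers and a valid operator.
status, response = calculator({"lhs": 30.1, "rhs": 43.74, "op": "multiply"})
assert status == 200 and abs(response["result"] - 1316.574) < 1e-9

# Negative numbers should be handled and give a negative result.
assert calculator({"lhs": -5, "rhs": 3, "op": "multiply"}) == (200, {"result": -15})

# Wrong data type: both the status and the error message are asserted.
assert calculator({"lhs": "ABC", "rhs": 3, "op": "add"}) == (
    400,
    {"error": "invalid left hand side value"},
)

# Operator outside the fixed set.
assert calculator({"lhs": 1, "rhs": 2, "op": "power"}) == (400, {"error": "invalid operator"})
```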

What is a Contract?

Now, coming back to the original question, what is the contract? I think someone mentioned that we can do contract testing. So what does a contract mean in this case? There is a specific agreement between the consumer of the service and the provider of the service in terms of the data types, the schema, the API signatures, the possible response codes and so forth that has been agreed upon. That’s what is the API signature, or like the API contract if you will. There is one very popular specification format for capturing this. It’s called an OpenAPI specification. The older name for this is Swagger.

OpenAPI is one way in which you can say, “Hey, this is my OpenAPI 3.0 version of the specification and I have a path called /calculator. It has a post method and it can have possible 200 responses. It can have 400 responses. And here is the request body that it can take, which has a left hand side, a right hand side and an operator.” So as you can see, all three are required. You’ll also notice that the op, which is the operation, is an enum that can be only one of these four possible values. Now this, I would say, is a pretty decent contract: it captures what the provider and the consumer have agreed upon pretty nicely in one document. Now once you have this, the advantage is that a lot of the tests that you were looking at can be derived from it. For example, if I send two valid numbers in the left hand side and right hand side, and I pick one of the operations from the enum that is there, then I expect a 200 response back with the result.
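To make the shape of such a contract concrete, here is a simplified stand-in written as a Python dictionary, with a small function that lists how a request body departs from it. A real contract would be an OpenAPI YAML or JSON document; the dictionary layout, the field names and the operator names below are assumptions for this sketch.

```python
CALCULATOR_CONTRACT = {
    "path": "/calculator",
    "method": "post",
    "request": {
        "required": ["lhs", "rhs", "op"],
        "properties": {
            "lhs": {"type": "number"},
            "rhs": {"type": "number"},
            "op": {"type": "string", "enum": ["add", "subtract", "multiply", "divide"]},
        },
    },
    "responses": [200, 400],
}


def violations(body: dict, schema: dict) -> list[str]:
    """List every way in which `body` departs from the request schema."""
    problems = [
        f"{name}: missing required field"
        for name in schema["required"]
        if name not in body
    ]
    for name, value in body.items():
        rules = schema["properties"].get(name)
        if rules is None:
            continue  # unknown fields are ignored in this sketch
        is_num = isinstance(value, (int, float)) and not isinstance(value, bool)
        if rules["type"] == "number" and not is_num:
            problems.append(f"{name}: expected a number")
        elif rules["type"] == "string" and not isinstance(value, str):
            problems.append(f"{name}: expected a string")
        elif "enum" in rules and value not in rules["enum"]:
            problems.append(f"{name}: must be one of {rules['enum']}")
    return problems


request_schema = CALCULATOR_CONTRACT["request"]
print(violations({"lhs": 22, "rhs": 7, "op": "divide"}, request_schema))
print(violations({"lhs": "ABC", "rhs": 7, "op": "power"}, request_schema))
```

An empty list means the request is inside the contract and a 200 is expected; anything else is a request for which the provider should answer with a 400.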

Generating Contract Tests from the API spec

Similarly, I could also generate these tests. So what ends up happening is that, from a resiliency testing point of view and from a contract testing point of view, a lot of this can be taken care of for you without you having to write all of these things. So this much feedback is shifted left to the developers, and they can get this feedback in their IDE. So that quickly explains what a contract test can do. Now, how does doing this help you improve your resiliency? There are two parts to it. One is that you don’t really need to keep sitting and manually verifying, or writing automated API tests, for things like these. These automated tests can be generated in the developer’s IDE, and developers get feedback on whether they’re sticking to the contract.

And if things outside the contract are presented to the provider, then the provider can gracefully handle them on the API side and respond back with valid status codes and response messages. That reduces the number of things you have to worry about from a resiliency point of view. Now you can take this even further and try a lot of the boundary case conditions. You can do several other kinds of testing, including fault injection. And that’s the next section.
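As a rough picture of how tests can be generated from a contract instead of written by hand, the sketch below enumerates requests that a schema allows and runs them against a stand-in provider. It covers only the positive side. The schema layout, the sample values and the `provider` function are assumptions for illustration and do not describe how any specific tool generates its tests.

```python
import itertools

SCHEMA = {
    "lhs": {"type": "number"},
    "rhs": {"type": "number"},
    "op": {"type": "string", "enum": ["add", "subtract", "multiply", "divide"]},
}

# Sample values per type; a real generator would pick many more.
SAMPLES = {"number": [30.1, -7]}


def candidate_values(rules: dict) -> list:
    """Values this sketch tries for one field: the enum if present, else samples."""
    return rules.get("enum") or SAMPLES[rules["type"]]


def generate_positive_requests(schema: dict) -> list[dict]:
    """Every combination of candidate values is a request the contract allows."""
    names = list(schema)
    value_lists = [candidate_values(schema[name]) for name in names]
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


def provider(body: dict) -> int:
    """Stand-in for the real API: returns only the HTTP status."""
    valid = (
        isinstance(body.get("lhs"), (int, float))
        and isinstance(body.get("rhs"), (int, float))
        and body.get("op") in SCHEMA["op"]["enum"]
    )
    return 200 if valid else 400


requests = generate_positive_requests(SCHEMA)
failures = [body for body in requests if provider(body) != 200]
print(f"{len(requests)} generated, {len(failures)} failed")
```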

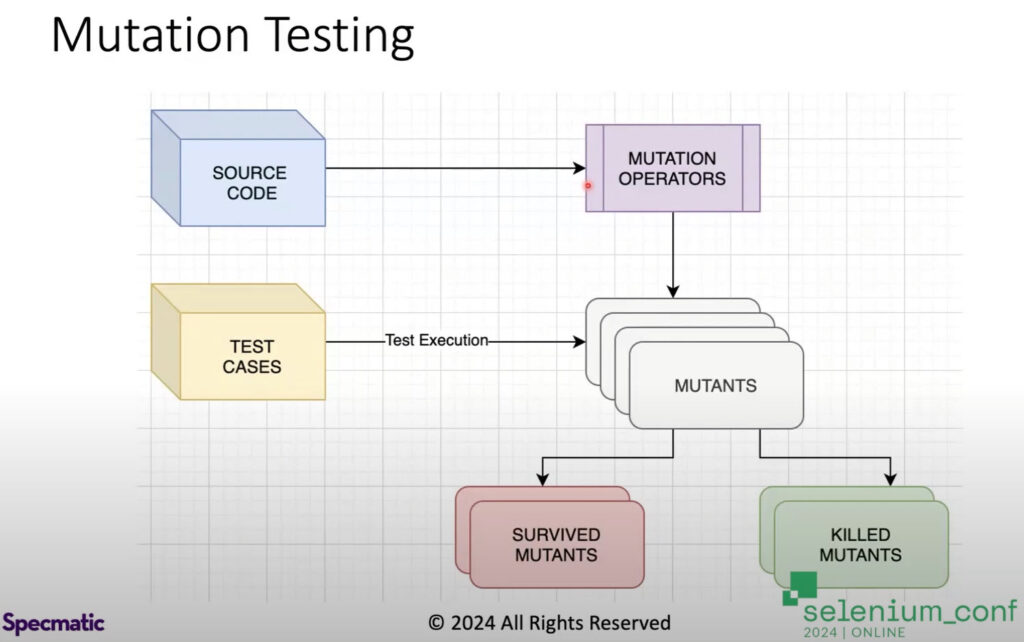

Now that we understand this so far, what you will notice in this specific example that I explained to you is that we’ve taken inspiration from a couple of different styles of testing, and I think it’s important to understand the different styles that were at play. You will notice here that we intentionally introduced a variant of the request that was not a valid variant, and we wanted to make sure that it came back with a 400 bad request. So that’s a little bit of what mutation testing is all about.

The genesis and benefits of Mutation Testing

I’m going to take a few minutes to explain what mutation testing is. I also have a sample example to walk you through. But the idea is very simple. I think it’s worth a little side tour here to talk about some of the genesis of this. Back in the days when Agile was in its glory days, one thing that caught on like a wildfire in a lot of companies was having “code coverage”. Everything should have at least 70% code coverage. Some organizations went even more crazy and they said 80% or 90%!

What ended up happening is that a lot of organizations put engineers under pressure to ship things, but the criterion was that you had to have 80 or 90% code coverage. So what the engineers ended up doing is they just started writing tests without assert statements or without really verifying anything. That would give you the code coverage, which is what the management wanted, but it really didn’t give much benefit because the assertions were not good and the quality of the tests was not great. People just wrote them to tick a box and move forward. And then the leadership woke up after some time and they’re like, “Hey! We’ve invested so much, we’re getting people to write all these unit tests, and we have like 80% coverage, but still the bug leakage is very high. We’re still not able to catch these. What’s going wrong?”

That’s where I remember going to a few organizations and saying, well, how do you know the quality of your code and the quality of your tests is good? And they said, of course it is good because the coverage is very high. I was like, coverage just tells you intentionally or accidentally what got covered. It doesn’t tell you anything about the quality of the test. And that’s when mutation testing was introduced into a lot of organizations. So what mutation testing does is it takes your source code, it mutates your source code. For example, if there’s an “and” condition, it’ll turn it into “or” condition, it’ll like start trying to mutate your code, and it’ll produce mutants off that particular code. Then it’ll take your test cases and run against the mutants. And it would expect that you should be able to kill all these mutants.

This means that the test should fail when you run it against the mutant, which shows that the test is actually able to catch silly mistakes or problems in the code. If the mutant survives, that means your tests are no good: they’re not able to catch these mutants. These are intentionally introduced bugs or errors in the code, and you would expect that your tests should catch them. When you run this mutation test, if you’ve written high quality code and high quality tests, then no mutant will survive and everything will be caught.
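The idea of killing a mutant can be shown in a few lines. In this sketch the mutant is written by hand rather than produced by a tool, and the `can_checkout` function and both tests are hypothetical examples.

```python
def can_checkout(logged_in: bool, cart_has_items: bool) -> bool:
    """Original code."""
    return logged_in and cart_has_items


def can_checkout_mutant(logged_in: bool, cart_has_items: bool) -> bool:
    """Mutant: the 'and' condition has been turned into 'or'."""
    return logged_in or cart_has_items


def weak_test(fn) -> bool:
    # Only checks the case where both flags are true, so 'and' and 'or' agree.
    return fn(True, True) is True


def strong_test(fn) -> bool:
    # Also checks an input on which 'and' and 'or' give different answers.
    return fn(True, True) is True and fn(True, False) is False


def mutant_killed(test, original, mutant) -> bool:
    """A mutant is killed when the test passes on the original but fails on the mutant."""
    return test(original) and not test(mutant)


print("weak test kills mutant:", mutant_killed(weak_test, can_checkout, can_checkout_mutant))
print("strong test kills mutant:", mutant_killed(strong_test, can_checkout, can_checkout_mutant))
```

Both tests execute the same line, so they earn the same coverage, but only the second one notices the injected bug.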

An example of a Mutation Test

That’s what will give you confidence: that the mutation score is high. Let’s look at an example, but first, let me show you the tests. What I’m doing here, essentially, is creating durations and verifying that it gives me the closest matching duration for each of a given set of cases. And so what we will do here is we’ll quickly run these tests and see what happens. It’s a very small piece of code, it has only about five tests, and at this point, you will see all five tests have passed. The code here is just trying to find you the closest matching duration for a given last-seen time.

In a lot of apps, like in WhatsApp, for example, you’ll see last seen 1 minute ago or 5 hours ago or 5 days ago. So it gives you the closest matching duration based on your last activity time. That’s what this little program is doing. So now let me run this guy and let’s see what happens. I’m running mutation testing. I’m using a tool called PIT for mutation testing. And then I’m also getting JaCoCo to produce my coverage report.

So it’s done its magic. Here it says I have 100% branch coverage. I have 100% line coverage. So everything in this code is fully covered. So this should mean that essentially, the code that I have written is very high quality, and it’s hundred percent covered, so there shouldn’t be any problem at all. And I should be able to ship this into production. Correct?

I’ve got 100% line coverage. I’ve got 100% branch coverage. What could possibly go wrong? Well, let’s quickly look at what the mutation testing report says. So, mutation testing in this case generates a very similar report. And you would see that it’s saying, well, sure, you have full coverage in terms of line coverage, 100%, but your mutation coverage is actually only 17%. So, out of six mutants that we produced, your tests were able to catch only one of the mutants, which means the quality of the tests is pretty poor. So let’s look at what actually went on here. I’m going to zoom in on this a little bit.

Here it gives you the list of mutations that it performed, and it highlights the ones that survived in red, which is bad news, and the ones that it killed in green, which is good news. So you’d see one that says “Replaced long subtraction with addition”. So there is a subtraction that it replaced with addition over here. After making that change, ideally your test should have failed. But in this case, my test did not fail; it still continued to pass. So what do you think went wrong? Let’s go look at the tests. You would see that by mistake, instead of saying “assert equals”, I’ve said “assert not null”. So, whatever came back, just make sure that it’s not null. I’m not really asserting that it should be “1 minute ago”, which is what it should have done. I’ve just asserted it’s not null.

And while this might look silly, I can tell you there’s so many places where you would see tests written this way. So let’s try and fix this. I’m just going to uncomment this out. And then let’s run all our tests. Let’s make this assert “equals”. And then I’m going to do the same thing. Just run this. Notice that it’s pretty fast.

It gives you the mutation coverage pretty quickly. Branch coverage is, of course, still 100%. But now you’ll see the mutation score has also gone to 100%, which means that our tests were able to catch all the different mutants that it produced. So this is one example of a practice that helps make sure your code is very resilient. It’s like you’re intentionally introducing these mutants and trying to verify whether the tests break or not.

So we are doing this more from a unit test point of view. The point of explaining this is to make sure that you understand the concept. So that’s the first concept that we took inspiration from. There is a second concept that I’m going to introduce that we can take inspiration from.

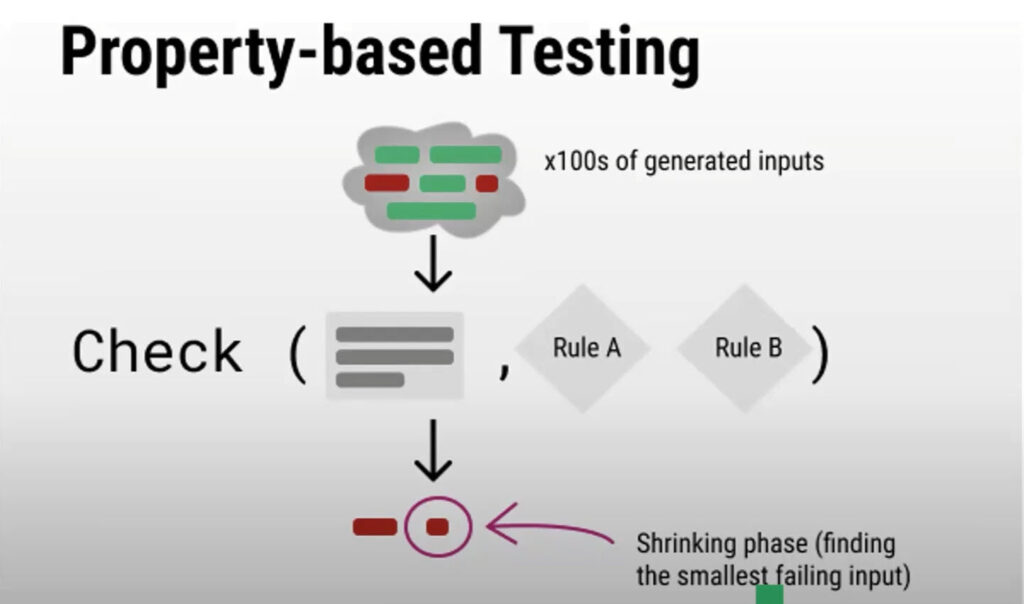

Property-Based Testing and its benefits

The second concept that is important to understand is essentially Property-Based testing. In property based testing, we define the system as a set of properties that it adheres to and then generate lots and lots of different data to make sure that under all those conditions, those properties are still held true by the system. So what does that mean? Let’s look at an example to see how this works.

Let’s look at this test. What I’m trying to do is reverse a list, and I want to make sure that my reverse method is actually doing what it’s supposed to do. One way that a lot of you might be used to writing unit tests is something like this. I would say, here’s a list: one, two, three. This is my collection that I’m interested in testing, and specifically the reverse method inside that. And then I would say reverse this, and then assert that it contains exactly three, two, one in that order after reversing. This is typically how one would write unit tests for testing things like this. But this may not be sufficient.

Then you might think, okay, what happens if the list contains only one element? Or what happens if the list is empty? Or what happens if the list has hundreds of elements? Do I need to sit and manually test each of these combinations? That might be too much to write. So what ends up happening is, there is a property you can state: when I reverse the list twice, I should get back the original list. That’s now a property of “reverse”. Reversing something twice gives you back the original thing.

So what we are trying to say here is that reverse of reverse should be equal to the original list. And the original list now could be an empty list, could be a single item list, could be a multi item list. And essentially if you run this test, then it would test all these different combinations for you. I’m just going to quickly run this. This is really not showing you the power of property based testing yet, but this is just showing you how to think in terms of properties. This is a round trip test, we would say, where you’re doing something twice to get back to the original thing.
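A round trip property of this kind might look as follows. This is a minimal sketch with hand-picked inputs; the `reverse` helper and the four example lists are assumptions standing in for the code shown in the demo.

```python
def reverse(items: list) -> list:
    """Function under test: returns a reversed copy of the list."""
    return items[::-1]


def holds_round_trip(items: list) -> bool:
    """Property: reversing twice gives back the original list."""
    return reverse(reverse(items)) == items


# Hand-picked inputs: empty, single item, a few items, many items.
CASES = [[], [1], [1, 2, 3], list(range(100))]

results = [holds_round_trip(case) for case in CASES]
print(results)
```

One design note: the round trip property would also hold for a `reverse` that returns its input unchanged, so it complements an example-based check such as `reverse([1, 2, 3]) == [3, 2, 1]` rather than replacing it.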

And that’s one of the common ways in which people generally do it. So you should see four tests and all four tests passing. This is fine, but I still had to write all these different combinations myself. So can I do something even better than this? Let’s go look at a slightly different property test, which will make this even more interesting for you. I have a property that I should be able to sort a list. And after I sort the list, everything should be in sorted order. So what I’m doing is I’m giving it an unsorted list.

I’m saying sort ascending. And then I’m verifying it’s sorted. Now, in this case, when I run this test, it’s going to go ahead and test it with several different combinations. Then it will tell me that with all those combinations, your sorting is working fine. But let’s say that, for some reason, I ended up making a mistake in my algorithm. Let’s say I did something like this. That’s my main method that does the sorting. Then what should happen? Of course, this one still passed because I’m not really checking for the zero condition.

And you can see that this guy is warning me. But the mistake could be me actually putting zero, which means they are equal. Okay, will this catch it? It certainly did. What was the error message? It says it tried 22 different combinations. It tried one of these combinations and after sorting, it got this result back versus it should have got minus ten and one back. And it’s tried several different combinations for you. And finally, it distills down to one example that can demonstrate where things went wrong. So again, notice here, unlike in the previous case, I didn’t write all those different combinations myself. I simply defined this as a property.

And this allowed me to simply go ahead and generate several different combinations. So that’s an example of property based testing, where we are defining a property. In the previous case, it was a round trip. In this case, we are saying that after sorting ascending, this is how the result should look. And this will then allow us to generate a whole bunch of different examples and make sure that, under all kinds of conditions, our sorting is still working as expected. So this is the second inspiration, from a testing and optimizing-your-testing point of view, to make things more resilient. So now let’s try and put all of these together.
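To show what generating the inputs and distilling a failure down to one small example involves, here is a hand-rolled sketch that uses only the standard library instead of a property-based testing framework. The bubble sort, the buggy comparator and the shrinking strategy are all assumptions for illustration, and the property checks only the ordering of the output.

```python
import random


def sort_ascending(items: list, compare) -> list:
    """Bubble sort driven by a comparator that returns <0, 0 or >0."""
    result = list(items)
    for end in range(len(result) - 1, 0, -1):
        for i in range(end):
            if compare(result[i], result[i + 1]) > 0:
                result[i], result[i + 1] = result[i + 1], result[i]
    return result


def correct_compare(a, b) -> int:
    return (a > b) - (a < b)


def buggy_compare(a, b) -> int:
    return 0 if a > b else -1  # bug: "greater than" is reported as "equal"


def is_sorted(items: list) -> bool:
    return all(a <= b for a, b in zip(items, items[1:]))


def shrink(failing: list, prop) -> list:
    """Drop elements one at a time for as long as the property keeps failing."""
    current, shrunk = list(failing), True
    while shrunk:
        shrunk = False
        for i in range(len(current)):
            candidate = current[:i] + current[i + 1:]
            if not prop(candidate):
                current, shrunk = candidate, True
                break
    return current


def check_property(prop, tries: int = 100, seed: int = 0):
    """Return None if the property held, else (attempt number, shrunk counterexample)."""
    rng = random.Random(seed)
    for attempt in range(1, tries + 1):
        items = [rng.randint(-100, 100) for _ in range(rng.randint(0, 10))]
        if not prop(items):
            return attempt, shrink(items, prop)
    return None


print(check_property(lambda xs: is_sorted(sort_ascending(xs, correct_compare))))
print(check_property(lambda xs: is_sorted(sort_ascending(xs, buggy_compare))))
```

With the correct comparator no counterexample is found. With the buggy one, the checker reports how many inputs it tried and a two-element list that is enough to reproduce the failure.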

The power of combining Mutation Testing and Property-Based Testing

Remember this example? Let’s go back to it. I want to take some of these learnings that I showed you in terms of mutation testing and property based testing, and bring them back to a more real-world example where we are trying to test APIs, and see how they can be leveraged. So let’s say my system under test is the BFF layer. This is what I’m interested in testing. These are my dependencies for the BFF, and I want to abstract the dependencies away. And the app itself is the test here, but I don’t really want the app. I’m going to replace that with a different thing that would test the BFF for me. And I’m going to stub out the domain service and the Kafka piece so that I’m able to test under all different conditions and make sure that the BFF itself is resilient. So by doing this, I would be able to inject faults, because that’s under my control now, and see if the BFF works fine.
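The arrangement described here, a system under test with both of its dependencies replaced by stubs that the test controls, can be sketched in a few lines. All class names, method names, status codes and payloads below are hypothetical; the point is only that a fault can be injected because the test owns the dependency.

```python
class DomainServiceDown(Exception):
    """Raised by the stub to simulate an unavailable dependency."""


class StubDomainService:
    def __init__(self, fail: bool = False):
        self.fail = fail

    def create_product(self, product: dict) -> dict:
        if self.fail:
            raise DomainServiceDown("injected fault")
        return {"id": 8}


class InMemoryTopic:
    """Stands in for a Kafka topic; it simply records published messages."""

    def __init__(self):
        self.messages = []

    def publish(self, message: dict) -> None:
        self.messages.append(message)


class Bff:
    def __init__(self, domain_service, topic):
        self.domain_service = domain_service
        self.topic = topic

    def post_product(self, body: dict) -> tuple[int, dict]:
        try:
            created = self.domain_service.create_product(body)
        except DomainServiceDown:
            # Degrade gracefully instead of leaking the failure to the app.
            return 503, {"error": "product service unavailable"}
        self.topic.publish({"event": "product-created", "id": created["id"]})
        return 201, created


# Happy path: the dependency answers and a message is published.
topic = InMemoryTopic()
happy = Bff(StubDomainService(), topic).post_product({"name": "iPhone"})

# Fault injection: the dependency is down and nothing is published.
faulty_topic = InMemoryTopic()
faulty = Bff(StubDomainService(fail=True), faulty_topic).post_product({"name": "iPhone"})

print(happy, len(topic.messages))
print(faulty, len(faulty_topic.messages))
```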

I might be able to give it all kinds of boundary case inputs and make sure that it performs correctly. But something has to guide us in terms of how to give all these boundary case tests and so forth. And that’s where we leverage an OpenAPI specification and we derive properties out of it. So in the previous case, you saw that you had to write the property by hand, which is fine. But in this case, what we’re going to do is we’re going to take the OpenAPI specification or the AsyncAPI specification, we’re going to derive properties out of it at runtime and then generate tests on top of that to be able to test if your BFF is resilient or not. Okay, so let’s quickly jump into live demo and see what happens. So in this example, I’m going to run my contract test and I’ve introduced an error intentionally here so that we can see what happens.

This is the test that I have – this is saying where the application is running, the host and port. I am saying where my stubs are for the dependencies that I have and where Kafka is running. Of course the real Kafka broker is not running – we’ve stubbed that out. We have an in memory stubbed out version of the Kafka broker, and then we start our application and then we have a teardown. That’s it. But where are the tests? I have a setup and I have a teardown. I don’t see any tests here.

Automatic test generation

So let’s see what happened here. Nothing should have run because I’ve not written any tests, right? I expected nothing to run, but I see 90 tests have run here: 81 have passed and nine have failed. Where did all these tests come from? Let’s look at one of these tests, which is saying: I’m testing a positive scenario on POST /products and I’m testing for a 201 success case. Okay, so what we will see here is that our test has made a POST request to /products, it has sent this payload as the input to the request, and then it’s got a response back from the server saying 200 OK, and it’s given an ID of 8.

And it’s said, “Well, if that’s the case, then all of this looks good and I’m going to pass this test.” And then it’s got another test where it’s doing something very similar. iPhone book one. If you notice in the previous case we were doing iPhone Gadget 100. In this case we are doing iPhone book inventory one. And again we got a result back and everything looks good. So this guy is saying, well, I’ll pass this test. So it’s generated a whole bunch of these request payloads and it’s verified the response payload and made sure that it is as per the properties that we have derived from the specification.

Where is our specification? Let’s look at our specification. That is the BFF API specification. This is an OpenAPI specification. And essentially in this you would see there are three different paths: find-available-products, /orders and /products. /products has only one method, which is a POST method, and it can respond back with 201, 400 and 503.

Okay, so this is really what the testing tool that we’re using here has gone and looked at. It has derived certain properties from it, and based on that it has generated a bunch of positive tests for us. But it didn’t just stop at a bunch of positive tests. You would also see that it’s generated a bunch of negative tests for us. So let’s look at one of the negative tests and see what happened here. So again, we’re looking at POST /products and it’s made a request, and notice that this time the test name also says: request body name string mutated to null.

Okay, so it’s intentionally mutating the name to null, but name itself, as you will see here in the request body, is a mandatory field and it’s a non-nullable field, so it cannot be null. So what we have taken from mutation testing is that I’m producing a mutant, not of the source code in this case, but of my request. I’m sending that request and I’m seeing whether the developer has implemented the validations correctly or not. And sure enough, when I send a null, the server has responded back with a 400 bad request, and it’s given me the error message in the format I’m expecting. It’s given a parse error saying you cannot instantiate this, which is fine. Maybe it’s a little too leaky an abstraction at this point. It could maybe have summarized this a little better, but that’s fine. At least I’m happy that there is a validation in place!
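Mutating the request rather than the source code can be sketched as follows: start from one valid body and, for each field, substitute values that the declared type does not allow. The field names `type` and `inventory`, the sample body and the mutation table are assumptions made for this example, and the naming merely imitates the test name quoted above.

```python
VALID_PRODUCT = {"name": "iPhone", "type": "gadget", "inventory": 100}

SCHEMA = {
    "name": {"type": "string", "nullable": False},
    "type": {"type": "string", "nullable": False},
    "inventory": {"type": "integer", "nullable": False},
}

# For each declared type, JSON values that the schema does not allow.
MUTATIONS = {
    "string": [("null", None), ("number", 10), ("boolean", True)],
    "integer": [("null", None), ("string", "abc"), ("boolean", True)],
}


def generate_negative_tests(schema: dict, valid_body: dict) -> list[tuple]:
    """Return (test name, mutated request body, expected status) triples."""
    tests = []
    for field, rules in schema.items():
        for label, bad_value in MUTATIONS[rules["type"]]:
            mutated = {**valid_body, field: bad_value}  # one field changed at a time
            name = f"request body {field} {rules['type']} mutated to {label}"
            tests.append((name, mutated, 400))
    return tests


negative_tests = generate_negative_tests(SCHEMA, VALID_PRODUCT)
for name, body, expected_status in negative_tests[:3]:
    print(expected_status, name, body)
```

Each mutated body differs from the valid one in exactly one field, so a test that does not return 400 points directly at the validation that is missing.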

Question:

Sometimes the backend for frontend is passing an incorrect response while the correct data is saved in Redis, and they are also then getting the correct response from Kafka itself. So how can this be identified?

Answer:

I’ll answer the second piece first. Essentially, when a downstream dependency like Kafka or Redis or whatever is giving you back a response, you can have control over what it is going to give you, because you are able to stub it out. But if you’re using a real Kafka, then you don’t have control over it. And that’s generally the problem, where you won’t be able to do all kinds of resiliency testing. But in this case you’re actually able to stub it out. And in your test you’d be able to set expectations like I showed you.

If I go into my test resources, you will see there’s a whole bunch of stubs that have actually got generated. And in one of these stubs you will see I’ve intentionally put a delay of 5 seconds. Now I’m controlling when the domain service responds back. Or, in the Kafka case, it could be that Kafka did not create a topic and I’m still putting something on the topic, or things like that. I’m intentionally introducing a delay over here and I’m making sure that my service, when it times out, is handling it gracefully. So hopefully that’s the answer: don’t rely on the actual database or the actual service or things like that, because you don’t have control over that. Instead, you can stub it out, induce the fault, and have control over that. And those can be controlled through your tests.
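A delayed stub and a caller that times out gracefully can be sketched with the standard library alone. The function names, the payload and the status codes are hypothetical, and the delay is scaled down from the 5 seconds mentioned above so that the example runs quickly.

```python
import concurrent.futures
import time


def slow_domain_service(delay_seconds: float):
    """Build a stub for the domain service that answers only after a delay."""
    def respond(product: dict) -> dict:
        time.sleep(delay_seconds)
        return {"id": 8}
    return respond


def bff_post_product(product: dict, domain_service, timeout_seconds: float):
    """Call the dependency with a deadline and degrade to 503 instead of hanging."""
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(domain_service, product)
    try:
        return 201, future.result(timeout=timeout_seconds)
    except concurrent.futures.TimeoutError:
        return 503, {"error": "product service timed out"}
    finally:
        # Do not make the caller wait for the slow call to finish.
        executor.shutdown(wait=False)


fast = bff_post_product({"name": "iPhone"}, slow_domain_service(0.0), timeout_seconds=1.0)
slow = bff_post_product({"name": "iPhone"}, slow_domain_service(0.5), timeout_seconds=0.05)
print(fast)
print(slow)
```

Because the delay lives in the stub, the test decides exactly when the dependency is slow, which is not possible against a real service.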